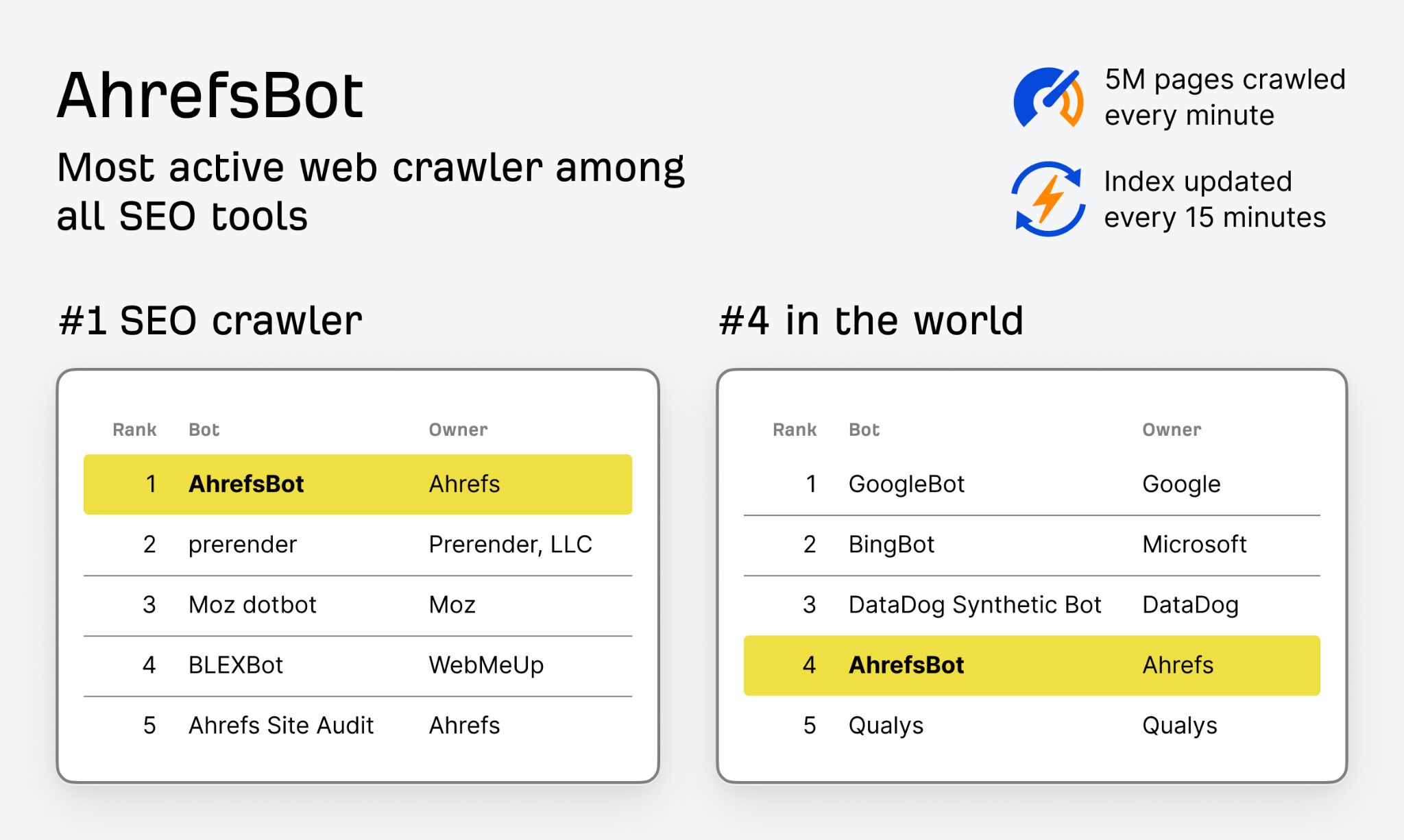

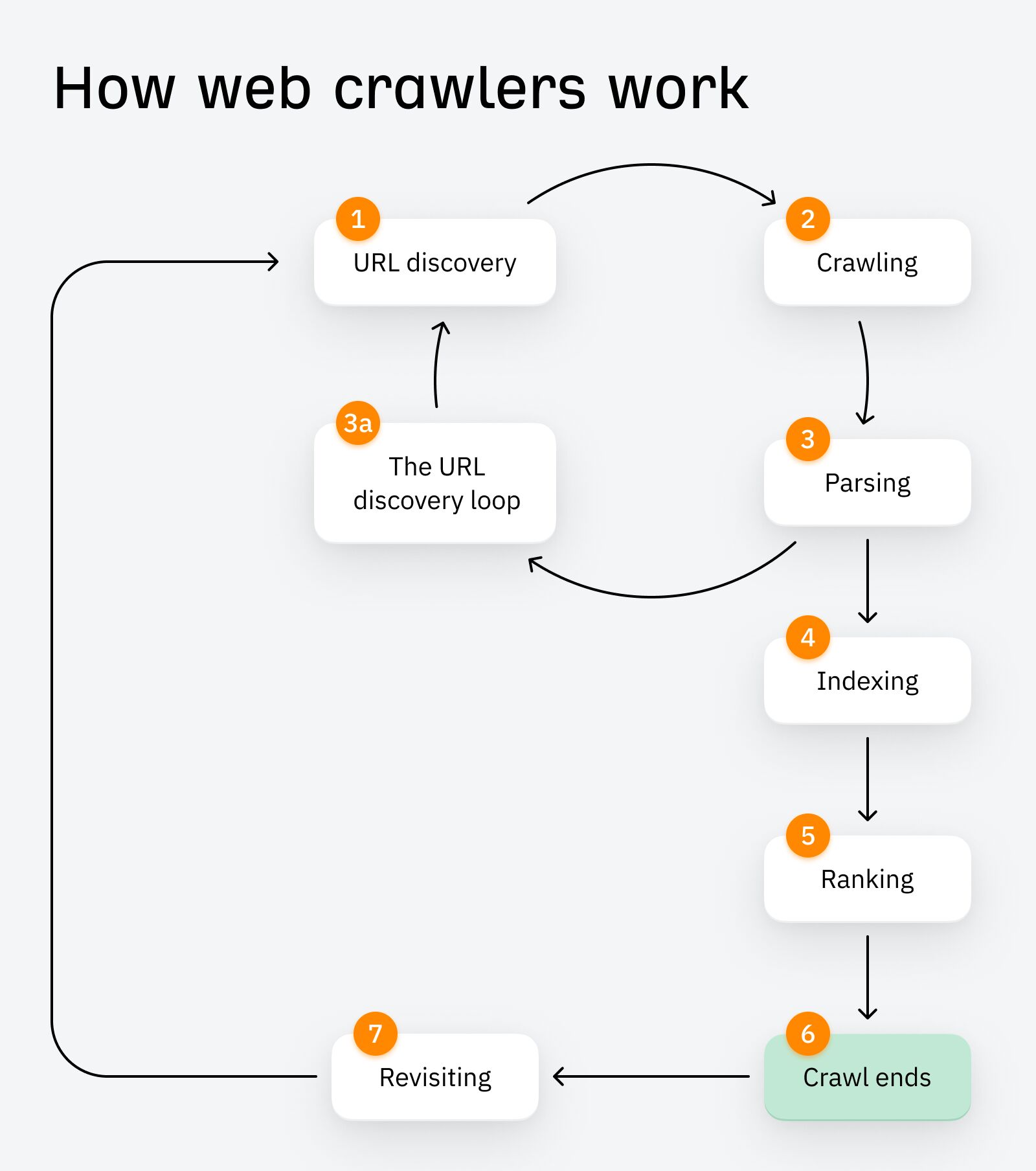

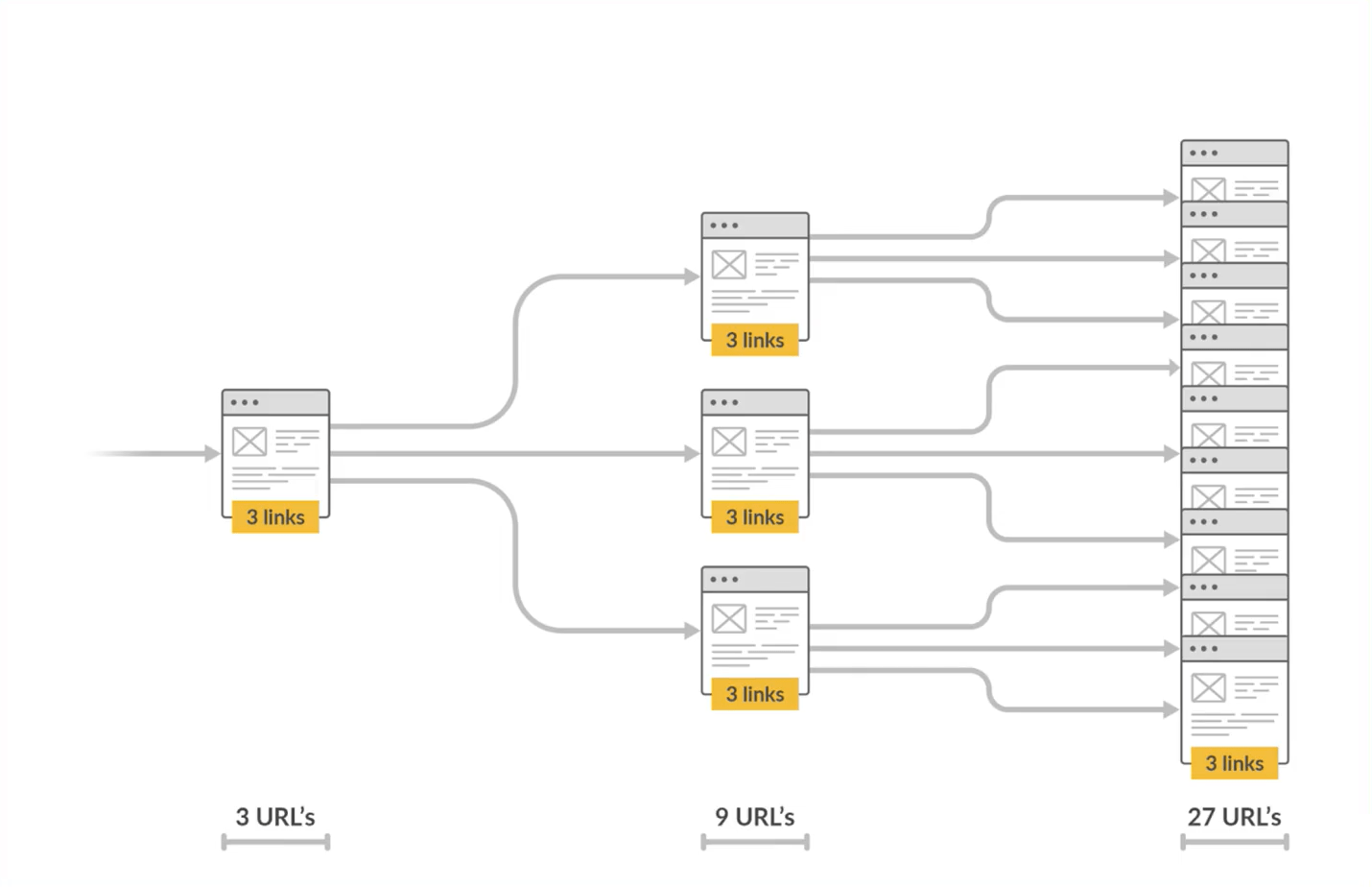

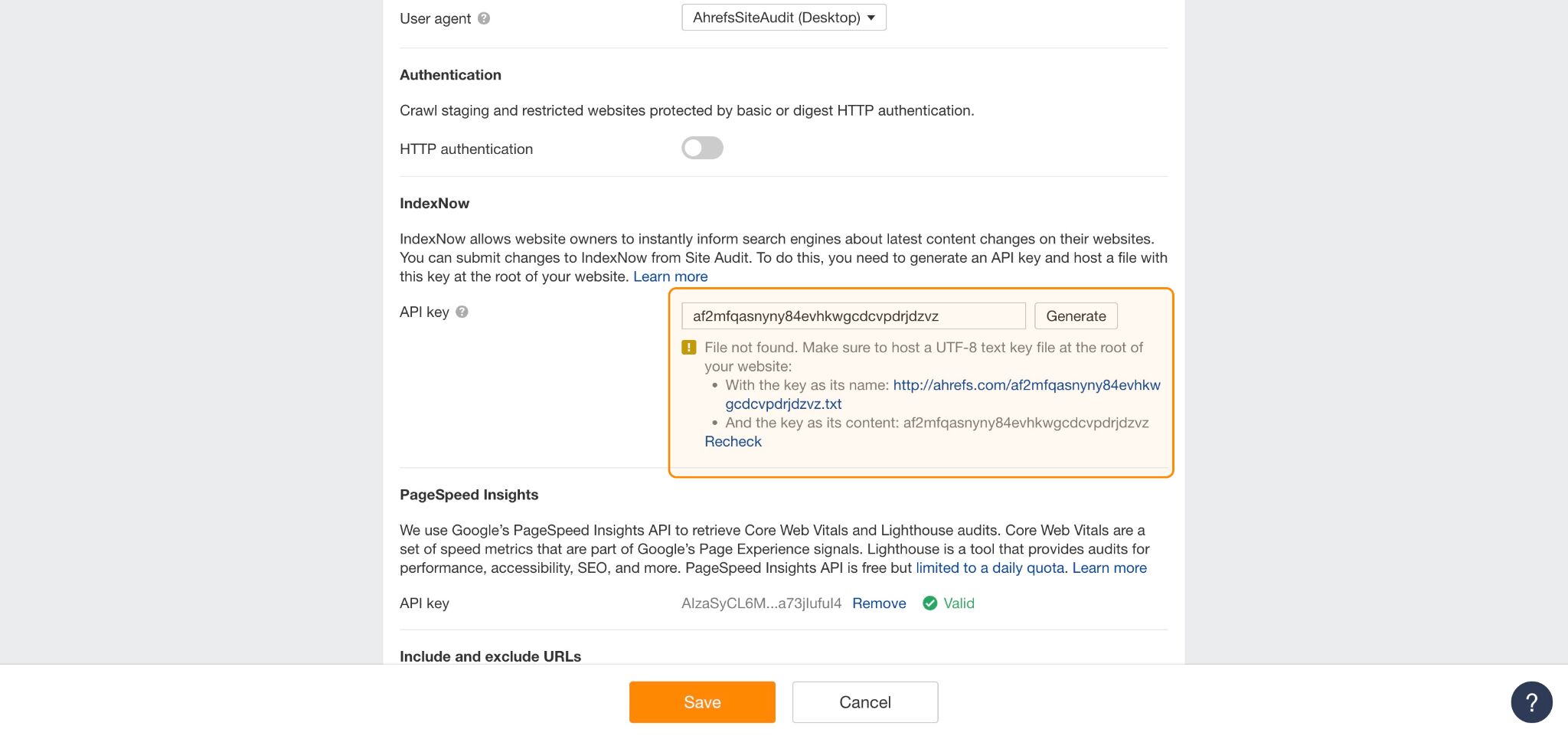

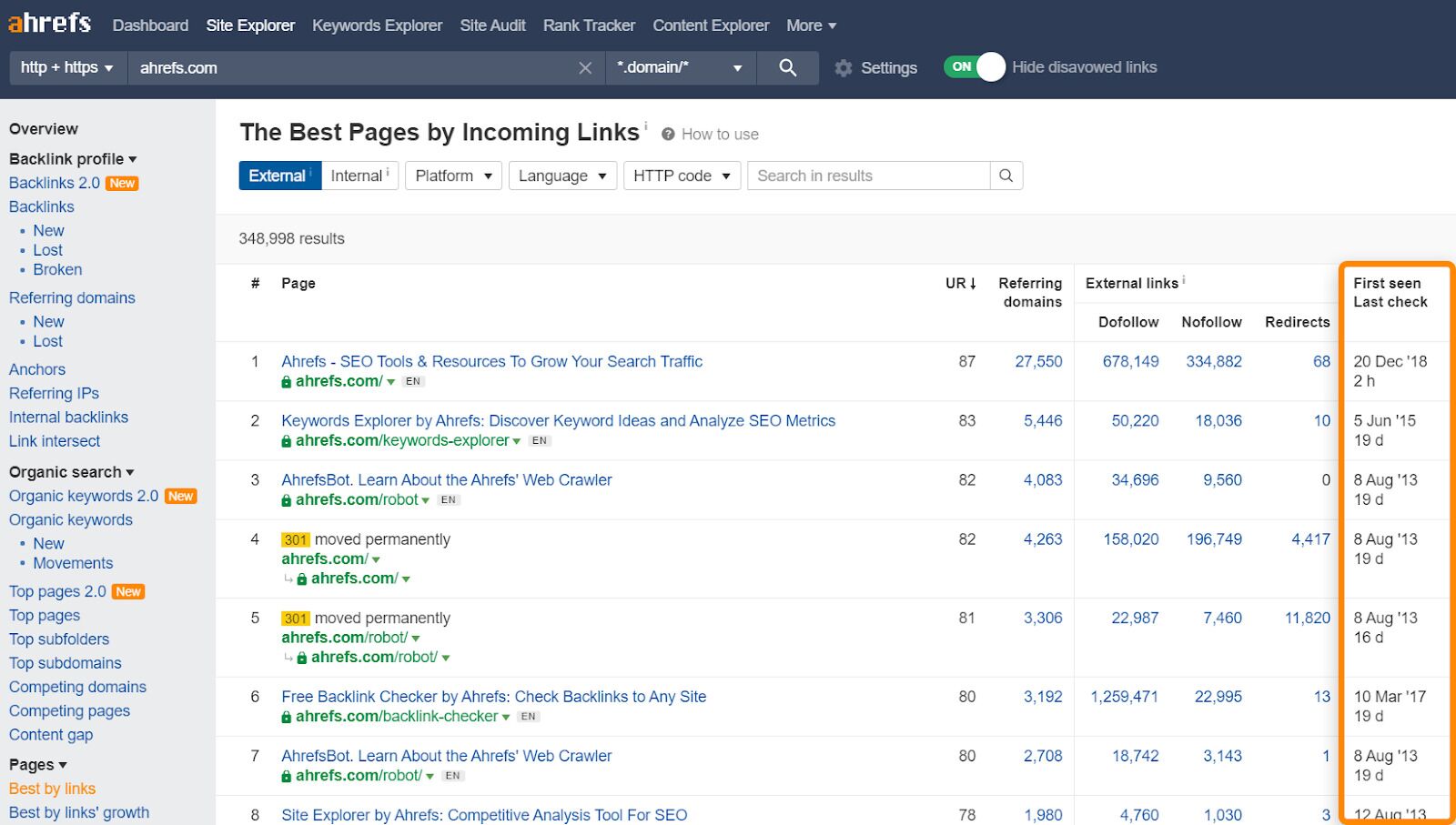

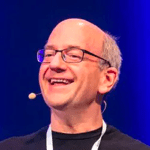

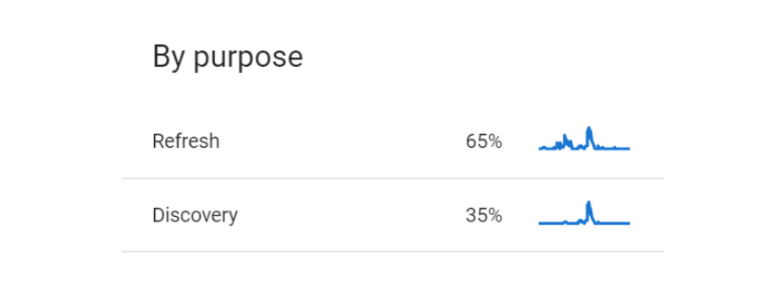

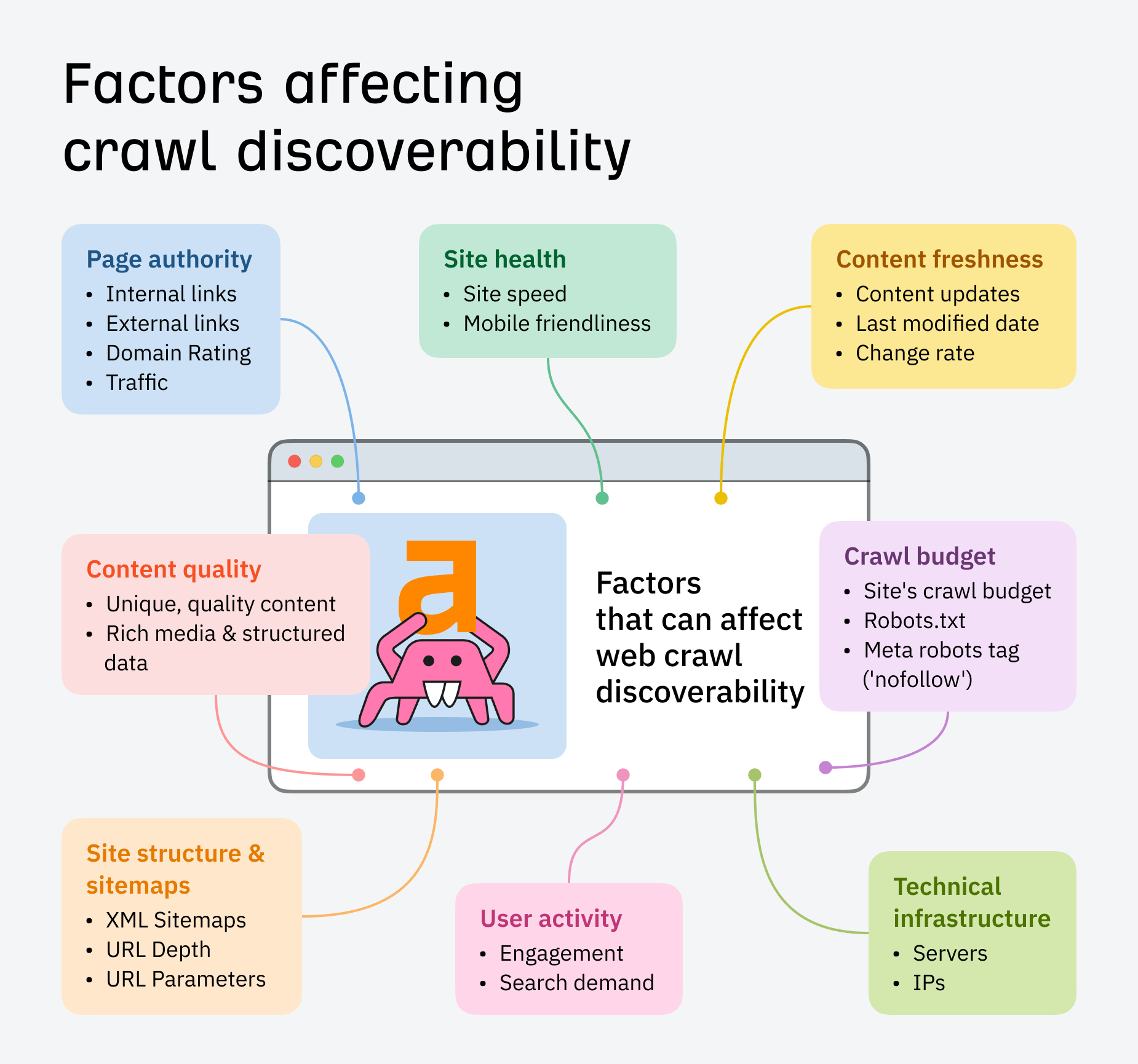

You mightiness person heard of website crawling earlier — you whitethorn adjacent person a vague thought of what it’s astir — but bash you cognize wherefore it’s important, oregon what differentiates it from web crawling? (yes, determination is simply a difference!) Search engines are progressively ruthless erstwhile it comes to the prime of the sites they let into the hunt results. If you don’t grasp the basics of optimizing for web crawlers (and eventual users), your integrated postulation whitethorn good wage the price. A bully website crawler tin amusement you however to support and adjacent heighten your site’s visibility. Here’s what you request to cognize astir some web crawlers and tract crawlers. A web crawler is simply a bundle programme oregon publication that automatically scours the internet, analyzing and indexing web pages. Also known arsenic a web spider oregon spiderbot, web crawlers measure a page’s contented to determine however to prioritize it successful their indexes. Googlebot, Google’s web crawler, meticulously browses the web, pursuing links from leafage to page, gathering data, and processing contented for inclusion successful Google’s hunt engine. Web crawlers analyse your leafage and determine however indexable oregon rankable it is, which yet determines your quality to thrust integrated traffic. If you privation to beryllium discovered successful hunt results, past it’s important you acceptable your contented for crawling and indexing. Did you know? AhrefsBot is simply a web crawler that: There are astir 7 stages to web crawling: When you people your leafage (e.g. to your sitemap), the web crawler discovers it and uses it arsenic a ‘seed’ URL. Just similar seeds successful the rhythm of germination, these starter URLs let the crawl and consequent crawling loops to begin. After URL discovery, your leafage is scheduled and past crawled. Content similar meta tags, images, links, and structured information are downloaded to the hunt engine’s servers, wherever they await parsing and indexing. Parsing fundamentally means analysis. The crawler bot extracts the information it’s conscionable crawled to find however to scale and fertile the page. Also during the parsing phase, but worthy of its ain subsection, is the URL find loop. This is erstwhile recently discovered links (including links discovered via redirects) are added to a queue of URLs for the crawler to visit. These are efficaciously caller ‘seed’ URLs, and steps 1–3 get repeated arsenic portion of the ‘URL find loop’. While caller URLs are being discovered, the archetypal URL gets indexed. Indexing is erstwhile hunt engines store the information collected from web pages. It enables them to rapidly retrieve applicable results for idiosyncratic queries. Indexed pages get ranked successful hunt engines based connected quality, relevance to hunt queries, and quality to conscionable definite different ranking factors. These pages are past served to users erstwhile they execute a search. Eventually the full crawl (including the URL rediscovery loop) ends based connected factors similar clip allocated, fig of pages crawled, extent of links followed etc. Crawlers periodically revisit the leafage to cheque for updates, caller content, oregon changes successful structure. As you tin astir apt guess, the fig of URLs discovered and crawled successful this process grows exponentially successful conscionable a few hops. Search motor web crawlers are autonomous, meaning you can’t trigger them to crawl oregon power them on/off at will. You can, however, notify crawlers of tract updates via: An XML sitemap is simply a record that lists each the important pages connected your website to assistance hunt engines accurately observe and scale your content. You tin inquire Google to see recrawling your tract contented via its URL inspection tool successful Google Search Console. You whitethorn get a connection successful GSC if Google knows astir your URL but hasn’t yet crawled oregon indexed it. If so, find retired how to hole “Discovered — presently not indexed”. Instead of waiting for bots to re-crawl and scale your content, you tin usage IndexNow to automatically ping hunt engines similar Bing, Yandex, Naver, Seznam.cz, and Yep, whenever you: You tin set up automatic IndexNow submissions via Ahrefs Site Audit. Search motor crawling decisions are dynamic and a little obscure. Although we don’t cognize the definitive criteria Google uses to find erstwhile oregon however often to crawl content, we’ve deduced 3 of the astir important areas. This is based connected breadcrumbs dropped by Google, some successful enactment documentation and during rep interviews. Google PageRank evaluates the fig and prime of links to a page, considering them arsenic “votes” of importance. Pages earning prime links are deemed much important and are ranked higher successful hunt results. PageRank is simply a foundational portion of Google’s algorithm. It makes consciousness past that the prime of your links and contented plays a large portion successful however your tract is crawled and indexed. To justice your site’s quality, Google looks astatine factors such as: To measure the pages connected your tract with the astir links, cheque retired the Best by Links report. Pay attraction to the “First seen”, “Last check” column, which reveals which pages person been crawled astir often, and when. According to Google’s Senior Search Analyst, John Mueller… Search engines recrawl URLs astatine antithetic rates, sometimes it’s aggregate times a day, sometimes it’s erstwhile each fewer months. But if you regularly update your content, you’ll spot crawlers dropping by more often. Search engines similar Google privation to present close and up-to-date accusation to stay competitory and relevant, truthful updating your contented is similar dangling a carrot connected a stick. You tin analyse conscionable however rapidly Google processes your updates by checking your crawl stats successful Google Search Console. While you’re there, look astatine the breakdown of crawling “By purpose” (i.e. percent divided of pages refreshed vs pages recently discovered). This volition besides assistance you enactment retired conscionable however often you’re encouraging web crawlers to revisit your site. To find circumstantial pages that request updating connected your site, caput to the Top Pages study successful Ahrefs Site Explorer, then: Top Pages shows you the contented connected your tract driving the astir integrated traffic. Pushing updates to these pages volition promote crawlers to sojourn your champion contented much often, and (hopefully) boost immoderate declining traffic. Offering a wide tract operation via a logical sitemap, and backing that up with applicable interior links volition assistance crawlers: Combined, these factors volition besides delight users, since they enactment casual navigation, reduced bounce rates, and accrued engagement. Below are immoderate much elements that tin perchance power however your tract gets discovered and prioritized successful crawling: What is crawl budget? Crawlers mimic the behaviour of quality users. Every clip they sojourn a web page, the site’s server gets pinged. Pages oregon sites that are hard to crawl volition incur errors and dilatory load times, and if a leafage is visited excessively often by a crawler bot, servers and webmasters volition artifact it for overusing resources. For this reason, each tract has a crawl budget, which is the fig of URLs a crawler can and wants to crawl. Factors similar tract speed, mobile-friendliness, and a logical tract operation interaction the efficacy of crawl budget. For a deeper dive into crawl budgets, cheque retired Patrick Stox’s guide: When Should You Worry About Crawl Budget? Web crawlers similar Google crawl the full internet, and you can’t power which sites they visit, oregon how often. But you can use website crawlers, which are similar your ain backstage bots. Ask them to crawl your website to find and hole important SEO problems, oregon survey your competitors’ site, turning their biggest weaknesses into your opportunities. Site crawlers fundamentally simulate hunt performance. They assistance you recognize however a hunt engine’s web crawlers mightiness construe your pages, based on their: The Ahrefs Site Audit crawler powers the tools: RankTracker, Projects, and Ahrefs’ main website crawling tool: Site Audit. Site Audit helps SEOs to: From URL find to revisiting, website crawlers run precise likewise to web crawlers – lone alternatively of indexing and ranking your leafage successful the SERPs, they store and analyse it successful their ain database. You tin crawl your tract either locally oregon remotely. Desktop crawlers similar ScreamingFrog fto you download and customize your tract crawl, portion cloud-based tools similar Ahrefs Site Audit execute the crawl without utilizing your computer’s resources – helping you enactment collaboratively connected fixes and tract optimization. If you privation to scan full websites successful existent clip to observe method SEO problems, configure a crawl successful Site Audit. It volition springiness you ocular information breakdowns, tract wellness scores, and elaborate hole recommendations to assistance you recognize however a hunt motor interprets your site. Navigate to the Site Audit tab and take an existing project, oregon set 1 up. A task is immoderate domain, subdomain, oregon URL you privation to way over time. Once you’ve configured your crawl settings – including your crawl docket and URL sources – you tin commencement your audit and you’ll beryllium notified arsenic soon arsenic it’s complete. Here are immoderate things you tin bash right away. The Top Issues overview successful Site Audit shows you your astir pressing errors, warnings, and notices, based connected the fig of URLs affected. Working done these arsenic portion of your SEO roadmap volition help you: 1. Spot errors (red icons) impacting crawling – e.g. 2. Optimize your contented and rankings based connected warnings (yellow) – e.g. 3. Maintain dependable visibility with notices (blue icon) – e.g. You tin besides prioritize fixes utilizing filters. Say you person thousands of pages with missing meta descriptions. Make the task much manageable and impactful by targeting precocious postulation pages first. Segment and zero-in connected the astir important pages connected your tract (e.g. subfolders oregon subdomains) utilizing Site Audit’s 200+ filters – whether that’s your blog, ecommerce store, oregon adjacent pages that gain implicit a definite postulation threshold. If you don’t person coding experience, past the imaginable of crawling your tract and implementing fixes tin beryllium intimidating. If you do have dev support, issues are easier to remedy, but past it becomes a substance of bargaining for different person’s time. We’ve got a caller diagnostic connected the mode to assistance you lick for these kinds of headaches. Coming soon, Patches are fixes you tin marque autonomously successful Site Audit. Title changes, missing meta descriptions, site-wide breached links – erstwhile you look these kinds of errors you tin deed “Patch it” to people a hole straight to your website, without having to pester a dev. And if you’re unsure of anything, you tin roll-back your patches astatine any point. Auditing your tract with a website crawler is arsenic overmuch astir spotting opportunities arsenic it is astir fixing bugs. The Internal Link Opportunities study successful Site Audit shows you applicable interior linking suggestions, by taking the apical 10 keywords (by traffic) for each crawled page, past looking for mentions of them connected your different crawled pages. ‘Source’ pages are the ones you should nexus from, and ‘Target’ pages are the ones you should nexus to. The much precocious prime connections you marque betwixt your content, the easier it volition beryllium for Googlebot to crawl your site. Understanding website crawling is much than conscionable an SEO hack – it’s foundational cognition that straight impacts your postulation and ROI. Knowing however crawlers enactment means knowing however hunt engines “see” your site, and that’s fractional the conflict erstwhile it comes to ranking.How bash web crawlers interaction SEO?

1. URL Discovery

2. Crawling

3. Parsing

3a. The URL Discovery Loop

4. Indexing

5. Ranking

6. Crawl ends

7. Revisiting

XML sitemaps

Google’s URL inspection tool

IndexNow

1. Prioritize quality

2. Keep things fresh

3. Refine your tract structure

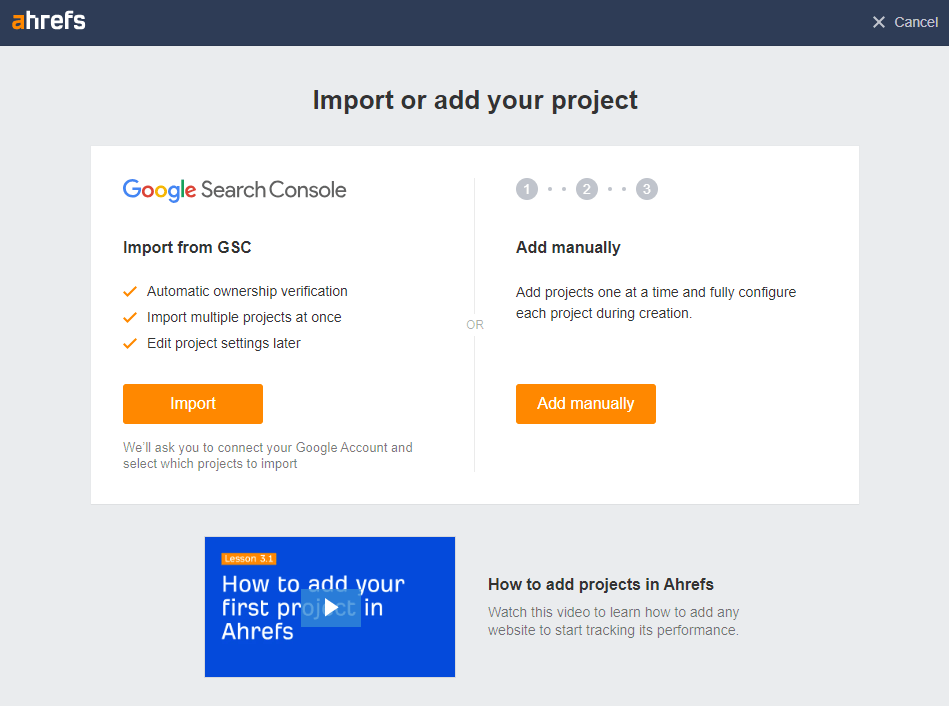

Example: Ahrefs Site Audit

1. Set up your crawl

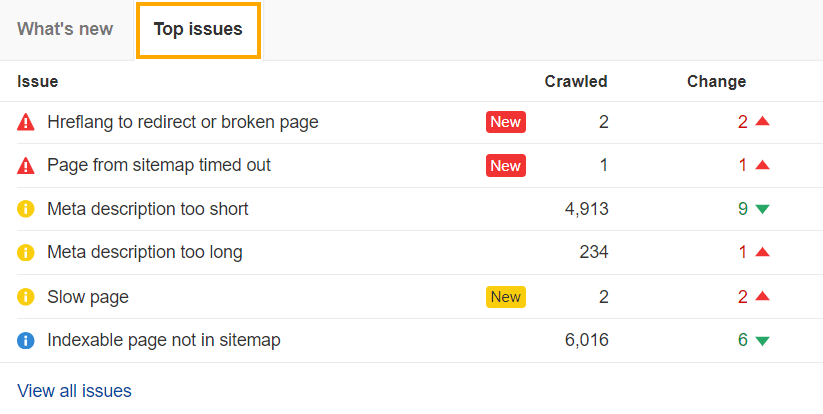

2. Diagnose apical errors

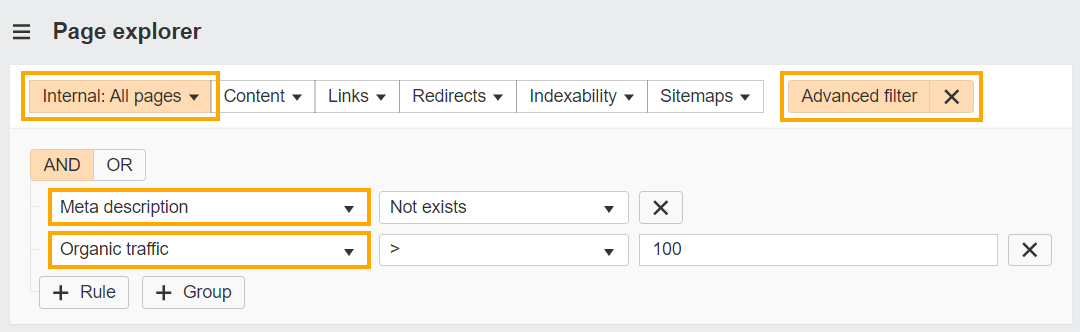

Filter issues

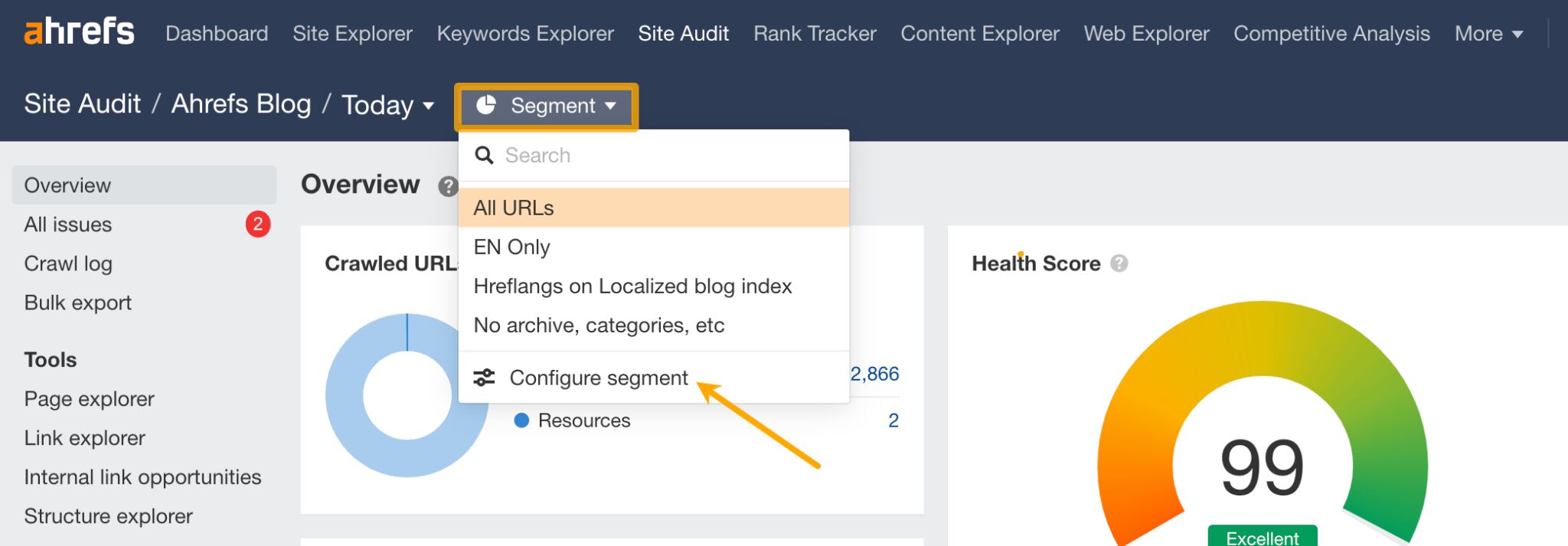

Crawl the astir important parts of your site

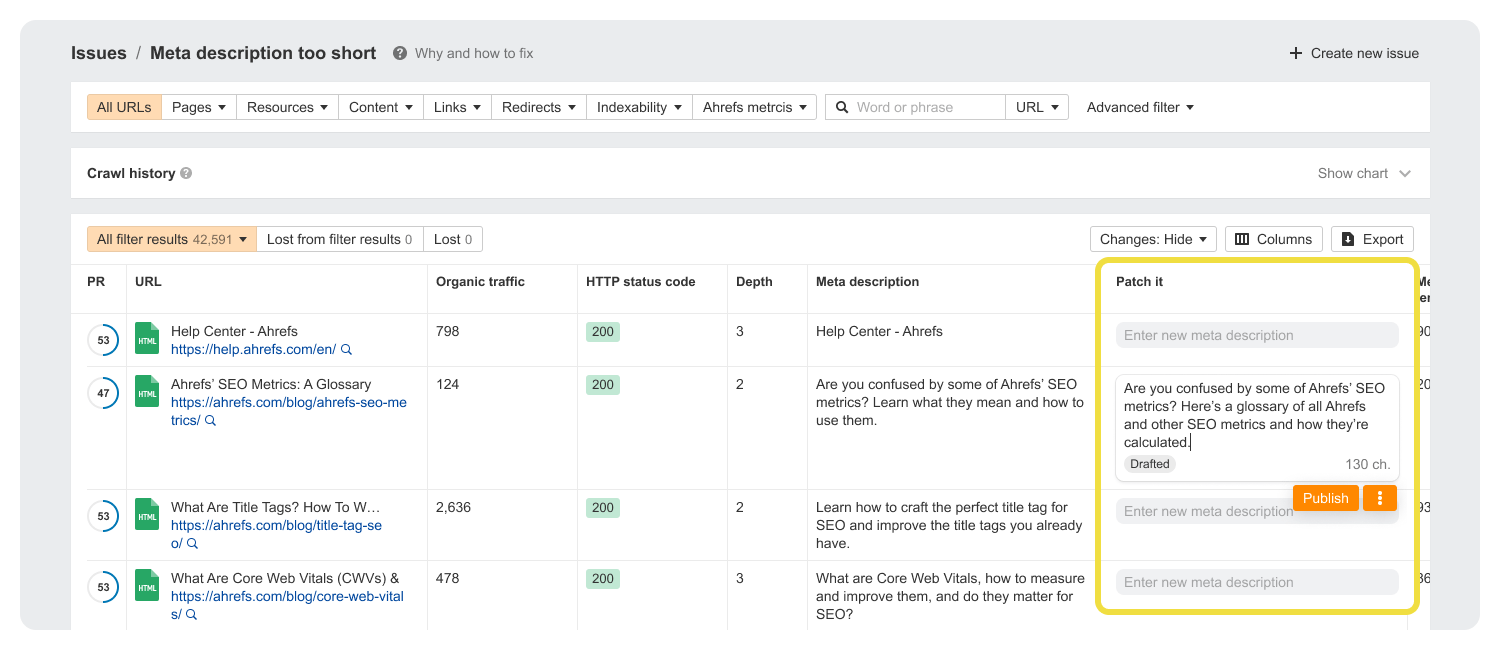

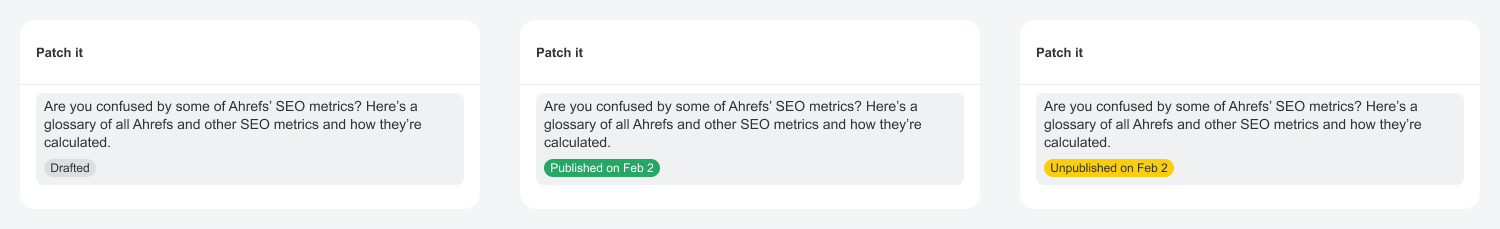

3. Expedite fixes

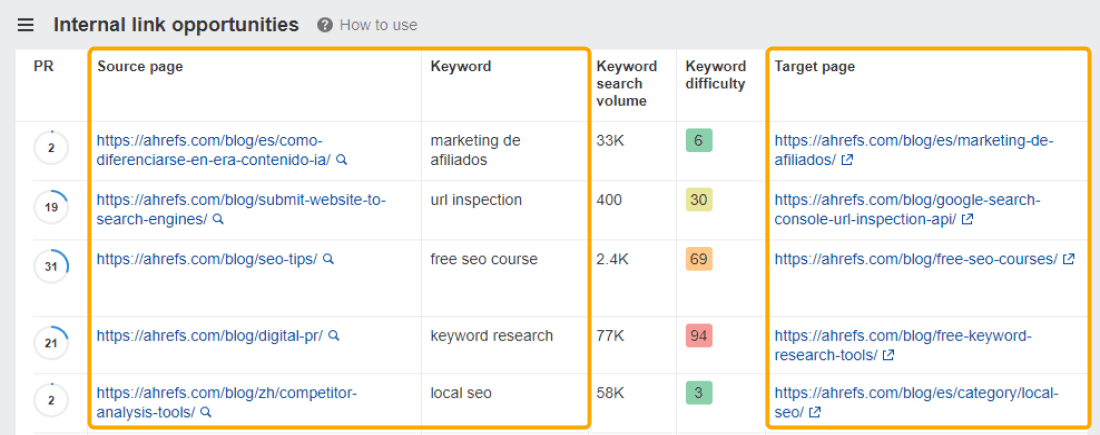

4. Spot optimization opportunities

Improve interior linking

Final thoughts