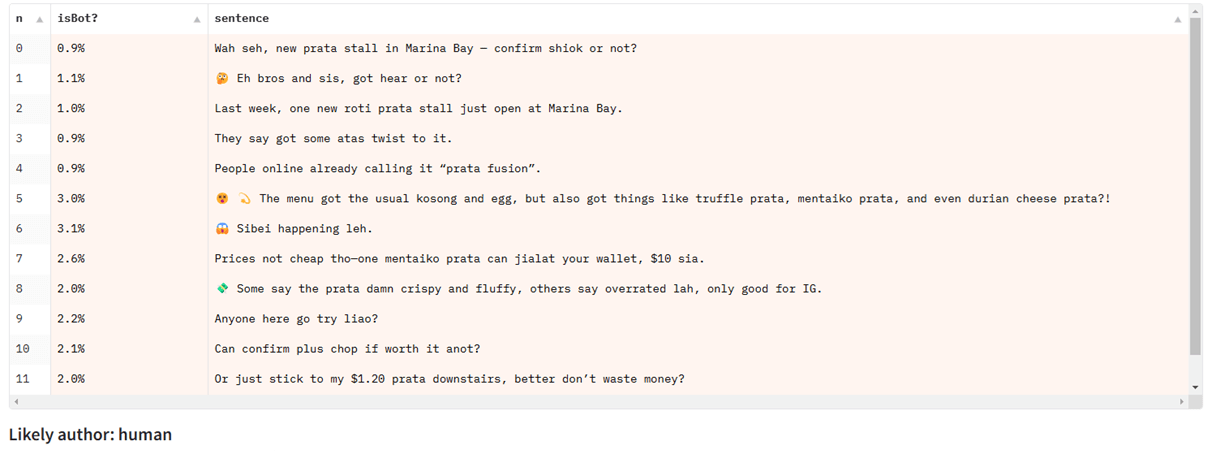

There are tons of tools promising that they can tell AI content from human content, but until recently, I thought they didn’t work.

AI-generated content isn’t as simple to spot as old-fashioned “spun” or plagiarised content. Most AI-generated text could be considered original, in some sense: it isn’t copy-pasted from somewhere else on the internet.

But as it turns out, we’re building an AI content detector at Ahrefs.

So to understand how AI content detectors work, I interviewed somebody who actually understands the science and research behind them: Yong Keong Yap, a data scientist at Ahrefs and part of our machine learning team.

All AI content detectors work in the same basic way: they look for patterns or abnormalities in text that appear slightly different from those in human-written text.

To do that, you need two things: lots of examples of both human-written and LLM-written text to compare, and a mathematical model to use for the analysis.

There are three common approaches in use: statistical detection, neural networks, and watermarking.

Attempts to detect machine-generated writing have been around since the 2000s. Some of these older detection methods still work well today.

Statistical detection methods work by counting particular writing patterns to distinguish between human-written text and machine-generated text, like word frequencies, n-gram frequencies, syntactic structures, and stylistic elements.

If these patterns are very different from those found in human-generated texts, there’s a good chance you’re looking at machine-generated text.

These methods are very lightweight and computationally efficient, but they tend to break when the text is manipulated (using what computer scientists call “adversarial examples”).
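The counting step can be sketched in Python. This is a minimal illustration that assumes a simple regex tokenizer: it lowercases the text, drops punctuation, and does not form bigrams across sentence boundaries. The function names are hypothetical. It reproduces the word and bigram counts for the “The cat sat on the mat” example used in this article. A real statistical detector would then compare such counts against reference distributions built from large samples of human-written and AI-generated text.

```python
import re
from collections import Counter

WORD = re.compile(r"[a-z']+")  # assumed tokenizer: runs of lowercase letters and apostrophes

def word_frequencies(text: str) -> Counter:
    """Count how often each word appears, ignoring case and punctuation."""
    return Counter(WORD.findall(text.lower()))

def bigram_frequencies(text: str) -> Counter:
    """Count adjacent word pairs, without pairing words across sentence boundaries."""
    counts = Counter()
    for sentence in re.split(r"[.!?]+", text):
        words = WORD.findall(sentence.lower())
        counts.update(zip(words, words[1:]))  # each bigram is a (word1, word2) tuple
    return counts

example = "The cat sat on the mat. Then the cat yawned."
print(word_frequencies(example).most_common(2))     # [('the', 3), ('cat', 2)]
print(bigram_frequencies(example)[("the", "cat")])  # 2
```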
Statistical methods can be made more sophisticated by training a learning algorithm on top of these counts (like Naive Bayes, Logistic Regression, or Decision Trees), or by using methods that calculate word probabilities (from a model’s raw output scores, known as logits).

Neural networks are computer systems that loosely mimic how the human brain works. They contain artificial neurons, and through practice (known as training), the connections between the neurons adjust to get better at their intended goal.

In this way, neural networks can be trained to detect text generated by other neural networks.

Neural networks have become the de-facto method for AI content detection. Statistical detection methods require special expertise in the target subject and language to work (what computer scientists call “feature extraction”). Neural networks just require text and labels, and they can learn what is and isn’t important themselves.

Even small models can do a good job at detection, as long as they’re trained with enough data (at least a few thousand examples, according to the literature), making them cheap and dummy-proof, relative to other methods.

LLMs (like ChatGPT) are neural networks, but without additional fine-tuning, they generally aren’t very good at identifying AI-generated text, even if the LLM itself generated it. Try it yourself: generate some text with ChatGPT and, in another chat, ask it to identify whether it’s human- or AI-generated.

Here’s o1 failing to recognise its own output:

Watermarking is another approach to AI content detection. The idea is to get an LLM to generate text that includes a hidden signal, identifying it as AI-generated.

Think of watermarks like UV ink on paper money, which makes it easy to distinguish authentic notes from counterfeits.
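To make the “hidden signal” idea concrete, here is a toy sketch of one published family of techniques: the “green list” watermark described by Kirchenbauer et al. (2023). It is not how any particular vendor watermarks its models, and every detail is an assumption for illustration. That includes the 20-word vocabulary, the SHA-256 seeding, the 50% green fraction (`GAMMA`), and the rule of always picking a green token. The secret key plays the role of the “UV light”, because only someone holding it can recompute which tokens were green.

```python
import hashlib
import math

GAMMA = 0.5  # assumed fraction of candidate tokens that count as "green" at each step
VOCAB = [f"w{i}" for i in range(20)]  # hypothetical toy vocabulary standing in for an LLM's

def is_green(prev_token: str, token: str, key: str) -> bool:
    """Pseudo-randomly place `token` on the green list, seeded by the previous token and a secret key."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] < 256 * GAMMA

def generate_watermarked(length: int, key: str) -> list[str]:
    """Toy stand-in for a watermarked LLM: at each step, pick a green candidate token if one exists."""
    tokens = ["<start>"]
    for step in range(length):
        shift = step % len(VOCAB)
        candidates = VOCAB[shift:] + VOCAB[:shift]  # rotate so the output varies
        green = [c for c in candidates if is_green(tokens[-1], c, key)]
        tokens.append(green[0] if green else candidates[0])
    return tokens

def watermark_z_score(tokens: list[str], key: str) -> float:
    """How far the green-token count sits above what unwatermarked text would produce by chance."""
    n = len(tokens) - 1  # number of (previous, current) token pairs scored
    if n <= 0:
        return 0.0
    greens = sum(is_green(p, t, key) for p, t in zip(tokens, tokens[1:]))
    return (greens - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))

text = generate_watermarked(50, key="model-owner-key")
print(round(watermark_z_score(text, key="model-owner-key"), 2))  # score with the owner's key
print(round(watermark_z_score(text, key="wrong-key"), 2))        # score with a wrong key
```

With the owner’s key, the z-score should come out large: about 7 for 50 tokens if every step finds a green candidate. With a wrong key, the green tokens look like chance, so the score should stay near zero. Published schemes usually soften the rule by adding a small bias to green tokens’ logits instead of forcing them. That trades some detection strength for better text quality.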
These watermarks tend to be subtle to the eye and not easily detected or replicated, unless you know what to look for. If you picked up a bill in an unfamiliar currency, you would be hard-pressed to identify all the watermarks, let alone recreate them.

Based on the literature cited by Junchao Wu, there are three ways to watermark AI-generated text.

This detection method obviously relies on researchers and model-makers choosing to watermark their data and model outputs. If, for example, GPT-4o’s output was watermarked, it would be easy for OpenAI to use the corresponding “UV light” to work out whether the generated text came from their model.

But there might be broader implications too. One very recent paper suggests that watermarking can make it easier for neural network detection methods to work. If a model is trained on even a small amount of watermarked text, it becomes “radioactive” and its output becomes easier to detect as machine-generated.

In the literature review, many methods managed detection accuracy of around 80%, or greater in some cases.

That sounds pretty reliable, but there are three big issues that mean this accuracy level isn’t realistic in many real-life situations.

Most AI detectors are trained and tested on a particular type of writing, like news articles or social media content.

That means that if you want to test a marketing blog post, and you use an AI detector trained on marketing content, then it’s likely to be fairly accurate. But if the detector was trained on news content, or on creative fiction, the results would be far less reliable.
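The narrow-dataset problem shows up even in the simplest learned detector. Below is a minimal multinomial Naive Bayes classifier over word counts, one of the learning algorithms mentioned earlier. It is written from scratch so it runs without dependencies. The training sentences and labels are made up for illustration and come from a single domain (marketing copy). Skipping words never seen in training is a simplifying assumption.

```python
import math
from collections import Counter

class NaiveBayesDetector:
    """Multinomial Naive Bayes over word counts, with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesDetector":
        self.classes = sorted(set(labels))
        self.log_priors = {c: math.log(labels.count(c) / len(labels)) for c in self.classes}
        self.counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.counts[label].update(text.lower().split())
        self.vocab = set().union(*self.counts.values())
        self.totals = {c: sum(self.counts[c].values()) for c in self.classes}
        return self

    def log_scores(self, text: str) -> dict[str, float]:
        """Log prior plus summed log likelihoods of known words, per class."""
        scores = {}
        for c in self.classes:
            score = self.log_priors[c]
            for word in text.lower().split():
                if word in self.vocab:  # words never seen in training are ignored
                    score += math.log((self.counts[c][word] + 1) / (self.totals[c] + len(self.vocab)))
            scores[c] = score
        return scores

    def predict(self, text: str) -> str:
        scores = self.log_scores(text)
        return max(scores, key=scores.get)

# Made-up, illustrative training data from a single domain (marketing copy).
train_texts = [
    "honestly this tool saved me hours lol",
    "we tried it and it kinda worked",
    "unlock seamless growth and elevate your marketing strategy",
    "leverage this robust tool for digital growth",
]
train_labels = ["human", "human", "ai", "ai"]
detector = NaiveBayesDetector().fit(train_texts, train_labels)

print(detector.predict("elevate your robust strategy"))  # ai
# A news-style sentence shares no words with the training data:
print(detector.log_scores("officials confirmed tuesday that rainfall exceeded records"))
```

The news-style sentence gets identical scores for both classes. The detector falls back on its class priors because it has no evidence either way. Real detectors degrade more gradually than this toy, but the underlying issue is similar: signals learned from one kind of writing may not carry over to another.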
Yong Keong Yap is Singaporean, and shared the example of chatting with ChatGPT in Singlish, a Singaporean variety of English that incorporates elements of other languages, like Malay and Chinese:

When testing Singlish text on a detection model trained primarily on news articles, it fails, despite performing well for other types of English text:

Almost all of the AI detection benchmarks and datasets are focused on sequence classification: that is, detecting whether or not an entire body of text is machine-generated.

But many real-life uses for AI text involve a mixture of AI-generated and human-written text (say, using an AI generator to help write or edit a blog post that is partially human-written).

This type of partial detection (known as span classification or token classification) is a harder problem to solve and has received less attention in the open literature. Current AI detection models do not handle this setting well.

Humanizing tools work by disrupting the patterns that AI detectors look for. LLMs, in general, write fluently and politely. If you intentionally add typos, grammatical errors, or even hateful content to generated text, you can usually reduce the accuracy of AI detectors.

These examples are simple “adversarial manipulations” designed to break AI detectors, and they’re usually obvious even to the human eye. But sophisticated humanizers can go further, using another LLM that is finetuned specifically in a loop with a known AI detector. Their goal is to maintain high-quality text output while disrupting the predictions of the detector.
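The loop described above can be illustrated with a much simpler black-box attack. This sketch does not fine-tune an LLM the way sophisticated humanizers do. Instead, it greedily swaps single words, keeps whichever swap lowers the detector’s score the most, and stops when no swap helps. The detector (`toy_detector`), its list of “AI-sounding” words, and the rewrite table are all hypothetical.

```python
from typing import Callable

# Hypothetical toy detector: the share of words drawn from a small list of "AI-sounding" terms.
AI_MARKERS = {"delve", "leverage", "seamless", "robust", "elevate", "landscape"}

def toy_detector(text: str) -> float:
    words = text.lower().split()
    return sum(w in AI_MARKERS for w in words) / max(len(words), 1)

# Hypothetical rewrite options a humanizer might try for each word.
REWRITES = {
    "delve": ["dig"],
    "leverage": ["use"],
    "seamless": ["smooth"],
    "robust": ["solid", "sturdy"],
    "elevate": ["improve"],
}

def humanize(text: str, detector: Callable[[str], float], max_rounds: int = 10) -> str:
    """Greedy attack: apply the single-word rewrite that lowers the detector's score most, repeatedly."""
    words = text.split()
    best = detector(" ".join(words))
    for _ in range(max_rounds):
        candidates = [
            words[:i] + [alt] + words[i + 1:]
            for i, w in enumerate(words)
            for alt in REWRITES.get(w.lower(), [])
        ]
        if not candidates:
            break
        choice = min(candidates, key=lambda c: detector(" ".join(c)))
        score = detector(" ".join(choice))
        if score >= best:
            break  # no rewrite lowers the score any further
        words, best = choice, score
    return " ".join(words)

original = "We leverage robust tools to elevate results"
rewritten = humanize(original, toy_detector)
print(rewritten)                                       # We use solid tools to improve results
print(toy_detector(original), toy_detector(rewritten))
```

The attack works only because it can query the exact detector it is trying to fool. A different detector may key on other patterns, and these swaps may then change nothing. This is why humanizers tuned against one detector can fail against new, unknown ones.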
These can make AI-generated text harder to detect, as long as the humanizing tool has access to the detectors it wants to break (in order to train specifically to defeat them). Humanizers may fail spectacularly against new, unknown detectors.

To summarize, AI content detectors can be very accurate in the right circumstances. To get useful results from them, it’s important to follow a few guiding principles.

Since the detonation of the first nuclear bombs in the 1940s, every single piece of steel smelted anywhere in the world has been contaminated by nuclear fallout.

Steel manufactured before the nuclear era is known as “low-background steel”, and it’s pretty important if you’re building a Geiger counter or a particle detector. But this contamination-free steel is becoming rarer and rarer. Today’s main sources are old shipwrecks. Soon, it may be all gone.

This analogy is relevant for AI content detection. Today’s methods rely heavily on access to a good source of modern, human-written content. But this source is becoming smaller by the day.

As AI is embedded into social media, word processors, and email inboxes, and as new models are trained on data that includes AI-generated text, it’s easy to imagine a world where most content is “tainted” with AI-generated material.

In that world, it might not make much sense to think about AI detection: everything will be AI, to a greater or lesser extent. But for now, you can at least use AI content detectors equipped with the knowledge of their strengths and weaknesses.

1. Statistical detection (old school but still effective)

| Feature | Example: “The cat sat on the mat. Then the cat yawned.” |
|---|---|
| Word frequencies | the: 3, cat: 2, sat: 1, on: 1, mat: 1, then: 1, yawned: 1 |
| N-gram frequencies (bigrams) | “the cat”: 2, “cat sat”: 1, “sat on”: 1, “on the”: 1, “the mat”: 1, “then the”: 1, “cat yawned”: 1 |
| Syntactic structures | Contains S-V (subject-verb) pairs such as “the cat sat” and “the cat yawned.” |
| Stylistic notes | Third-person viewpoint; neutral tone. |

2. Neural networks (trendy deep learning methods)

3. Watermarking (hidden signals in LLM output)

Most detection models are trained on very narrow datasets

They struggle with partial detection

They’re susceptible to humanizing tools

Final thoughts