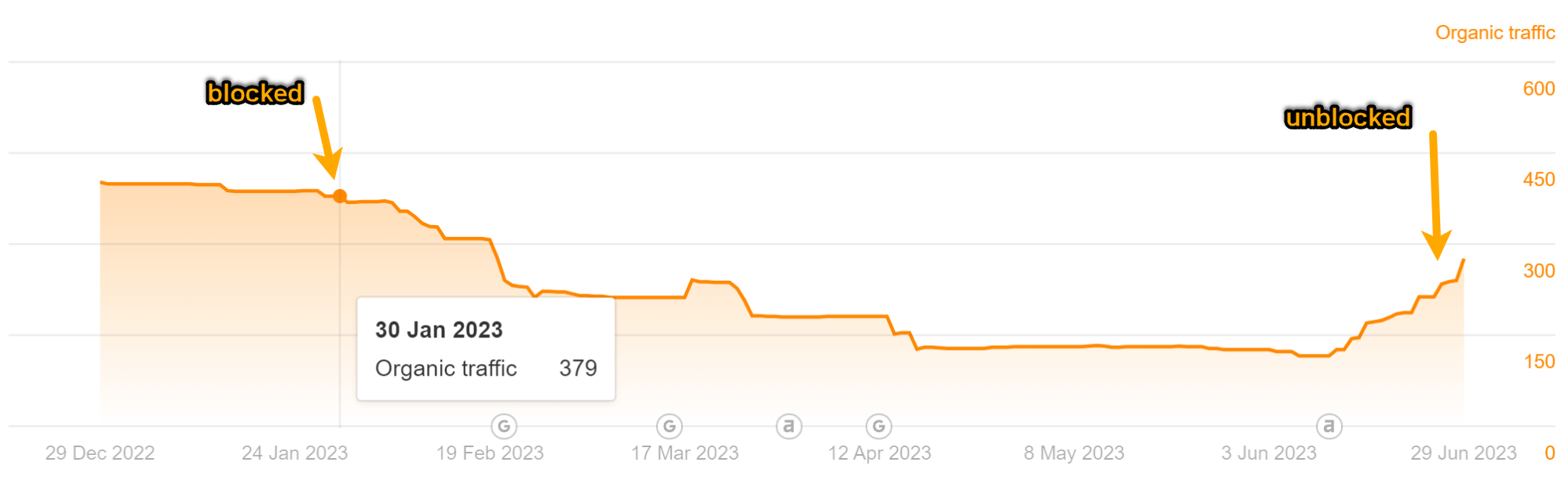

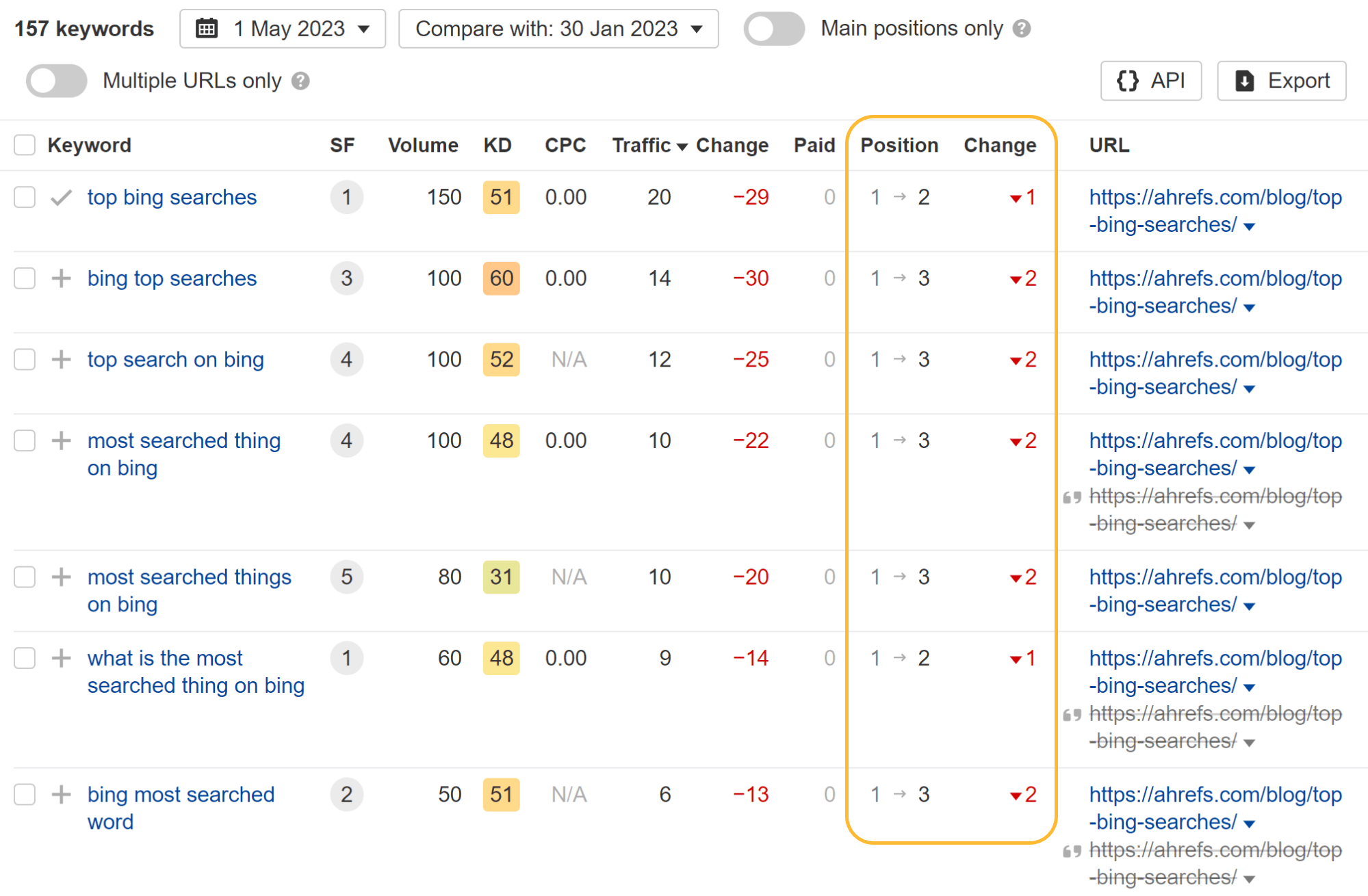

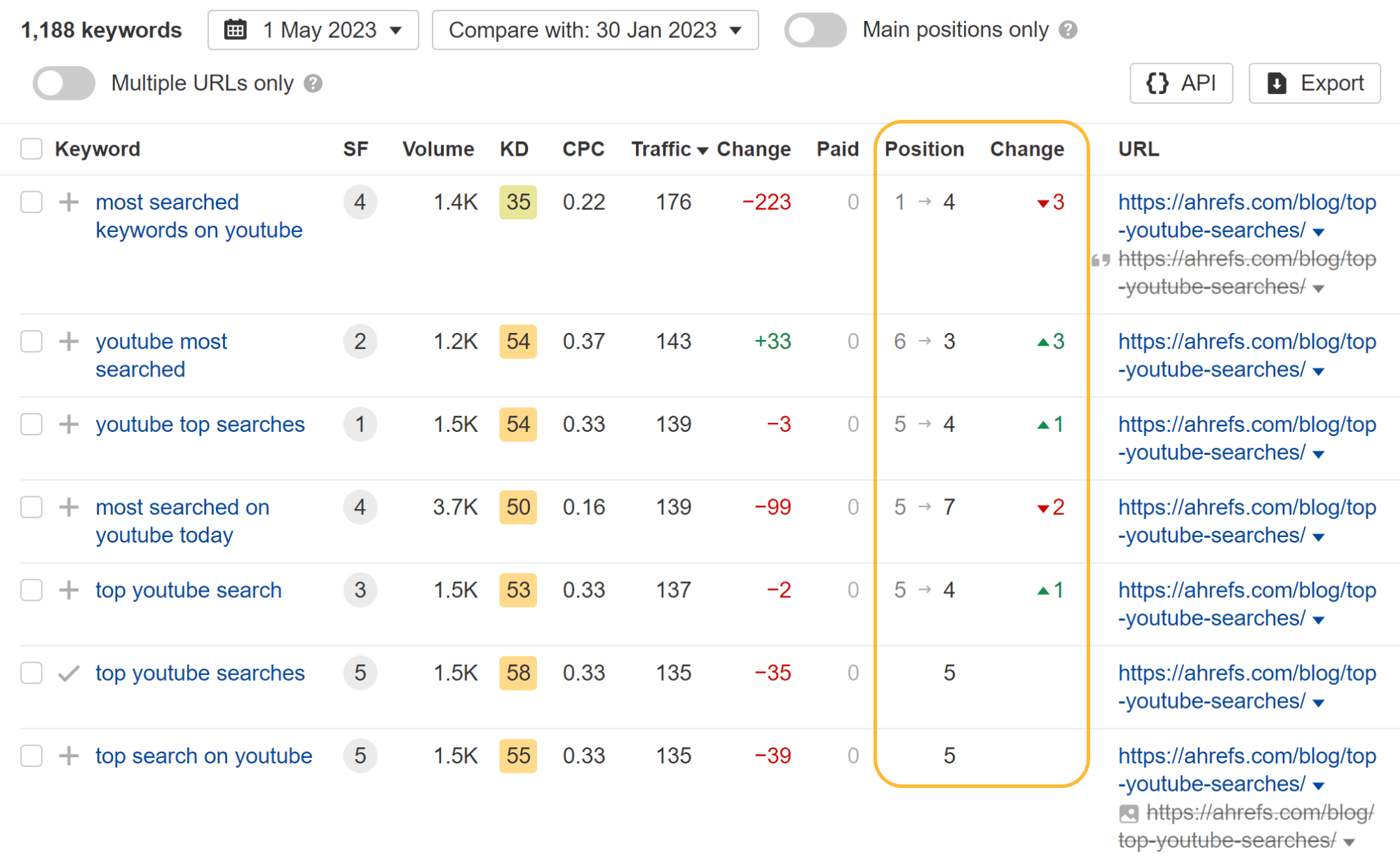

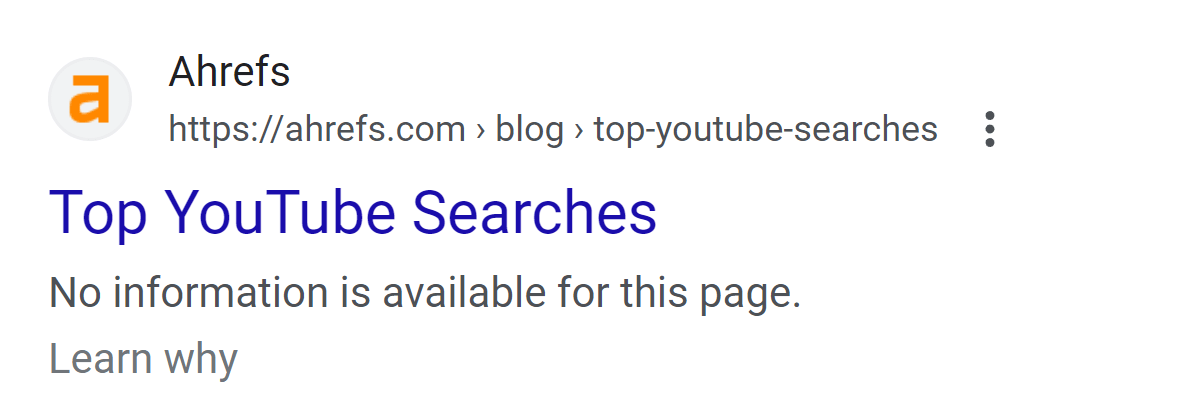

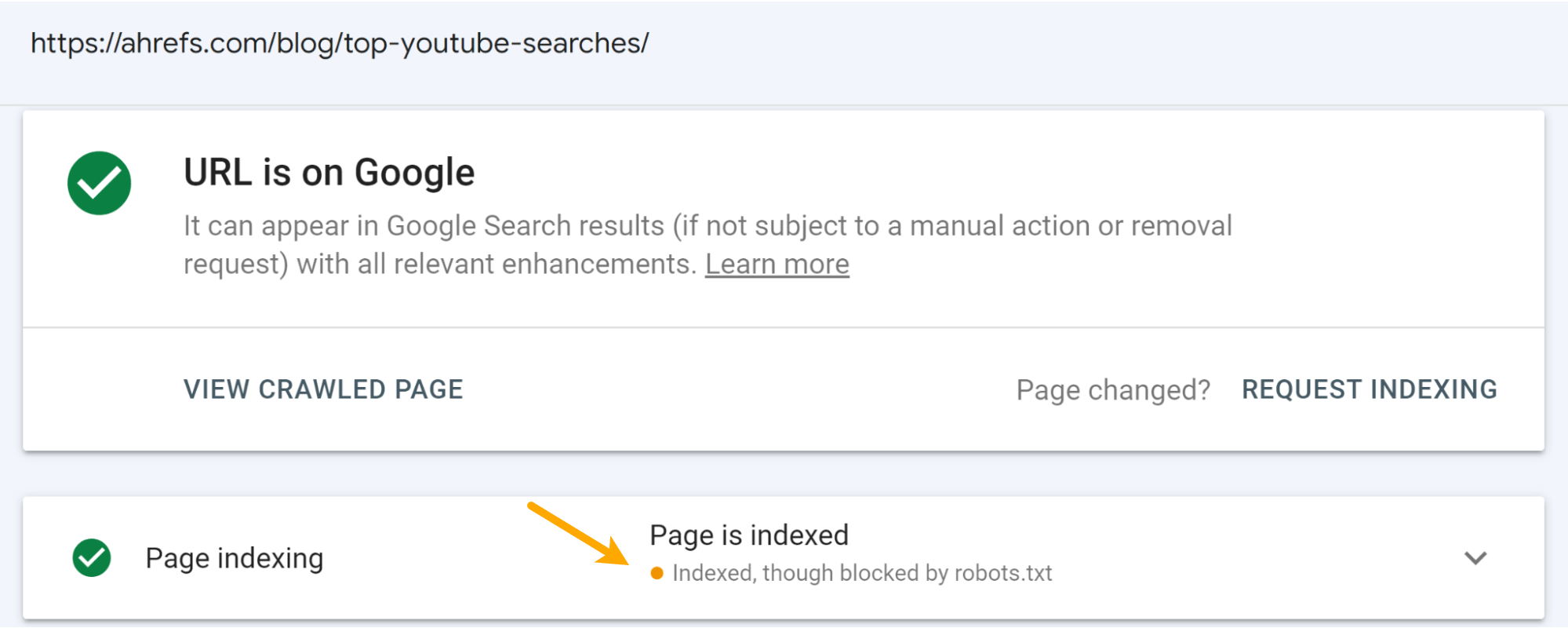

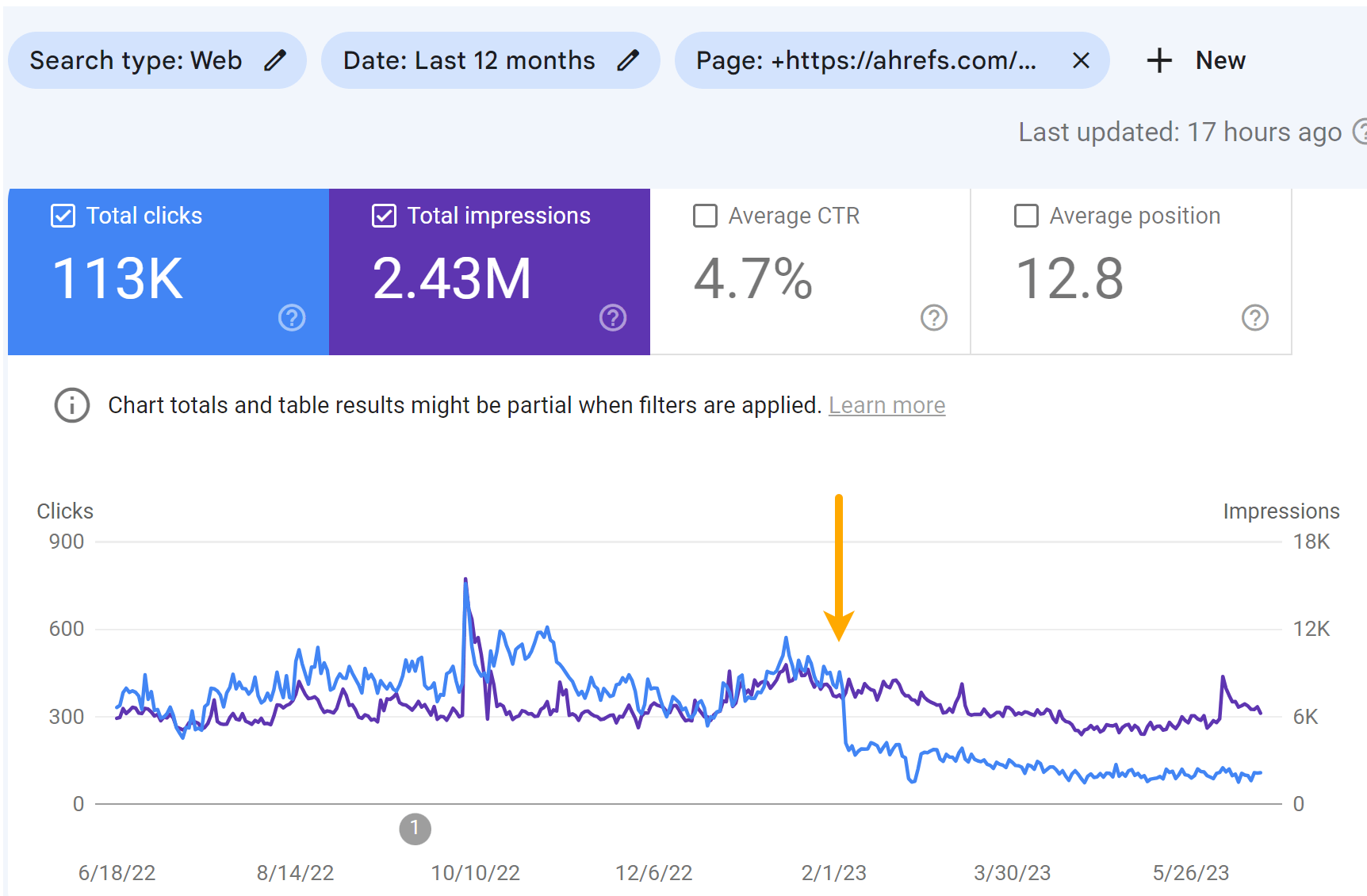

I blocked 2 of our ranking pages using robots.txt. We lost a position here or there and all of the featured snippets for the pages. I expected a lot more impact, but the world didn’t end.

Warning: I don’t recommend doing this, and it’s entirely possible that your results may be different from ours.

I was trying to see the impact on rankings and traffic that the removal of content would have. My theory was that if we blocked the pages from being crawled, Google would have to rely on the link signals alone to rank the content.

However, I don’t think what I saw was actually the impact of removing the content. Maybe it is, but I can’t say that with 100% certainty, as the impact feels too small. I’ll be running another test to confirm this. My new plan is to delete the content from the page and see what happens.

My working theory is that Google may still be using the content it used to see on the page to rank it. Google Search Advocate John Mueller has confirmed this behavior in the past: “Not really. If we know a text used to be on a page, we might continue to show the page even if the text has been removed. For example, if a company changes its name, you’d still want to find the website if you searched for the old name.”

So far, the test has been running for about 5 months. At this point, it doesn’t seem like Google will stop ranking the page. I suspect that, after a while, it will likely stop trusting that the content that was on the page is still there, but I haven’t seen evidence of that happening.

Keep reading to see the test setup and impact.
The main takeaway is that accidentally blocking pages (that Google already ranks) from being crawled using robots.txt most likely isn’t going to have much impact on your rankings, and they will likely still show in the search results.

I chose the same pages as used in the “impact of link” study, except for the article on SEO pricing, because Joshua Hardwick had just updated it. I had seen the impact of removing the links to these articles and wanted to test the impact of removing the content. As I said in the intro, I’m not sure that’s actually what happened.

I blocked these 2 pages on January 30, 2023: the “Top YouTube Searches” article and the “Top Bing Searches” article.

These lines were added to our robots.txt file:

As you can see in the charts, both pages lost some traffic. But it didn’t result in as much change to our traffic estimate as I was expecting.

Looking at the individual keywords, you can see that some keywords lost a position or 2 and others actually gained ranking positions while the page was blocked from crawling.

The most interesting thing I noticed is that they lost all featured snippets. I guess that having the pages blocked from crawling made them ineligible for featured snippets. When I later removed the block, the article on Bing searches quickly regained some snippets.

The most noticeable impact to the pages is on the SERP. The pages lost their custom titles and displayed a message saying that no information was available instead of the meta description. This was expected. It happens when a page is blocked by robots.txt. Additionally, you’ll see the “Indexed, though blocked by robots.txt” status in Google Search Console if you inspect the URL.
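If you want to check whether your own robots.txt is accidentally blocking pages that already rank, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The `Disallow` paths and `example.com` URLs are hypothetical placeholders, not the exact lines or URLs from this test, and `robotparser` only approximates how Googlebot reads robots.txt: it matches plain path prefixes (no `*` or `$` wildcards) and applies the first matching rule rather than the most specific one.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules blocking two blog posts from being crawled.
# These paths are illustrative placeholders, not the real URLs from the test.
ROBOTS_TXT = """\
User-agent: *
Disallow: /blog/top-youtube-searches/
Disallow: /blog/top-bing-searches/
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt rules disallow for user_agent."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network fetch is needed.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    pages = [
        "https://example.com/blog/top-youtube-searches/",
        "https://example.com/blog/top-bing-searches/",
        "https://example.com/blog/seo-pricing/",
    ]
    for url in blocked_urls(ROBOTS_TXT, pages):
        print("Blocked from crawling:", url)
```

Running it prints the two disallowed URLs and leaves the third page crawlable. Keep in mind that a `Disallow` rule only stops crawling; as the “Indexed, though blocked by robots.txt” status shows, a blocked URL can stay in the index.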
I believe that the message on the SERPs hurt the clicks to the pages more than the ranking drops did. You can see some drop in impressions, but a larger drop in the number of clicks for the articles.

I don’t think any of you will be surprised by my commentary on this. Don’t block pages you want indexed. It hurts. Not as bad as you might think it does, but it still hurts.

Traffic for the “Top YouTube Searches” article.

Traffic for the “Top Bing Searches” article.

Organic keywords for the “Top Bing Searches” article.

Organic keywords for the “Top YouTube Searches” article.
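One way to check whether the SERP message, rather than lost rankings, cost you clicks is to compare the relative change in impressions with the relative change in clicks for the same page, for example using totals from a Search Console performance export. Below is a minimal sketch; the `PeriodTotals` type and `compare_periods` helper are hypothetical names, and the numbers in the example run are illustrative only, not data from this test.

```python
from dataclasses import dataclass


@dataclass
class PeriodTotals:
    """Hypothetical container for one period's Search Console totals for a page."""
    clicks: int
    impressions: int

    @property
    def ctr(self) -> float:
        # Click-through rate: clicks divided by impressions (0.0 if no impressions).
        return self.clicks / self.impressions if self.impressions else 0.0


def pct_change(before: float, after: float) -> float:
    """Relative change from before to after, as a percentage."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100


def compare_periods(before: PeriodTotals, after: PeriodTotals) -> dict[str, float]:
    """Compare clicks, impressions, and CTR between two periods.

    If clicks fall much more than impressions, CTR dropped, which points at
    how the result looks on the SERP rather than at ranking losses alone.
    """
    return {
        "impressions_change_pct": pct_change(before.impressions, after.impressions),
        "clicks_change_pct": pct_change(before.clicks, after.clicks),
        "ctr_before": before.ctr,
        "ctr_after": after.ctr,
    }


if __name__ == "__main__":
    # Illustrative numbers only, not results from the test.
    report = compare_periods(
        PeriodTotals(clicks=1000, impressions=50000),
        PeriodTotals(clicks=600, impressions=45000),
    )
    for key, value in report.items():
        print(f"{key}: {value:.4f}")
```

In this made-up example, impressions fall by 10% while clicks fall by 40%, so CTR drops from 2% to about 1.33%. That is the pattern you would expect if the “no information is available” snippet, not rankings alone, was driving the loss.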

Final thoughts