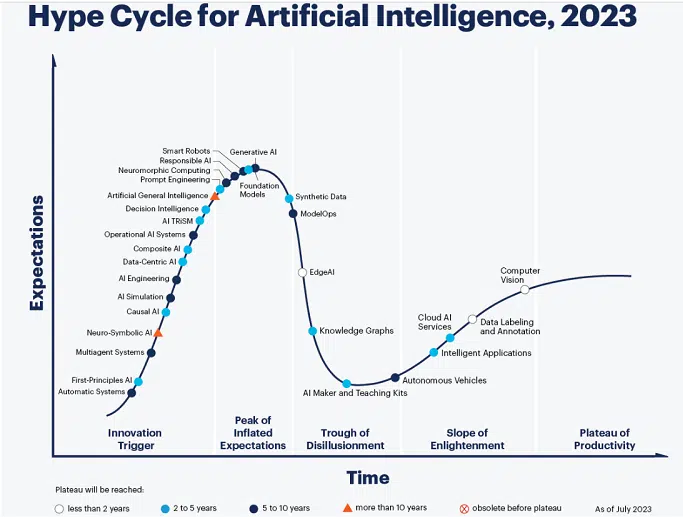

It’s no surprise to anyone that generative AI and the foundation models that power it currently sit at the very summit of what Gartner calls “the peak of inflated expectations” in the latest iteration of the “Gartner Hype Cycle for Artificial Intelligence.” That means they’re teetering on the precipice that could plunge them into the “trough of disillusionment.”

We spoke with Afraz Jaffri, director analyst at Gartner with a focus on analytics, data science and AI, about how we should interpret the situation. The interview has been edited for length and clarity.

Image courtesy of Gartner.

Q: You’re projecting that it will take 2 to 5 years for foundation models, and 5 to 10 years for generative AI, to reach the “plateau of productivity.” What is that based on?

A: This is about where we can see real adoption, not just among a select number of enterprises, which will probably happen a lot quicker, but among organizations at every level, predominantly in the form of packaged applications. Every piece of software will have some kind of generative AI functionality in it, but the real productivity gains from those features will take longer to be understood. It’s a race for everyone to ship a generative AI product or feature within their software; in all of those cases, the benefits will take longer to come to fruition and to be measured as well.

Foundation models cover a broad spectrum: not just the large language models but also image and video models. That’s why the time to plateau will be longer. It’s a bucket of all kinds of models.

Q: It’s possible to imagine things that could be very disruptive to generative AI. One is regulation: there are real concerns, especially in Europe, about large language models scraping personal data. The other relates to copyright. Have you taken those kinds of potential disruptions into account here?

A: Yes, they are part of the thinking. The first issue is actually the trust aspect. Regardless of external regulations, there’s still a fundamental sense that it’s very hard to control the models’ outputs and to guarantee the outputs are actually correct. That’s a big obstacle. As you mentioned, there’s also the uncertainty around regulations. If the models come under significant regulation in Europe, they might not even be available; we have already seen ChatGPT removed there for a time. If the big enterprises decide that it’s too much trouble to comply, they may simply withdraw their services from that region.

There’s also the legal side. These models are based, as you said, on data that includes copyrighted material scraped from the web. If the providers of that data start to ask for appropriate compensation, that will have an impact on the future level of use of these models as well. Then there’s the security side: how secure can you make these models against attacks? There are definitely some headwinds here to navigate.

Q: We hear a lot about the “human-in-the-loop.” Before releasing anything created by generative AI to an audience, you need to have human review and approval. But one of the benefits of genAI is the speed and scale of its creativity. How can humans keep up?

A: The speed and the scale are there to be used by humans doing the things they need to do. They’re there to help people who, say, need to go through 10 documents to get an answer to something; now they can do it in one minute. Because of the trust issue, those are the most valuable types of tasks to use a language model for.

Q: If responsible AI is 5 to 10 years out from the plateau, it looks like you’re predicting a bumpy ride.

A: The regulatory landscape and other systems are still unknown, and even when they do become formalized and known, there will be different regulations for different geographies. The innate nature of these models is that they do have a tendency to not be safe. Learning to control that is going to take time. How do you check that a model is going to be safe? How do you audit a model for compliance? For security? Best practices are hard to come by; every organization is taking its own approach. Forget about generative AI: other AI models, ones that organizations have been using for some time, are still making mistakes and still exhibiting biases.

Q: How should people prepare for the imminent trough of disillusionment?

A: Organizations will follow different trajectories in their experience of generative AI, so it doesn’t necessarily mean an organization needs to fall into the trough. It generally happens when expectations are not managed. Start out by looking at some targeted use cases and targeted pieces of implementation, with good metrics for success, and invest in data management and organization, good governance and good policies. If you combine all that with a realistic narrative about what the models can do, then you’ve controlled the hype and you’re less likely to fall into the trough.

Q: Would you say the AI hype cycle is moving faster than others you’ve looked at?

A: The AI hype cycle does tend to skew towards innovations that move across the curve more quickly, and they also tend to be more impactful in what they can do. At the moment, AI is front and center for funding initiatives and for VCs. It’s a major focal area in the research space as well. A lot of these things come out of academia, which is very active in this space.

Q: Finally, AGI, or artificial general intelligence (AI that replicates human intelligence). You have that as coming in 10 years or more. Are you hedging your bets because it might not be possible at all?

A: Yes. We do have a marker, which is “obsolete before plateau.” There is an argument that it’s never actually going to happen, but we’re saying it’s more than 10 years away because there are so many different interpretations of what AGI actually is. Lots of respected researchers are saying we’re on the path that will get us to AGI, but many more breakthroughs and innovations are needed to see what that path actually looks like. We think it’s further away than many people believe.
