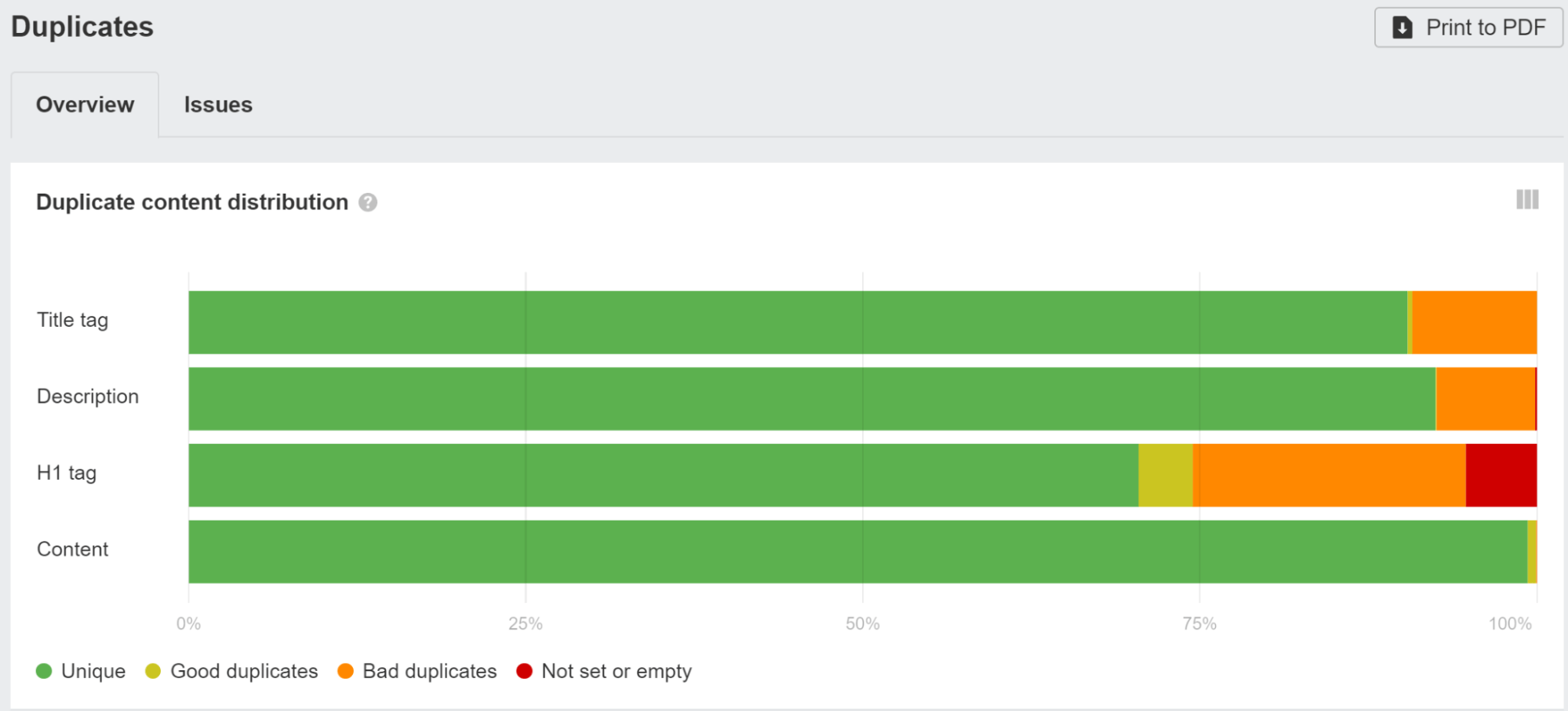

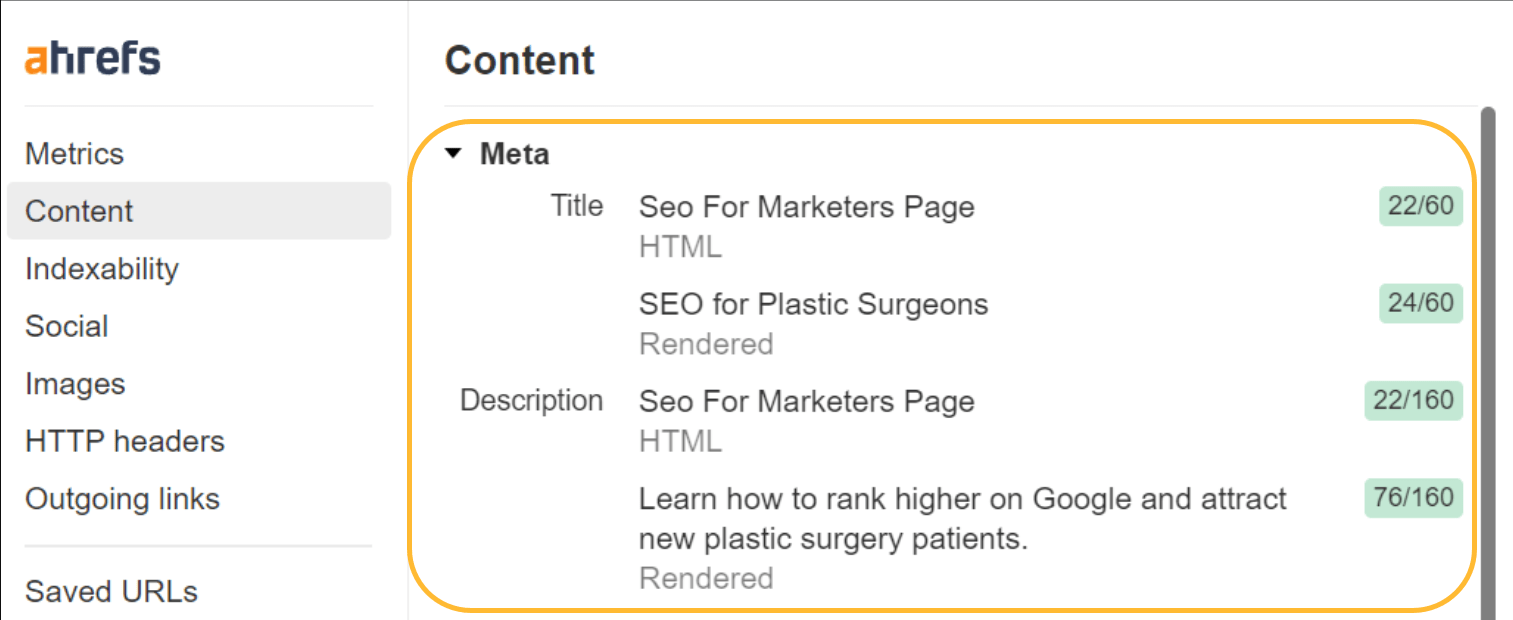

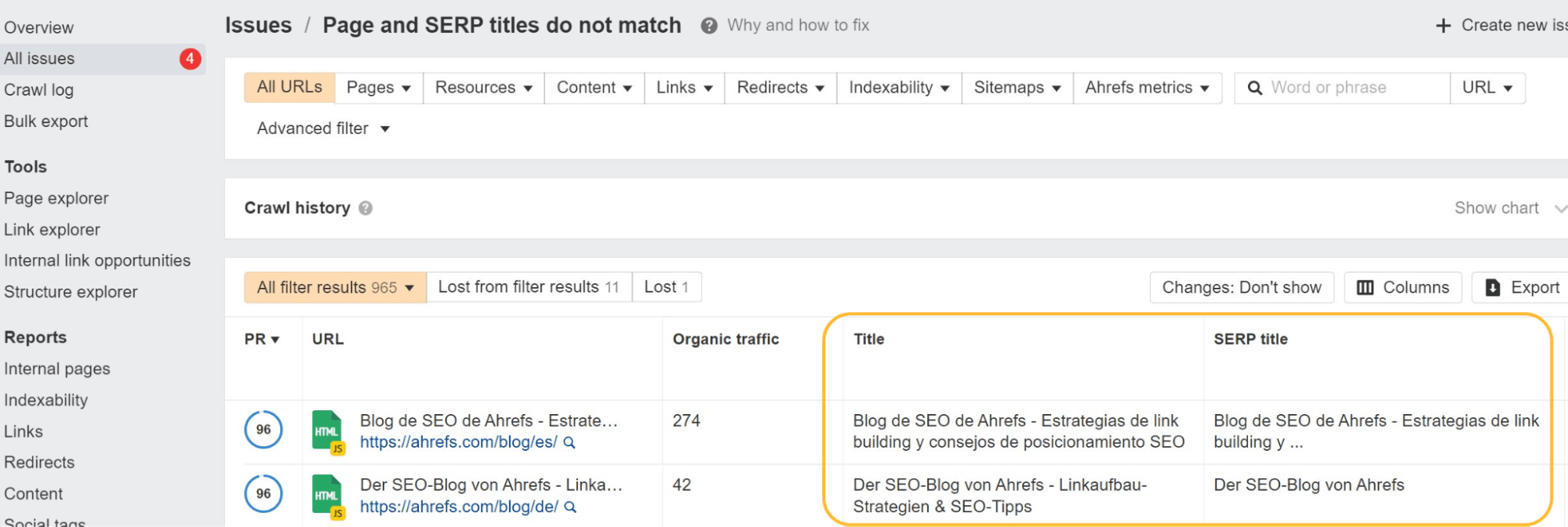

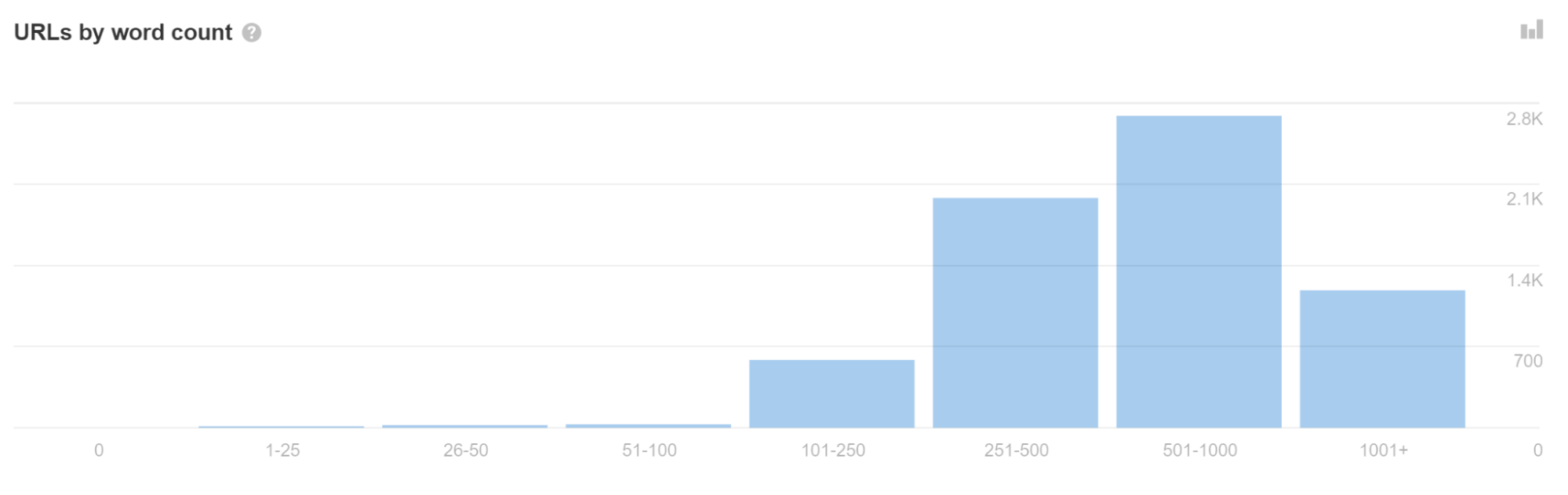

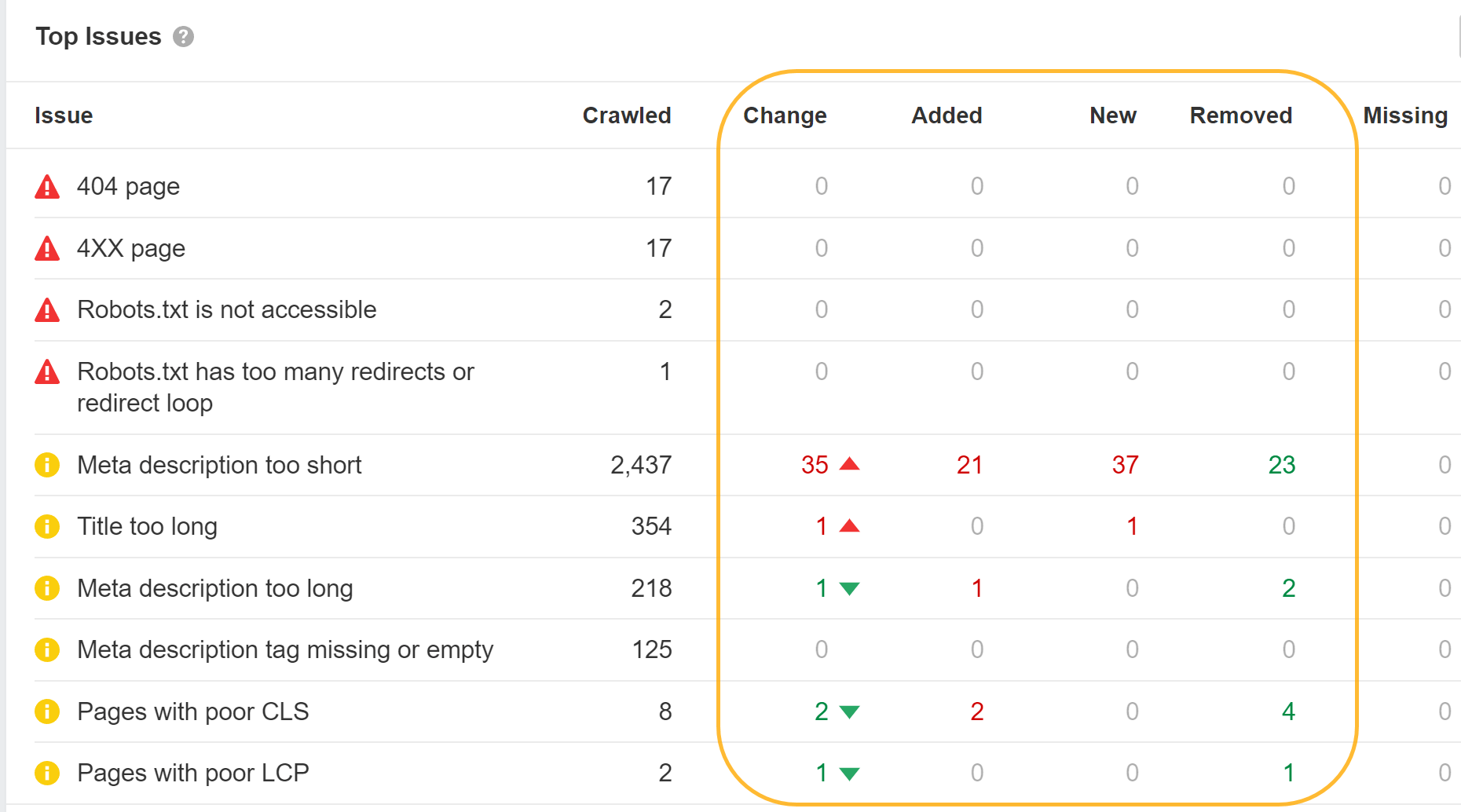

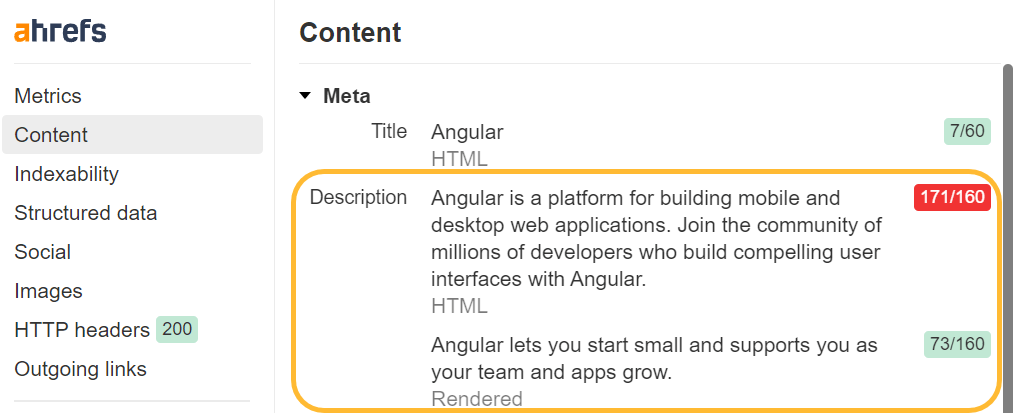

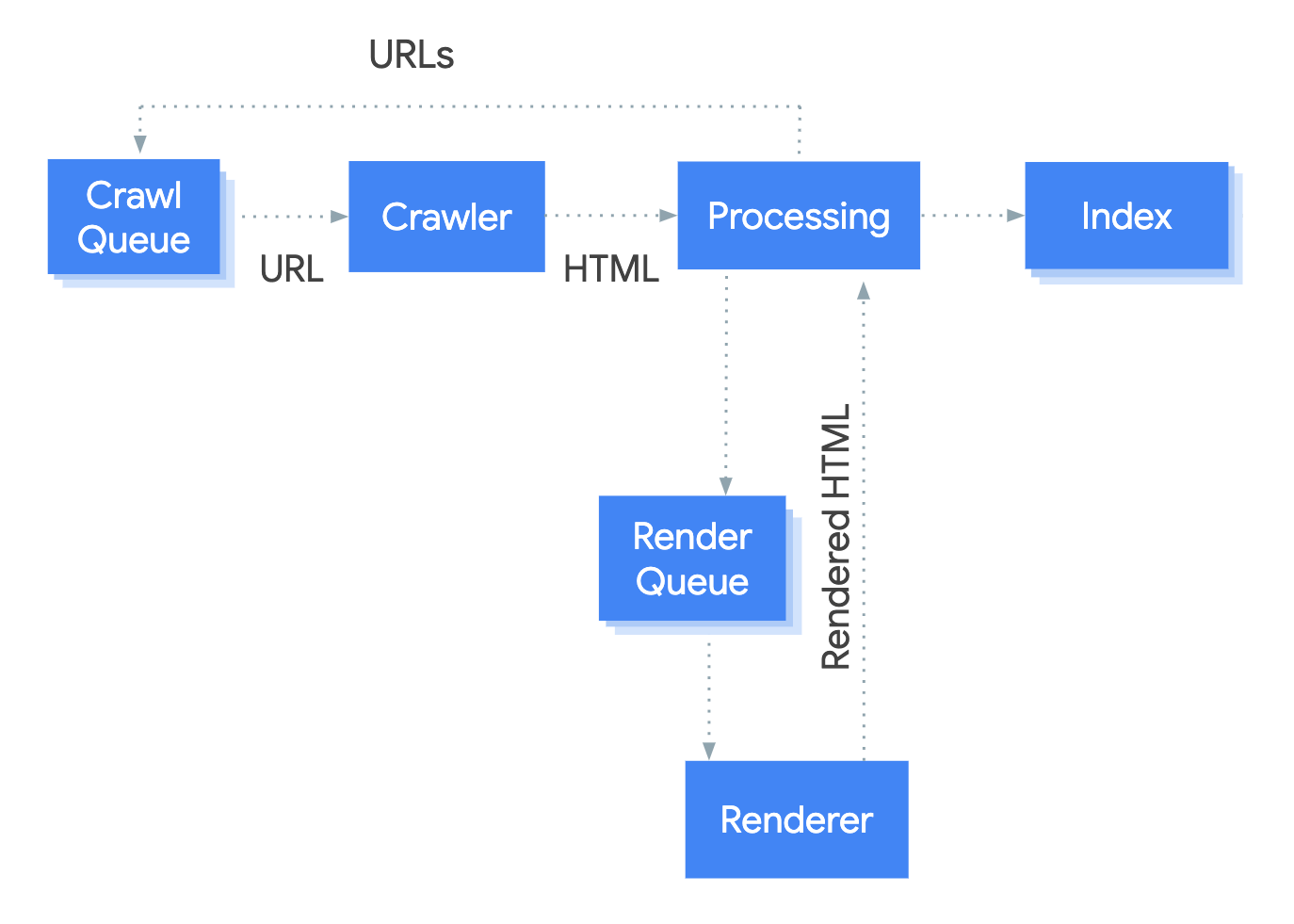

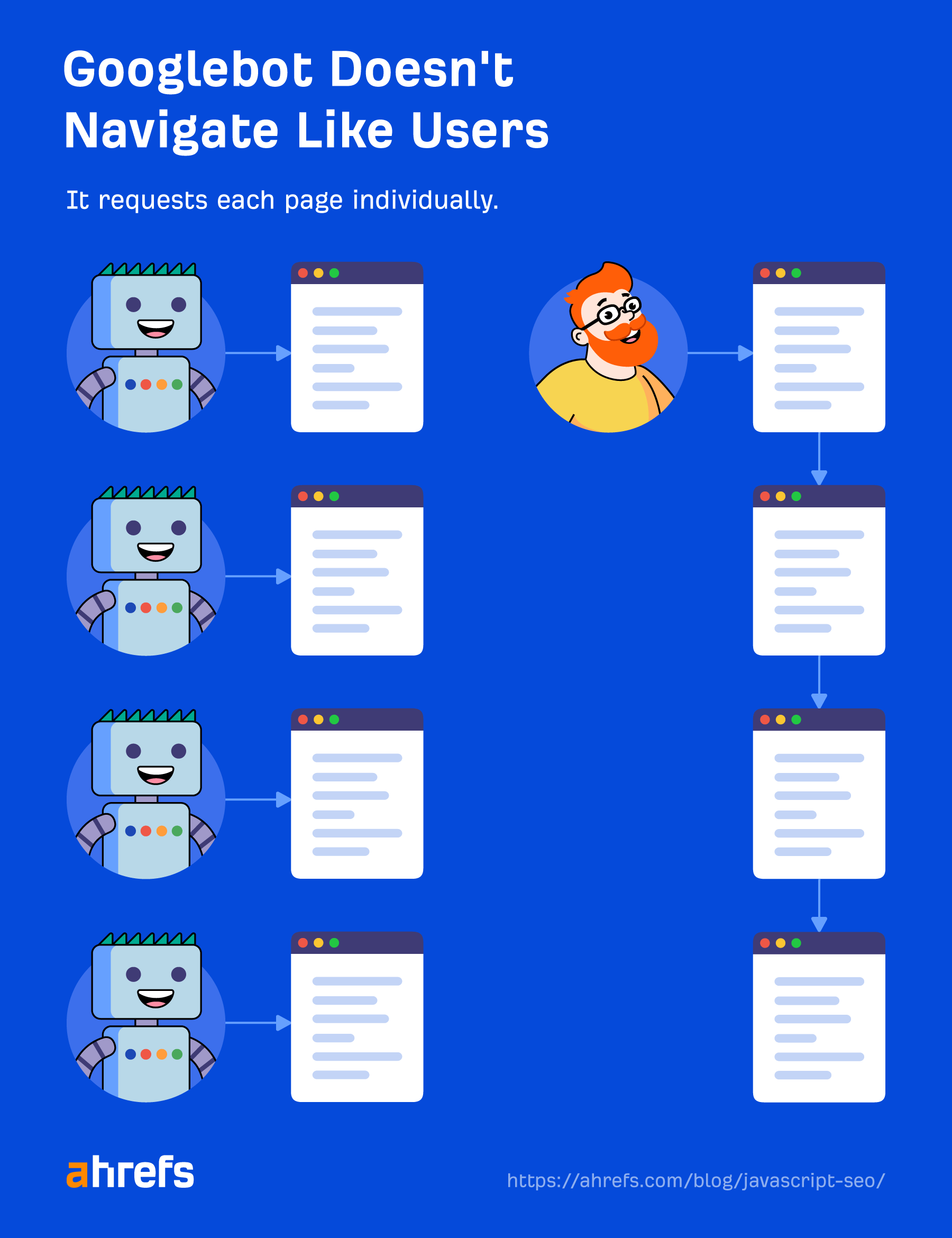

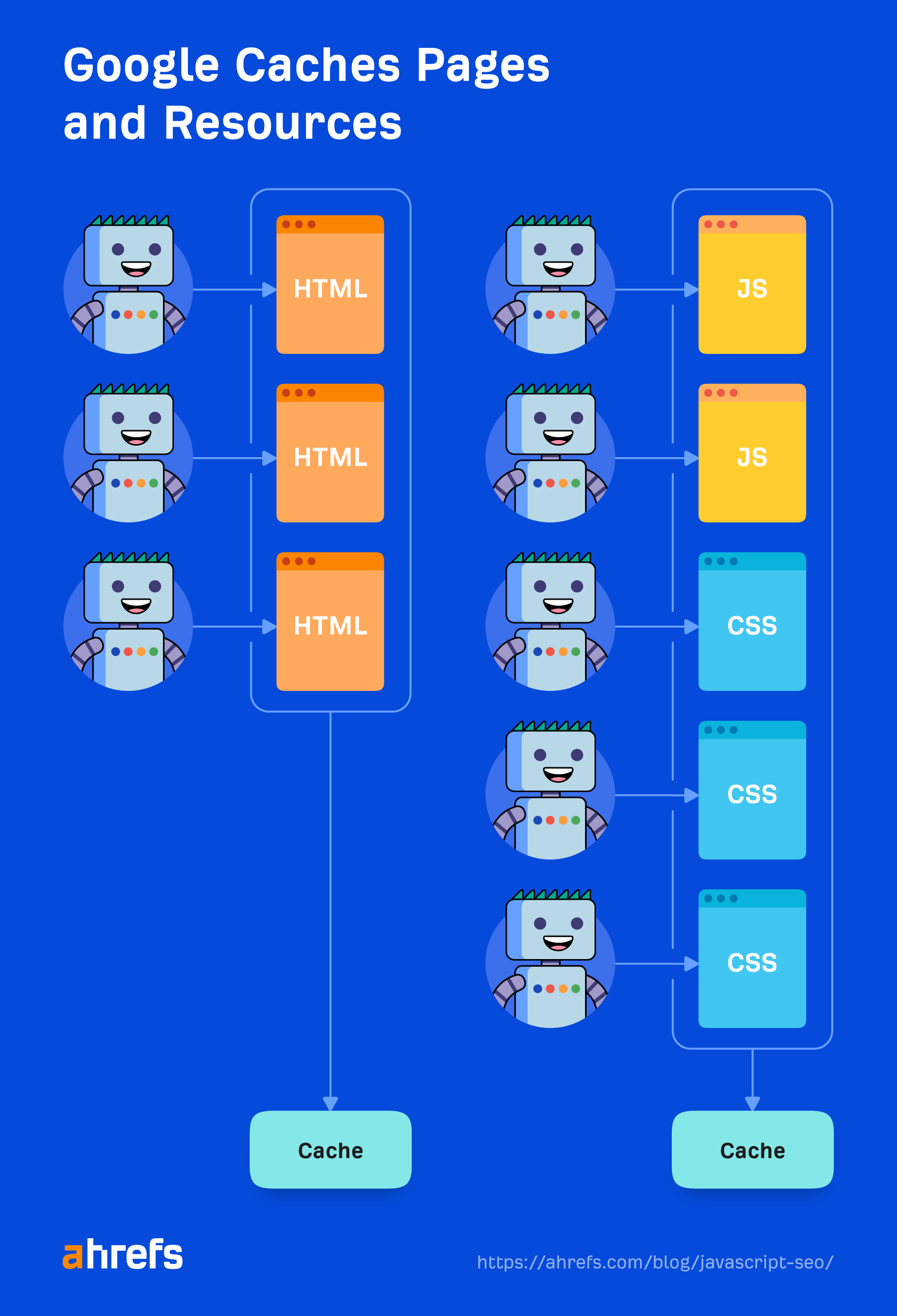

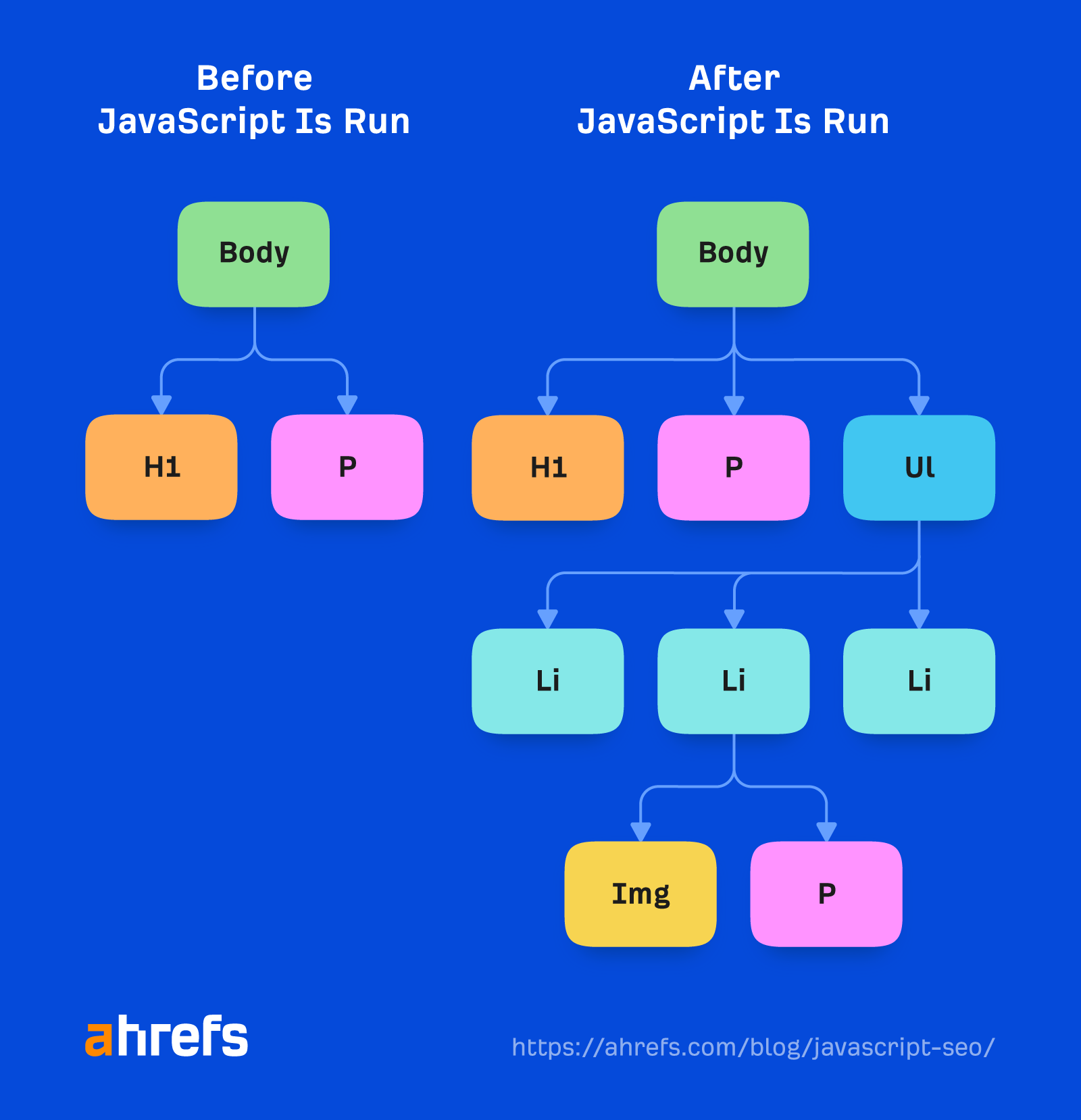

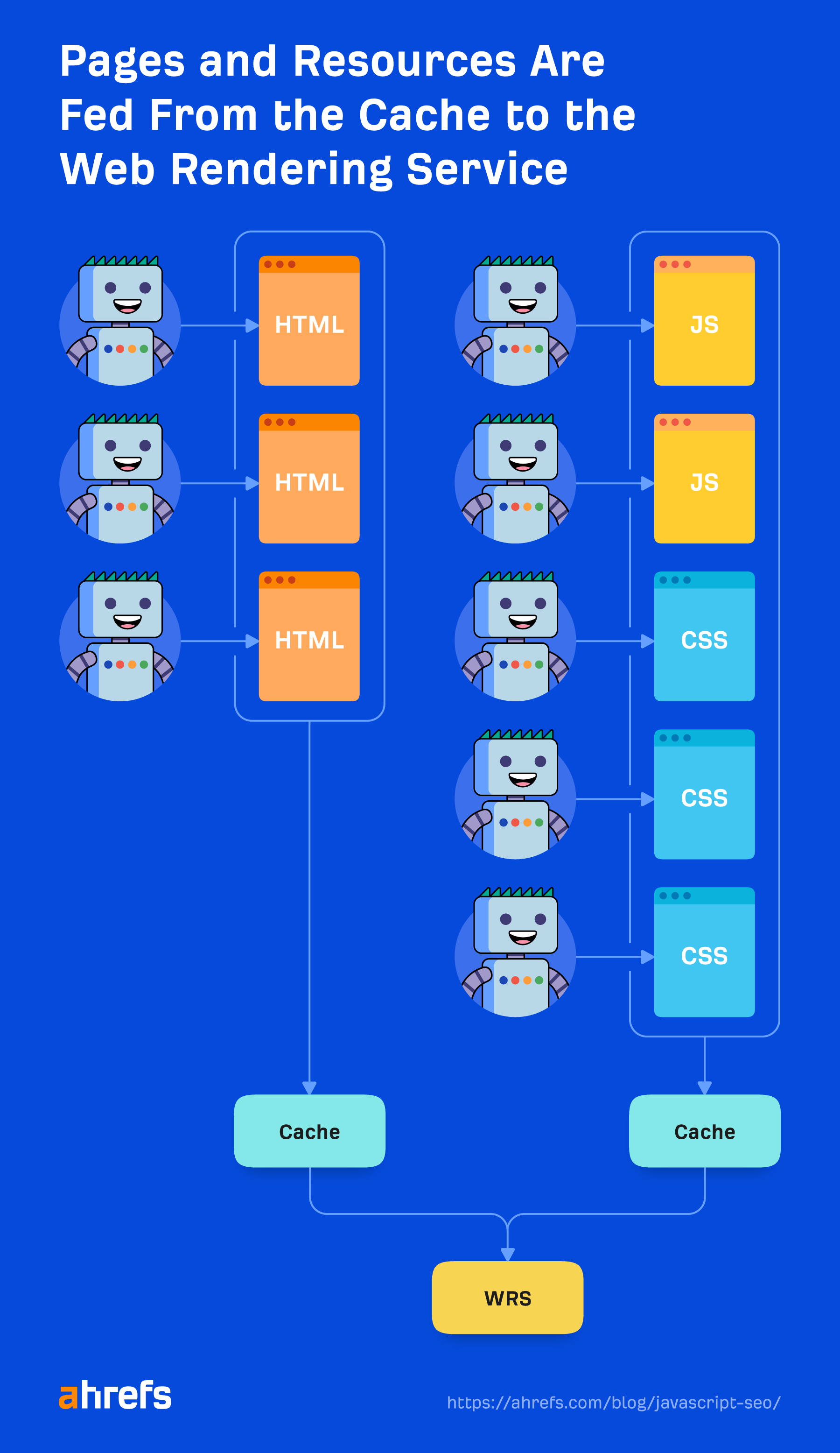

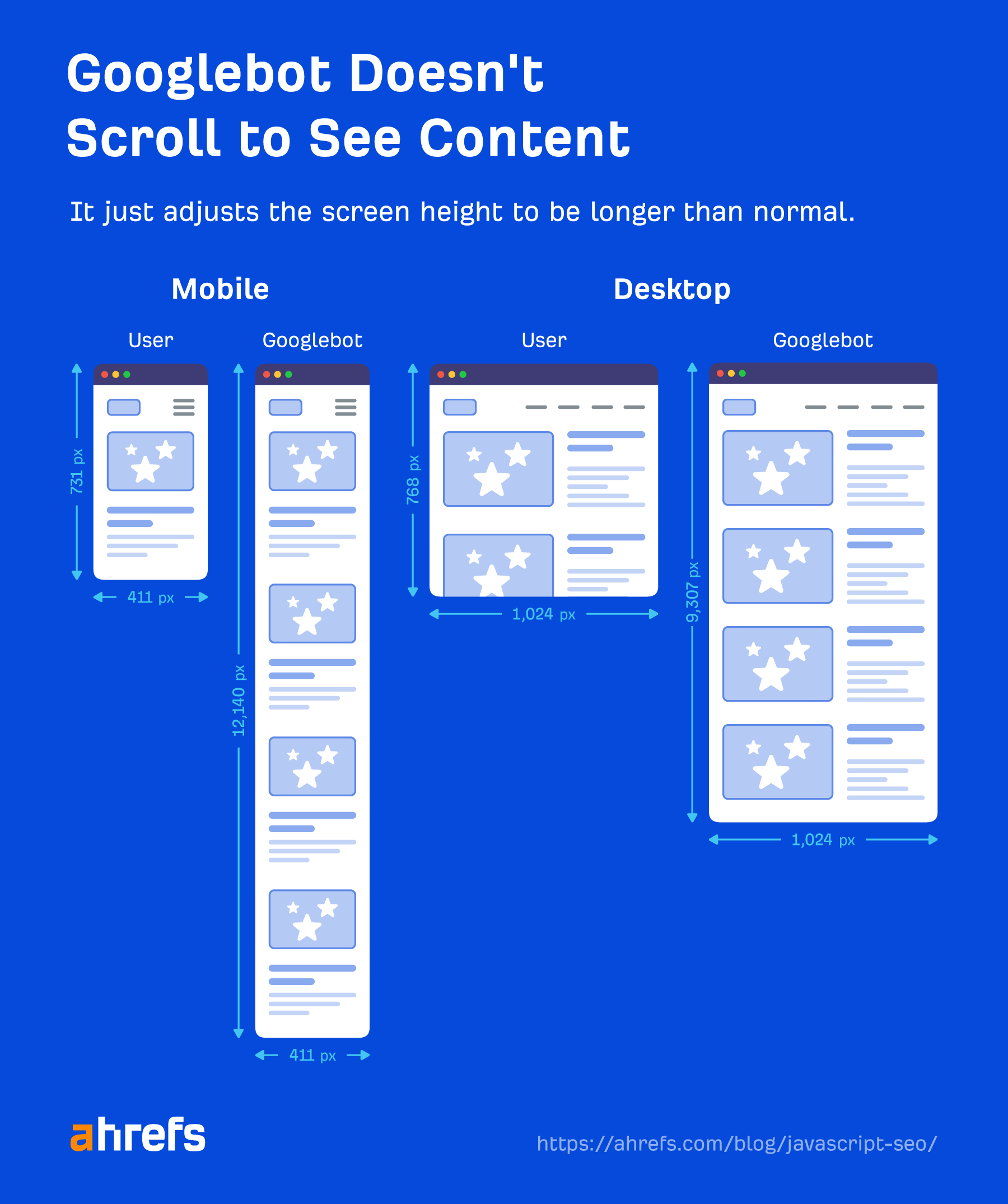

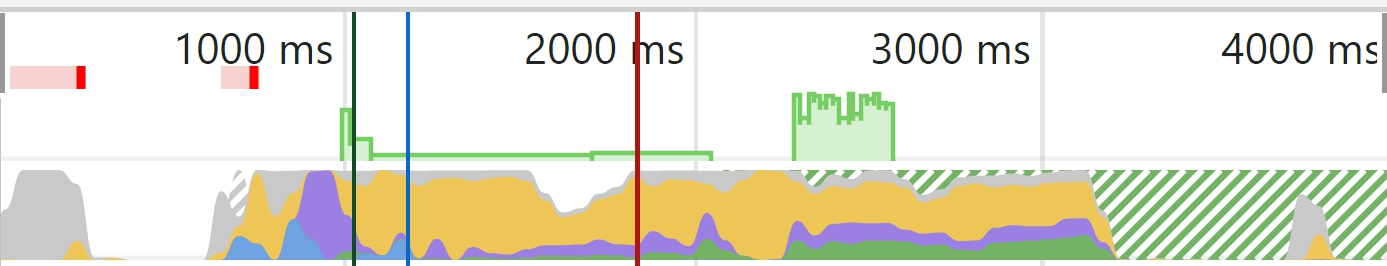

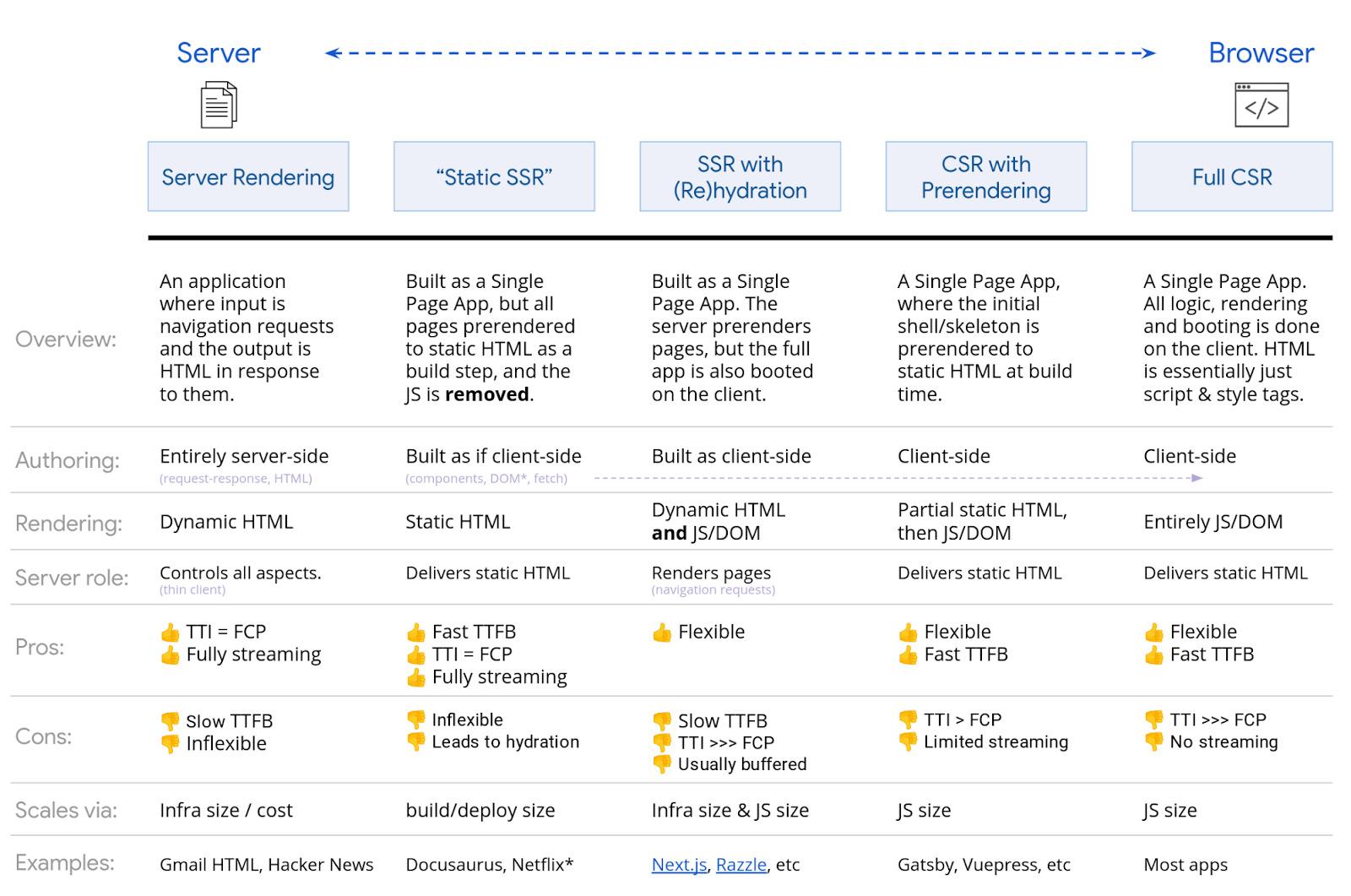

Did you know that while the Ahrefs Blog is powered by WordPress, much of the rest of the site is powered by JavaScript like React? The reality of the current web is that JavaScript is everywhere. Most websites use some kind of JavaScript to add interactivity and improve user experience. Yet most of the JavaScript used on so many websites won’t impact SEO at all. If you have a normal WordPress install without a lot of customization, then likely none of the issues will apply to you.

Where you will run into issues is when JavaScript is used to build an entire page, add or take away elements, or change what was already on the page. Some sites use it for menus, pulling in products or prices, grabbing content from multiple sources or, in some cases, for everything on the site. If this sounds like your site, keep reading.

We’re seeing entire systems and apps built with JavaScript frameworks and even some traditional CMSes with a JavaScript flair where they’re headless or decoupled. The CMS is used as the backend source of data, but the frontend presentation is handled by JavaScript.

“The web has moved from plain HTML - as an SEO you can embrace that. Learn from JS devs & share SEO knowledge with them. JS’s not going away.”

I’m not saying that SEOs need to go out and learn how to program JavaScript. I actually don’t recommend it because it’s not likely that you will ever touch the code. What SEOs need to know is how Google handles JavaScript and how to troubleshoot issues.

JavaScript SEO is a part of technical SEO (search engine optimization) that makes JavaScript-heavy websites easy to crawl and index, as well as search-friendly.
The goal is to have these websites be found and rank higher in search engines.

JavaScript is not bad for SEO, and it’s not evil. It’s just different from what many SEOs are used to, and there’s a bit of a learning curve. A lot of the processes are similar to things SEOs are already used to seeing, but there may be slight differences. You’re still going to be looking at mostly HTML code, not actually looking at JavaScript.

All the normal on-page SEO best practices still apply. See our guide on on-page SEO. You’ll even find familiar plugin-type options to handle a lot of the basic SEO elements, if it’s not already built into the framework you’re using. For JavaScript frameworks, these are called modules, and you’ll find lots of package options to install them. There are versions for many of the popular frameworks like React, Vue, Angular, and Svelte that you can find by searching for the framework + module name like “React Helmet.” Meta tags, Helmet, and Head are all popular modules with similar functionality and allow for many of the popular tags needed for SEO to be set.

In some ways, JavaScript is better than traditional HTML, such as ease of building and performance. In some ways, JavaScript is worse: it can’t be parsed progressively (like HTML and CSS can be), and it can be heavy on page load and performance. Often, you may be trading performance for functionality.

JavaScript isn’t perfect, and it isn’t always the right tool for the job. Developers do overuse it for things where there’s probably a better solution. But sometimes, you have to work with what you are given. These are many of the common SEO issues you may run into when working with JavaScript sites.
You’re still going to want to have unique title tags and meta descriptions across your pages. Because a lot of the JavaScript frameworks are templatized, you can easily end up in a situation where the same title or meta description is used for all pages or a group of pages. Check the Duplicates report in Ahrefs’ Site Audit and click into any of the groupings to see more data about the issues we found. You can use one of the SEO modules like Helmet to set custom tags for each page.

JavaScript can also be used to overwrite default values you may have set. Google will process this and use the overwritten title or description. For users, however, titles can be problematic, as one title may appear in the browser and they’ll notice a flash when it gets overwritten. If you see the title flashing, you can use Ahrefs’ SEO Toolbar to see both the raw HTML and rendered versions.

Google may not use your titles or meta descriptions anyway. As I mentioned, the titles are worth cleaning up for users. Fixing this for meta descriptions won’t really make a difference, though. When we studied Google’s rewriting, we found that Google overwrites titles 33.4% of the time and meta descriptions 62.78% of the time. In Site Audit, we’ll even show you which of your title tags Google has changed.

For years, Google said it didn’t respect canonical tags inserted with JavaScript. It finally added an exception to the documentation for cases where there wasn’t already a tag. I caused that change. I ran tests to show this worked when Google was telling everyone it didn’t.

What does the URL Inspection tool say about JavaScript inserted canonicals?
Here’s one for https://t.co/rZDpYgwK8r (no canonical in html), which was definitely respected and switched in the SERPs a while back but now even the tools are telling me they see and respect it. @JohnMu

If there was already a canonical tag present and you add another one or overwrite the existing one with JavaScript, then you’re giving them two canonical tags. In this case, Google has to figure out which one to use or ignore the canonical tags in favor of other canonicalization signals.

Standard SEO advice of “every page should have a self-referencing canonical tag” gets many SEOs in trouble. A dev takes that requirement, and they make pages with and without a trailing slash self-canonical: example.com/page with a canonical of example.com/page and example.com/page/ with a canonical of example.com/page/. Oops, that’s wrong! You probably want to redirect one of those versions to the other. The same thing can happen with parameterized versions that you may want to combine, but each is self-referencing.

With meta robots tags, Google is always going to take the most restrictive option it sees, no matter the location. If you have an index tag in the raw HTML and a noindex tag in the rendered HTML, Google will treat it as noindex. If you have a noindex tag in the raw HTML but you overwrite it with an index tag using JavaScript, it’s still going to treat that page as noindex. It works the same for nofollow tags. Google is going to take the most restrictive option.

Missing alt attributes are an accessibility issue, which may turn into a legal issue. Most big companies have been sued for ADA compliance issues on their websites, and some get sued multiple times a year.
I’d fix this for the main content images, but not for things like placeholder or decorative images where you can leave the alt attributes blank. For web search, the text in alt attributes counts as text on the page, but that’s really the only role it plays. Its importance is often overstated for SEO, in my opinion. However, it does help with image search and image rankings. Lots of JavaScript developers leave alt attributes blank, so double-check that yours are there. Look at the Images report in Site Audit to find these.

Don’t block access to resources if they are needed to build part of the page or add to the content. Google needs to access and download resources so that it can render the pages properly. In your robots.txt, the easiest way to allow the needed resources to be crawled is to add a `User-Agent: Googlebot` group with rules that allow those resources.

Also check the robots.txt files for any subdomains or additional domains you may be making requests from, such as those for your API calls. If you have blocked resources with robots.txt, you can check if it impacts the page content using the block options in the “Network” tab in Chrome DevTools. Select the file and block it, then reload the page to see if any changes were made.

Many pages with JavaScript functionality may not be showing all of the content to Google by default. If you talk to your developers, they may refer to this as being not Document Object Model (DOM) loaded. This means the content wasn’t loaded by default and might be loaded later with an action like a click. A quick check you can do is to simply search for a snippet of your content in Google inside quotation marks.
Search for “some phrase from your content” and see if the page is returned in the search results. If it is, then your content was likely seen. Sidenote: content that is hidden by default may not be shown within your snippet on the SERPs. It’s especially important to check your mobile version, as this is often stripped down for user experience.

You can also right-click and use the “Inspect” option. Search for the text within the “Elements” tab. The best check is going to be searching within the content of one of Google’s testing tools like the URL Inspection tool in Google Search Console. I’ll talk more about this later.

I’d definitely check anything behind an accordion or a dropdown. Often, these elements make requests that load content into the page when they are clicked on. Google doesn’t click, so it doesn’t see the content. If you use the inspect method to search content, make sure to copy the content and then reload the page or open it in an incognito window before searching. If you’ve clicked the element and the content loaded in when that action was taken, you’ll find the content. You may not see the same result with a fresh load of the page.

With JavaScript, there may be several URLs for the same content, which leads to duplicate content issues. This may be caused by capitalization, trailing slashes, IDs, parameters with IDs, etc. So several variants of a URL such as domain.com/Abc may all exist. If you only want one version indexed, you should set a self-referencing canonical and either canonical tags from other versions that reference the main version or, ideally, redirect the other versions to the main version. Check the Duplicates report in Site Audit.
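To make the duplicate-URL problem concrete, here is a minimal sketch of grouping URL variants that differ only by capitalization or a trailing slash. The helper names `normalizeUrl` and `groupDuplicates` are hypothetical, and the policy (lowercase path, no trailing slash) is an assumption for illustration; it is not how Site Audit or any framework does it, and lowercasing is only safe if your server treats paths case-insensitively.

```javascript
// Hypothetical helper: collapse common duplicate-URL variants to one form.
// Assumed policy: lowercase path, no trailing slash (except for the root).
function normalizeUrl(input) {
  const url = new URL(input); // standard URL class (browsers and Node.js)
  let path = url.pathname.toLowerCase();
  if (path.length > 1 && path.endsWith("/")) {
    path = path.slice(0, -1);
  }
  url.pathname = path;
  return url.toString();
}

// Group the URLs that normalize to the same value.
function groupDuplicates(urls) {
  const groups = new Map();
  for (const u of urls) {
    const key = normalizeUrl(u);
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(u);
  }
  return groups;
}

const groups = groupDuplicates([
  "https://domain.com/Abc",
  "https://domain.com/abc/",
  "https://domain.com/other",
]);
console.log(groups);
```

Any group with more than one member is a candidate for a redirect or a canonical tag pointing at the version you prefer.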
The Duplicates report breaks down which duplicate clusters have canonical tags set and which have issues. A common issue with JavaScript frameworks is that pages can exist with and without the trailing slash. Ideally, you’d pick the version you prefer and make sure that version has a self-referencing canonical tag and then redirect the other version to your preferred version.

With app shell models, very little content and code may be shown in the initial HTML response. In fact, every page on the site may display the same code, and this code may be the exact same as the code on some other websites. If you see a lot of URLs with a low word count in Site Audit, it may indicate you have this issue. This can sometimes cause pages to be treated as duplicates and not immediately go to rendering. Even worse, the wrong page or even the wrong site may show in search results. This should resolve itself over time but can be problematic, especially with newer websites.

The hash (#) already has a defined functionality for browsers. It links to another part of a page when clicked, like our “table of contents” feature on the blog. Servers generally won’t process anything after a #. So for a URL like abc.com/#something, anything after a # is typically ignored. JavaScript developers have decided they want to use # as the trigger for different purposes, and that causes confusion. The most common ways they’re misused are for routing and for URL parameters. Yes, they work. No, you shouldn’t do it.

JavaScript frameworks typically have routers that map what they call routes (paths) to clean URLs. A lot of JavaScript developers use hashes (#) for routing.
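To see why hash-based routes are a problem, it helps to look at how a URL is split up. This small sketch uses the standard `URL` class available in browsers and Node.js; the helper name `describeUrl` and the example addresses are made up for illustration. The fragment lives in `hash` and is not part of the path or query string that a server receives.

```javascript
// Split a URL into what the server is asked for and what stays in the browser.
function describeUrl(input) {
  const url = new URL(input);
  return {
    // Path plus query string: this is what the request asks the server for.
    requestTarget: url.pathname + url.search,
    // The fragment is handled client-side only.
    fragment: url.hash,
  };
}

const hashRoute = describeUrl("https://abc.com/#/products/42");
const cleanRoute = describeUrl("https://abc.com/products/42");

console.log(hashRoute);  // requestTarget is "/", fragment is "#/products/42"
console.log(cleanRoute); // requestTarget is "/products/42", fragment is ""
```

With the hash route, every “page” looks like a request for the same `/` path, which is why clean URLs are the safer choice.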
Hash-based routing is especially a problem for Vue and some of the earlier versions of Angular. To fix this for Vue, you can work with your developer to change the Vue router, which is created with `const router = new VueRouter({ ... })`, so that it uses history mode instead of hash mode.

There’s a growing trend where people are using # (the fragment identifier) instead of ? for URL parameters, especially for passive parameters like those used for tracking. I tend to recommend against it because of all of the confusion and issues. Situationally, I might be OK with it getting rid of a lot of unnecessary parameters.

The router options that allow for clean URLs usually have an additional module that can also create sitemaps. You can find them by searching for your system + router sitemap, such as “Vue router sitemap.” Many of the rendering solutions may also have sitemap options. Again, just find the system you use and Google the system + sitemap such as “Gatsby sitemap,” and you’re sure to find a solution that already exists.

Because JavaScript frameworks aren’t server-side, they can’t really throw a server error like a 404. You have a couple of different options for error pages.

SEOs are used to 301/302 redirects, which are server-side. JavaScript is typically run client-side. Server-side redirects and even meta refresh redirects will be easier for Google to process than JavaScript redirects since it won’t have to render the page to see them. JavaScript redirects will still be seen and processed during rendering and should be OK in most cases; they’re just not as ideal as other redirect types. They are treated as permanent redirects and still pass all signals like PageRank. You can often find these redirects in the code by looking for “window.location.href”.
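If you want to look for JavaScript redirects across many files, a simple pattern search is a reasonable first pass. The sketch below is only a heuristic: `findJsRedirects` is a hypothetical helper, the patterns cover just a few common ways of assigning to `window.location`, and it will miss redirects done through a framework router and may flag unrelated variables named `location`.

```javascript
// A few common client-side redirect forms (assumed list, not exhaustive).
const REDIRECT_PATTERNS = [
  /\b(?:window\.)?location\.href\s*=(?!=)/,            // location.href = "..."
  /\b(?:window\.)?location\s*=(?!=)/,                  // location = "..."
  /\b(?:window\.)?location\.(?:replace|assign)\s*\(/,  // location.replace("...")
];

// Return the 1-based line number and text of every line that looks like a redirect.
function findJsRedirects(source) {
  const hits = [];
  source.split("\n").forEach((line, index) => {
    if (REDIRECT_PATTERNS.some((re) => re.test(line))) {
      hits.push({ line: index + 1, text: line.trim() });
    }
  });
  return hits;
}

const sample = [
  "const a = 1;",
  'window.location.href = "/new-page";',
  'if (location.href === "/x") {}',
  'location.replace("/other");',
].join("\n");

console.log(findJsRedirects(sample));
```

The negative lookahead `(?!=)` keeps comparisons such as `location.href === "/x"` from being reported as redirects.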
JavaScript redirects could potentially be in the config file as well. In the Next.js config, there’s a redirects function you can use to set redirects. In other systems, you may find them in the router.

There are usually a few module options for different frameworks that support some features needed for internationalization like hreflang. They’ve commonly been ported to the different systems and include i18n and intl. Many times, the same modules used for header tags, like Helmet, can be used to add the needed tags. We flag hreflang issues in the Localization report in Site Audit. We also ran a study and found that 67% of domains using hreflang have issues.

You also need to be wary if your site is blocking or treating visitors from a specific country or using a particular IP in different ways. This can cause your content not to be seen by Googlebot. If you have logic redirecting users, you may want to exclude bots from this logic. We’ll let you know if this is happening when setting up a project in Site Audit.

JavaScript can be used to generate or to inject structured data on your pages. It’s pretty common to do this with JSON-LD and not likely to cause any issues, but run some tests to make sure everything comes out like you expect. We’ll flag any structured data we see in the Issues report in Site Audit. Look for the “Structured data has schema.org validation” error. We’ll tell you exactly what is wrong for each page.

Links to other pages should be in the web standard format. Internal and external links need to be an `<a>` tag with an href attribute. There are many ways you can make links work for users with JavaScript that are not search-friendly.
Good:

- `<a href="/page">simple is good</a>`
- `<a href="/page" onclick="goTo('page')">still okay</a>`

Bad:

- `<a onclick="goTo('page')">nope, no href</a>`
- `<a href="javascript:goTo('page')">nope, missing link</a>`
- `<a href="javascript:void(0)">nope, missing link</a>`
- `<span onclick="goTo('page')">not the right HTML element</span>`
- `<option value="page">nope, wrong HTML element</option>`
- `<a href="#">no link</a>`

Button, ng-click: there are many more ways this can be done incorrectly. In my experience, Google still processes many of the bad links and crawls them, but I’m not sure how it treats them as far as passing signals like PageRank. The web is a messy place, and Google’s parsers are often fairly forgiving. It’s also worth noting that internal links added with JavaScript will not get picked up until after rendering. That should be relatively quick and not a cause for concern in most cases.

Google heavily caches all resources on its end. I’ll talk about this a bit more later, but you should know that its system can lead to some impossible states being indexed. This is a quirk of its systems. In these cases, previous file versions are used in the rendering process, and the indexed version of a page may contain parts of older files. You can use file versioning or fingerprinting (file.12345.js) to generate new file names when significant changes are made so that Google has to download the updated version of the resource for rendering.

You may need to change your user-agent to properly diagnose some issues. Content can be rendered differently for different user-agents or even IPs. You should check what Google actually sees with its testing tools, and I’ll cover those in a bit.
You can set a custom user-agent with Chrome DevTools to troubleshoot sites that prerender based on specific user-agents, or you can easily do this with our toolbar as well.

There can be features used by developers that Googlebot doesn’t support. Your developers can use feature detection. And if there’s a missing feature, they can choose to either skip that functionality or use a fallback method with a polyfill to see if they can make it work. This is mostly an FYI for SEOs. If you see something you think Google should be seeing and it’s not seeing it, it could be because of the implementation.

Since I originally wrote this, lazy loading has mostly moved from being JavaScript-driven to being handled by browsers. You may still run into some JavaScript-driven lazy load setups. For the most part, they’re probably fine if the lazy loading is for images. The main thing I’d check is to see if content is being lazy loaded. Refer back to the checks described above for whether Google sees your content. These kinds of setups have caused problems with the content being picked up correctly.

If you have an infinite scroll setup, I still recommend a paginated page version so that Google can still crawl properly. Another issue I’ve seen with this setup is, occasionally, two pages get indexed as one. I’ve seen this a few times when people said they couldn’t get their page indexed. But I’ve found their content indexed as part of another page that’s usually the previous post from them. My theory is that when Google resized the viewport to be longer (more on this later), it triggered the infinite scroll and loaded another article in when it was rendering.
In this case, what I recommend is to block the JavaScript file that handles the infinite scrolling so the functionality can’t trigger.

A lot of the JavaScript frameworks take care of a ton of modern performance optimization for you. All of the traditional performance best practices still apply, but you get some fancy new options. Code splitting chunks the files into smaller files. Tree shaking breaks out needed parts, so you’re not loading everything for every page like you’d see in traditional monolithic setups. JavaScript setups done well are a thing of beauty. JavaScript setups that aren’t done well can be bloated and cause long load times. Check out our Core Web Vitals guide for more about website performance.

JavaScript XHR requests eat crawl budget, and I mean they gobble it down. Unlike most other resources that are cached, these get fetched live during the rendering process. Another interesting detail is that the rendering service tries not to fetch resources that don’t contribute to the content of the page. If it gets this wrong, you may be missing some content.

While Google historically says that it rejects service workers and service workers can’t edit the DOM, Google’s own Martin Splitt indicated that you may get away with using web workers sometimes.

“So this is an interesting one. It’s not straight forward: It turns out web workers are supported BUT rendering doesn’t seem to wait on deferred work. (setTimeout for instance). So as long as you schedule your work immediately, you’ll be fine.”

Googlebot supports HTTP requests but doesn’t support other connection types like WebSockets or WebRTC. If you’re using those, provide a fallback that uses HTTP connections.

One “gotcha” with JavaScript sites is they can do partial updates of the DOM.
Browsing to another page as a user may not update some aspects like title tags or canonical tags in the DOM, but this may not be an issue for search engines. Google loads each page stateless like it’s a fresh load. It’s not saving previous information and not navigating between pages. I’ve seen SEOs get tripped up thinking there is a problem because of what they see after navigating from one page to another, such as a canonical tag that doesn’t update. But Google may never see this state. Devs can fix this by updating the state using what’s called the History API. But again, it may not be a problem. A lot of the time, it’s just SEOs making trouble for the developers because it looks weird to them. Refresh the page and see what you see. Or better yet, run it through one of Google’s testing tools to see what it sees. Speaking of its testing tools, let’s talk about those.

Google has several testing tools that are useful for JavaScript.

The URL Inspection tool in Google Search Console should be your source of truth. When you inspect a URL, you’ll get a lot of info about what Google saw and the actual rendered HTML from its system. You have the option to run a live test as well.

There are some differences between the main renderer and the live test. The renderer uses cached resources and is fairly patient. The live test and other testing tools use live resources, and they cut off rendering early because you’re waiting for a result. I’ll go into more detail about this in the rendering section later. The screenshots in these tools also show pages with the pixels painted, which Google doesn’t actually do when rendering a page. The tools are useful to see if content is DOM-loaded.
The HTML shown in these tools is the rendered DOM. You can search for a snippet of text to see if it was loaded in by default. The tools will also show you resources that may be blocked and console error messages, which are useful for debugging.

If you don’t have access to the Google Search Console property for a website, you can still run a live test on it. If you add a redirect on your own website on a property where you have Google Search Console access, then you can inspect that URL and the inspection tool will follow the redirect and show you the live test result for the page on the other domain. In the screenshot below, I added a redirect from my site to Google’s homepage. The live test for this follows the redirect and shows me Google’s homepage. I do not actually have access to Google’s Google Search Console account, though I wish I did.

The Rich Results Test tool allows you to check your rendered page as Googlebot would see it for mobile or for desktop. You can still use the Mobile-Friendly Test tool for now, but Google has announced it is shutting down in December 2023. It has the same quirks as the other testing tools from Google.

Ahrefs is the only major SEO tool that renders webpages when crawling the web, so we have data from JavaScript sites that no other tool does. We render ~200M pages a day, but that’s a fraction of what we crawl. It allows us to check for JavaScript redirects. We can also show links we found inserted with JavaScript, which we show with a JS tag in the link reports. In the drop-down menu for pages in Site Explorer, we also have an inspect option that lets you see the history of a page and compare it to other crawls.
We have a JS marker there for pages that were rendered with JavaScript enabled.

You can enable JavaScript in Site Audit crawls to unlock more data in your audits. If you have JavaScript rendering enabled, we will provide the raw and rendered HTML for every page. Use the “magnifying glass” option next to a page in Page Explorer and go to “View source” in the menu. You can also compare against previous crawls and search within the raw or rendered HTML across all pages on the site. If you run a crawl without JavaScript and then another one with it, you can use our crawl comparison features to see differences between the versions.

Ahrefs’ SEO Toolbar also supports JavaScript and allows you to compare HTML to rendered versions of tags.

When you right-click in a browser window, you’ll see a couple of options for viewing the source code of the page and for inspecting the page. View source is going to show you the same as a GET request would. This is the raw HTML of the page. Inspect shows you the processed DOM after changes have been made and is closer to the content that Googlebot sees. It’s the page after JavaScript has run and made changes to it. You should mostly use inspect over view source when working with JavaScript.

Because Google looks at both raw and rendered HTML for some issues, you may still need to check view source at times. For instance, if Google’s tools are telling you the page is marked noindex, but you don’t see a noindex tag in the rendered HTML, it’s possible that it was there in the raw HTML and overwritten. For things like noindex, nofollow, and canonical tags, you may need to check the raw HTML since issues can carry over.
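As a rough illustration of checking both versions of a page, the sketch below pulls canonical URLs out of a raw HTML string and a rendered HTML string and reports whether they disagree. Everything here is an assumption made for the example: the helper names are hypothetical, the inputs are strings you have already saved from view source and from inspect, and the regex-based tag matching is a simplification (a real audit should use a proper HTML parser).

```javascript
// Simplified attribute reader for a single tag string.
function getAttr(tag, name) {
  const match = tag.match(new RegExp(`\\b${name}\\s*=\\s*["']([^"']*)["']`, "i"));
  return match ? match[1] : null;
}

// Collect the href of every <link rel="canonical"> in an HTML string.
function extractCanonicals(html) {
  const tags = html.match(/<link\b[^>]*>/gi) || [];
  return tags
    .filter((tag) => (getAttr(tag, "rel") || "").toLowerCase() === "canonical")
    .map((tag) => getAttr(tag, "href"))
    .filter((href) => href !== null);
}

// Compare raw and rendered HTML; more than one distinct canonical is a conflict.
function compareCanonicals(rawHtml, renderedHtml) {
  const raw = extractCanonicals(rawHtml);
  const rendered = extractCanonicals(renderedHtml);
  const distinct = new Set([...raw, ...rendered]);
  return { raw, rendered, conflicting: distinct.size > 1 };
}

const rawHtml = '<head><link rel="canonical" href="https://example.com/page"></head>';
const renderedHtml = '<head><link href="https://example.com/page/" rel="canonical"></head>';
console.log(compareCanonicals(rawHtml, renderedHtml));
```

In this made-up example the raw and rendered canonicals differ only by a trailing slash, which is exactly the kind of mismatch that is easy to miss when you look at just one version.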
Remember that Google will take the most restrictive statements it saw for the meta robots tags, and it may ignore canonical tags when you show it multiple canonical tags.

Browsing with JavaScript turned off is another check I’ve seen recommended way too many times. Google renders JavaScript, so what you see without JavaScript is not at all like what Google sees. This is just silly.

Google’s cache is not a reliable way to check what Googlebot sees. What you typically see in the cache is the raw HTML snapshot. Your browser then fires the JavaScript that is referenced in the HTML. It’s not what Google saw when it rendered the page. To complicate this further, websites may have their Cross-Origin Resource Sharing (CORS) policy set up in a way that the required resources can’t be loaded from a different domain. The cache is hosted on webcache.googleusercontent.com. When that domain tries to request the resources from the actual domain, the CORS policy says, “Nope, you can’t access my files.” Then the files aren’t loaded, and the page looks broken in the cache. The cache system was made to see the content when a website is down. It’s not particularly useful as a debug tool.

In the early days of search engines, a downloaded HTML response was enough to see the content of most pages. Thanks to the rise of JavaScript, search engines now need to render many pages as a browser would so they can see content the way a user sees it. The system that handles the rendering process at Google is called the Web Rendering Service (WRS). Google has provided a simplistic diagram to cover how this process works.

Let’s say we start the process at URL. The crawler sends GET requests to the server. The server responds with headers and the contents of the file, which then gets saved.
The headers and the content typically come in the same response. The request is likely to come from a mobile user-agent since Google is on mobile-first indexing now, but it also still crawls with the desktop user-agent. The requests mostly come from Mountain View (CA, U.S.), but it also does some crawling for locale-adaptive pages outside of the U.S. As I mentioned earlier, this can cause issues if sites are blocking or treating visitors in a specific country in different ways.

It’s also important to note that while Google states the output of the crawling process as “HTML” on the image above, in reality, it’s crawling and storing the resources needed to build the page like the HTML, JavaScript files, and CSS files. There’s also a 15 MB max size limit for HTML files.

There are a lot of systems obfuscated by the term “Processing” in the image. I’m going to cover a few of these that are relevant to JavaScript.

Google does not navigate from page to page as a user would. Part of “Processing” is to check the page for links to other pages and files needed to build the page. These links are pulled out and added to the crawl queue, which is what Google is using to prioritize and schedule crawling. Google will pull resource links (CSS, JS, etc.) needed to build a page from things like `<link>` tags. As I mentioned earlier, internal links added with JavaScript will not get picked up until after rendering. That should be relatively quick and not a cause for concern in most cases. Things like news sites may be the exception where every second counts.

Every file that Google downloads, including HTML pages, JavaScript files, CSS files, etc., is going to be aggressively cached.
Google will ignore your cache timings and fetch a fresh copy when it wants to. I’ll talk a bit more about this and why it’s important in the “Renderer” section.

Duplicate content may be eliminated or deprioritized from the downloaded HTML before it gets sent to rendering. I already talked about this in the “Duplicate content” section above.

As I mentioned earlier, Google will choose the most restrictive statements between HTML and the rendered version of a page. If JavaScript changes a statement and that conflicts with the statement from HTML, Google will simply obey whichever is the most restrictive. Noindex will override index, and noindex in HTML will skip rendering altogether.

One of the biggest concerns from many SEOs with JavaScript and two-stage indexing (HTML, then rendered page) is that pages may not get rendered for days or even weeks. When Google looked into this, it found pages went to the renderer at a median time of five seconds, and the 90th percentile was minutes. So the amount of time between getting the HTML and rendering the pages should not be a concern in most cases.

However, Google doesn’t render all pages. Like I mentioned previously, a page with a robots meta tag or header containing a noindex tag will not be sent to the renderer. It won’t waste resources rendering a page it can’t index anyway.

It also has quality checks in this process. If it looks at the HTML or can reasonably determine from other signals or patterns that a page isn’t good enough quality to index, then it won’t bother sending that to the renderer.

There’s also a quirk with news sites. Google wants to index pages on news sites fast, so it can index the pages based on the HTML content first and come back later to render these pages.
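The “most restrictive wins” rule described above can be sketched in a few lines. This is only an illustration of the behavior as the article describes it, not Google’s code: `parseDirectives` and `resolveRobots` are hypothetical helpers, and the inputs stand for the `content` value of the robots meta tag in the raw HTML and in the rendered DOM.

```javascript
// Sketch only: models "most restrictive directive wins" for robots meta tags.
// Split a robots content string such as "noindex, follow" into a Set.
function parseDirectives(content) {
  return new Set(
    (content || "")
      .toLowerCase()
      .split(",")
      .map((directive) => directive.trim())
      .filter(Boolean)
  );
}

// htmlContent: directives found in the raw HTML.
// renderedContent: directives found after JavaScript has run.
function resolveRobots(htmlContent, renderedContent) {
  const html = parseDirectives(htmlContent);

  // A noindex in the raw HTML means the page is never sent to the renderer,
  // so JavaScript gets no chance to change it.
  if (html.has("noindex")) {
    return { index: false, follow: !html.has("nofollow"), rendered: false };
  }

  // Otherwise combine both versions and obey the most restrictive values.
  const rendered = parseDirectives(renderedContent);
  const combined = new Set([...html, ...rendered]);
  return {
    index: !combined.has("noindex"),
    follow: !combined.has("nofollow"),
    rendered: true,
  };
}

console.log(resolveRobots("index, follow", "noindex"));
console.log(resolveRobots("noindex", "index, follow"));
```

The second call is the case that catches people out: JavaScript that swaps `noindex` for `index` has no effect in this model, because the page was never rendered.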
The renderer is where Google renders a page to see what a user sees. This is where it’s going to process the JavaScript and any changes made by JavaScript to the DOM.

For this, Google is using a headless Chrome browser that is now “evergreen,” which means it should use the latest Chrome version and support the latest features. Years ago, Google was rendering with Chrome 41, and many features were not supported at that time.

Google has more info on the WRS, which includes things like denying permissions, being stateless, flattening light DOM and shadow DOM, and more that is worth reading.

Rendering at web-scale may be the eighth wonder of the world. It’s a serious undertaking and takes a tremendous amount of resources. Because of the scale, Google is taking many shortcuts with the rendering process to speed things up.

Google is relying heavily on caching resources. Pages are cached. Files are cached. Nearly everything is cached before being sent to the renderer. It’s not going out and downloading each resource for every page load, because that would be expensive for it and website owners. Instead, it uses these cached resources to be more efficient.

The exception to that is XHR requests, which the renderer will do in real time.

A common SEO myth is that Google only waits five seconds to load your page. While it’s always a good idea to make your site faster, this myth doesn’t really make sense with the way Google caches files mentioned above. It’s already loading a page with everything cached in its systems, not making requests for fresh resources.

If it only waited five seconds, it would miss a lot of content.
The myth likely comes from the testing tools like the URL Inspection tool, where resources are fetched live instead of cached, and they need to return a result to users within a reasonable amount of time. It could also come from pages not being prioritized for crawling, which makes people think they’re waiting a long time to render and index them.

There is no fixed timeout for the renderer. It runs with a sped-up timer to see if anything is added at a later time. It also looks at the event loop in the browser to see when all of the actions have been taken. It’s really patient, and you should not be concerned about any specific time limit.

It is patient, but it also has safeguards in place in case something gets stuck or someone is trying to mine Bitcoin on its pages. Yes, it’s a thing. We had to add safeguards for Bitcoin mining as well and even published a study about it.

Googlebot doesn’t take action on webpages. It’s not going to click things or scroll, but that doesn’t mean it doesn’t have workarounds. As long as content is loaded in the DOM without a needed action, Google will see it. If it’s not loaded into the DOM until after a click, then the content won’t be found.

Google doesn’t need to scroll to see your content either because it has a clever workaround to see the content. For mobile, it loads the page with a screen size of 411x731 pixels and resizes the length to 12,140 pixels. Essentially, it becomes a really long phone with a screen size of 411x12140 pixels. For desktop, it does the same and goes from 1024x768 pixels to 1024x9307 pixels. I haven’t seen any recent tests for these numbers, and it may change depending on how long the pages are.
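As a rough way to reason about the tall-viewport workaround, the sketch below checks whether an element at a given vertical offset would fall inside the resized viewport, which is what matters for content that loads when it enters the viewport. The viewport sizes are the ones quoted above and may be out of date; `RENDER_VIEWPORTS` and `inResizedViewport` are hypothetical names, and the model ignores everything except vertical position.

```javascript
// Viewport sizes quoted in the article; treat them as approximate.
const RENDER_VIEWPORTS = {
  mobile: { width: 411, initialHeight: 731, resizedHeight: 12140 },
  desktop: { width: 1024, initialHeight: 768, resizedHeight: 9307 },
};

// Returns true if an element whose top edge sits `offsetTop` pixels from
// the top of the page starts inside the resized viewport for that device.
function inResizedViewport(offsetTop, device) {
  const viewport = RENDER_VIEWPORTS[device];
  if (!viewport) {
    throw new Error(`Unknown device: ${device}`);
  }
  return offsetTop >= 0 && offsetTop < viewport.resizedHeight;
}

// A lazy-loaded section 5,000px down the page is inside both viewports.
console.log(inResizedViewport(5000, "mobile"));
console.log(inResizedViewport(5000, "desktop"));

// At 10,000px it is inside the mobile viewport but past the desktop one.
console.log(inResizedViewport(10000, "mobile"));
console.log(inResizedViewport(10000, "desktop"));
```

Under these assumed numbers, content that only loads when scrolled into view far down a very long page is the kind most at risk of being missed, which is one reason to test long pages rather than rely on the figures.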
Another interesting shortcut is that Google doesn’t paint the pixels during the rendering process. It takes time and additional resources to finish a page load, and it doesn’t really need to see the final state with the pixels painted. Besides, graphics cards are expensive between gaming, crypto mining, and AI.

Google just needs to know the structure and the layout, and it gets that without having to actually paint the pixels. As Martin puts it:

“In Google search we don’t really care about the pixels because we don’t really want to show it to someone. We want to process the information and the semantic information so we need something in the intermediate state. We don’t have to actually paint the pixels.”

A visual may help explain what is cut out a bit better. In Chrome Dev Tools, if you run a test on the “Performance” tab, you get a loading chart. The solid green part here represents the painting stage. For Googlebot, that never happens, so it saves resources.

Gray = Downloads

Google has a resource that talks a bit about crawl budget. But you should know that each site has its own crawl budget, and each request has to be prioritized. Google also has to balance crawling your pages vs. every other page on the internet.

Newer sites in general or sites with a lot of dynamic pages will likely be crawled slower. Some pages will be updated less often than others, and some resources may also be requested less frequently.

There are lots of options when it comes to rendering JavaScript. Google has a solid chart that I’m just going to show.

Any kind of SSR, static rendering, and prerendering setup is going to be fine for search engines. Gatsby, Next, Nuxt, etc., are all great.
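To show why server-side rendering is the safe choice, here is a minimal sketch comparing what a crawler receives in the two cases. It is not tied to any of the frameworks named above; the product data, the `/app.js` path, and the helper names are made up for the example.

```javascript
// Hypothetical product data; a real app would load it from an API or database.
const product = { name: "Trail Shoe", price: "89.00" };

// Escape text before placing it into HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Server-side rendering: the content is already in the HTML response,
// so it can be read without executing any JavaScript.
function renderOnServer(item) {
  return `<!doctype html>
<html>
  <head><title>${escapeHtml(item.name)}</title></head>
  <body>
    <div id="app">
      <h1>${escapeHtml(item.name)}</h1>
      <p>Price: ${escapeHtml(item.price)} USD</p>
    </div>
  </body>
</html>`;
}

// Client-side rendering: the response is an empty shell, and the content
// only appears after /app.js runs in a browser or a renderer.
function renderClientShell() {
  return `<!doctype html>
<html>
  <head><title>Loading</title></head>
  <body>
    <div id="app"></div>
    <script src="/app.js"></script>
  </body>
</html>`;
}

console.log(renderOnServer(product).includes("Trail Shoe"));
console.log(renderClientShell().includes("Trail Shoe"));
```

A search engine that does not run JavaScript sees the product name only in the first response, which is the practical difference between these rendering options.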
The most problematic one is going to be full client-side rendering, where all of the rendering happens in the browser. While Google will probably be OK with client-side rendering, it’s best to choose a different rendering option to support other search engines.

Bing also has support for JavaScript rendering, but the scale is unknown. Yandex and Baidu have limited support from what I’ve seen, and many other search engines have little to no support for JavaScript. Our own search engine, Yep, has support, and we render ~200M pages per day. But we don’t render every page we crawl.

There’s also the option of dynamic rendering, which is rendering for certain user-agents. This is a workaround and, to be honest, I never recommended it and am glad Google is recommending against it now as well.

Situationally, you may want to use it to render for certain bots like search engines or even social media bots. Social media bots don’t run JavaScript, so things like OG tags won’t be seen unless you render the content before serving it to them.

Practically, it makes setups more complex and harder for SEOs to troubleshoot. It’s definitely cloaking, even though Google says it’s not and that it’s OK with it.

Note: If you were using the old AJAX crawling scheme with hashbangs (#!), do know this has been deprecated and is no longer supported.

JavaScript is not something for SEOs to fear. Hopefully, this article has helped you understand how to work with it better.

Don’t be afraid to reach out to your developers and work with them and ask them questions. They are going to be your greatest allies in helping to improve your JavaScript site for search engines.

Have questions? Let me know on Twitter.

Have unique title tags and meta descriptions

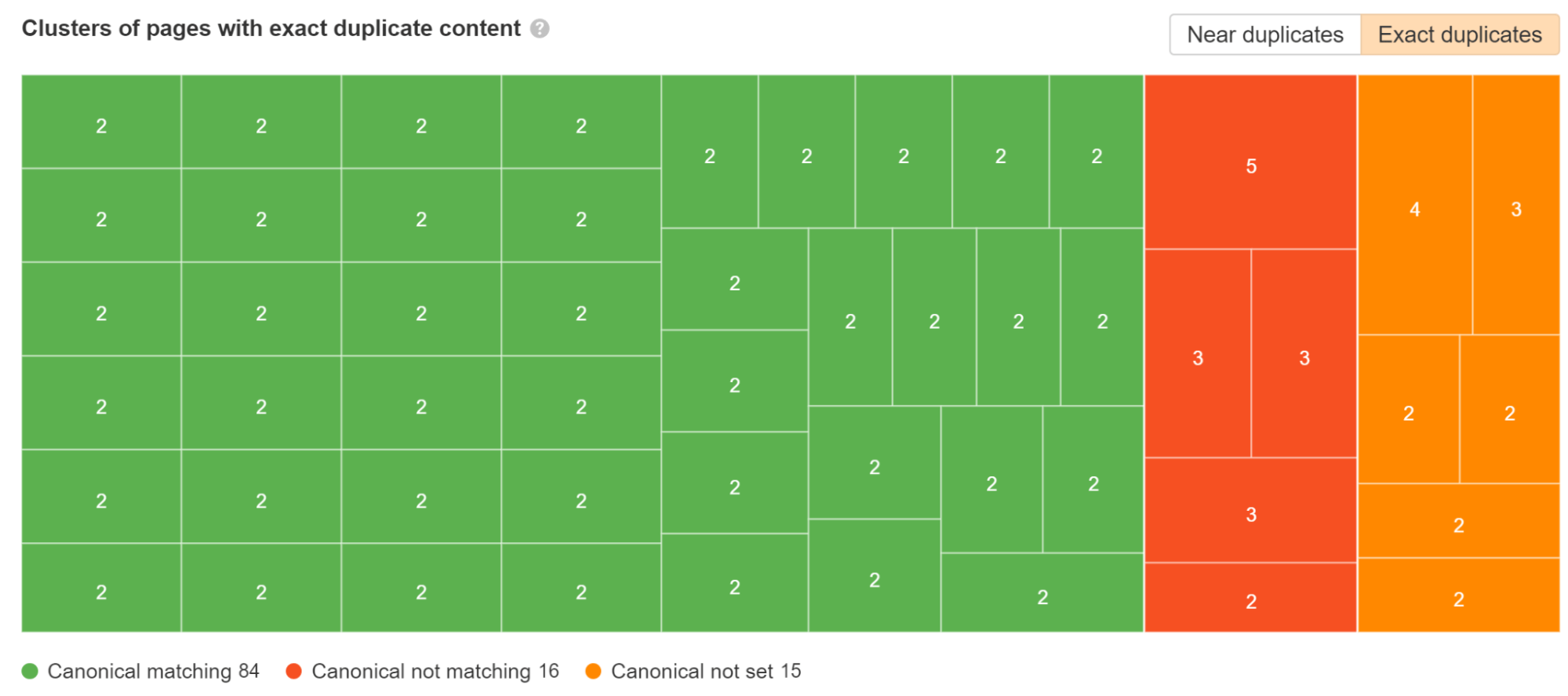

Canonical tag issues

Google uses the most restrictive meta robots tag

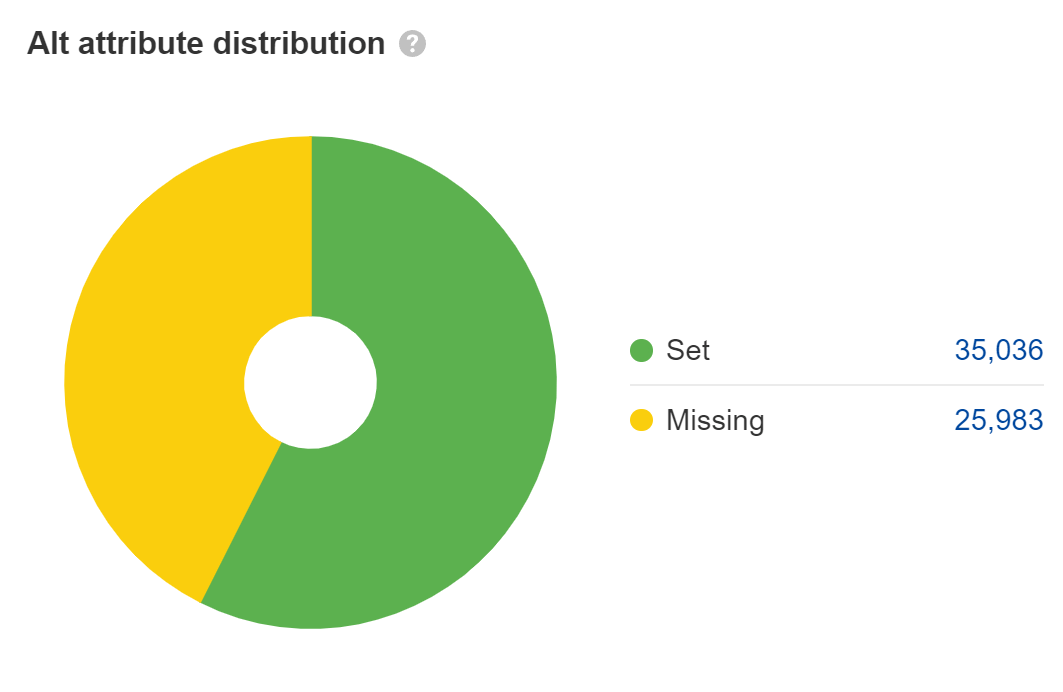

Set alt attributes on images

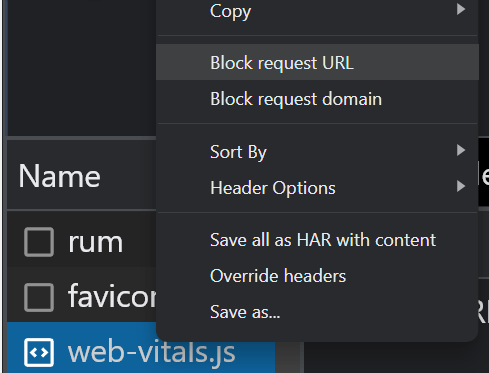

Allow crawling of JavaScript files

Allow: .js

Allow: .css

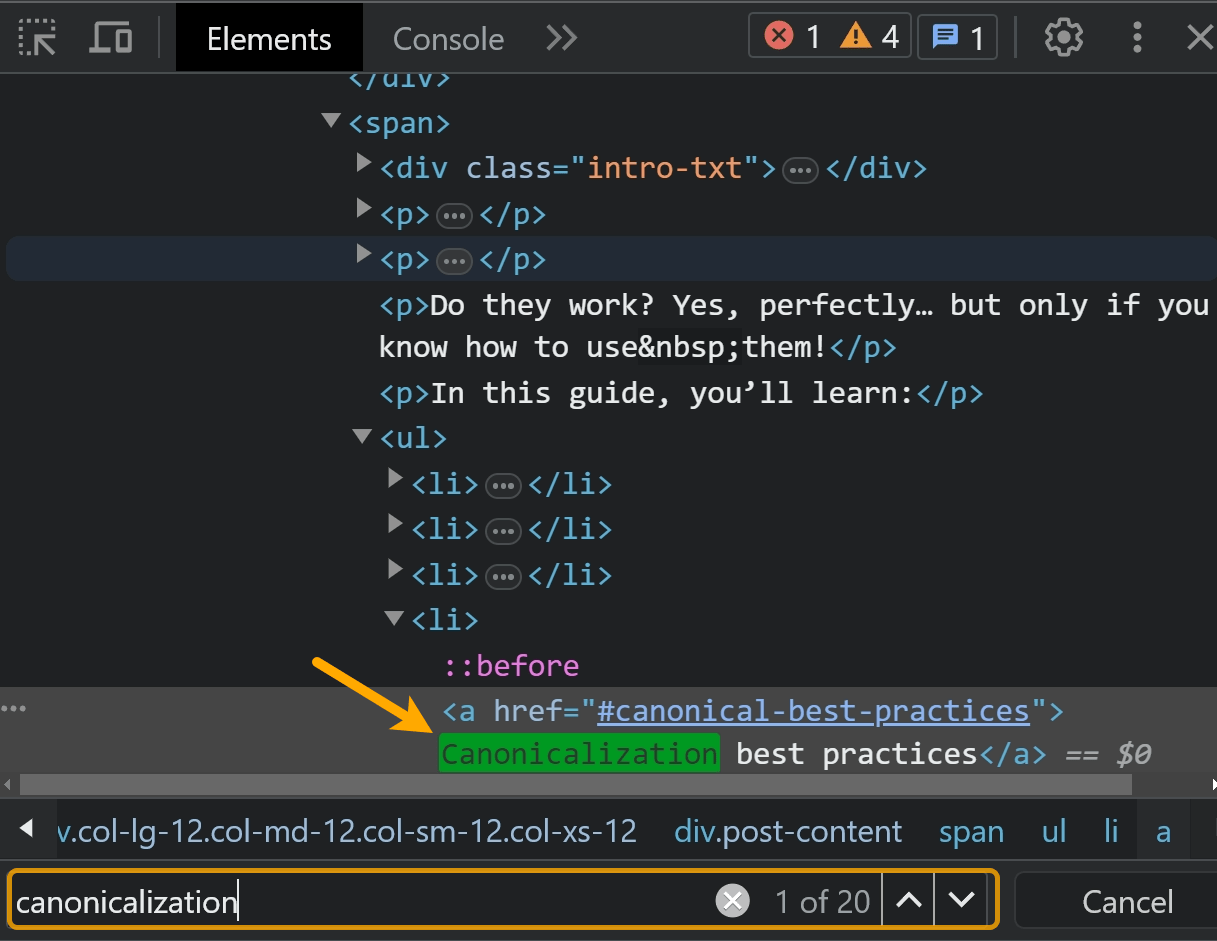

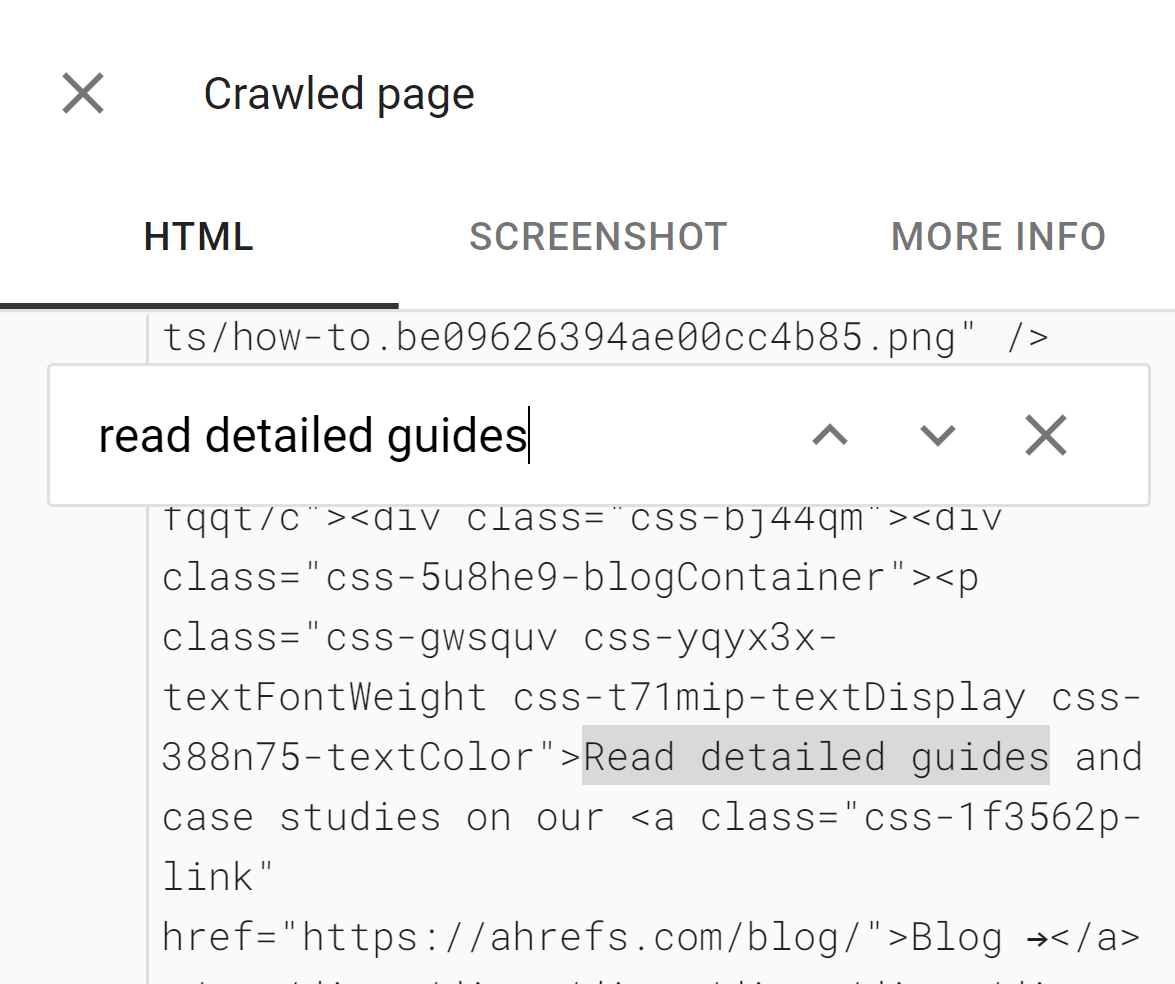

Check if Google sees your content

Duplicate content issues

domain.com/abc

domain.com/123

domain.com/?id=123

Don’t use fragments (#) in URLs

Use ‘History’ Mode instead of the traditional ‘Hash’ Mode.

    const router = new VueRouter({
      mode: 'history',
      routes: [] // the array of route definitions
    })

Create a sitemap

Status codes and soft 404s

JavaScript redirects are OK, but not preferred

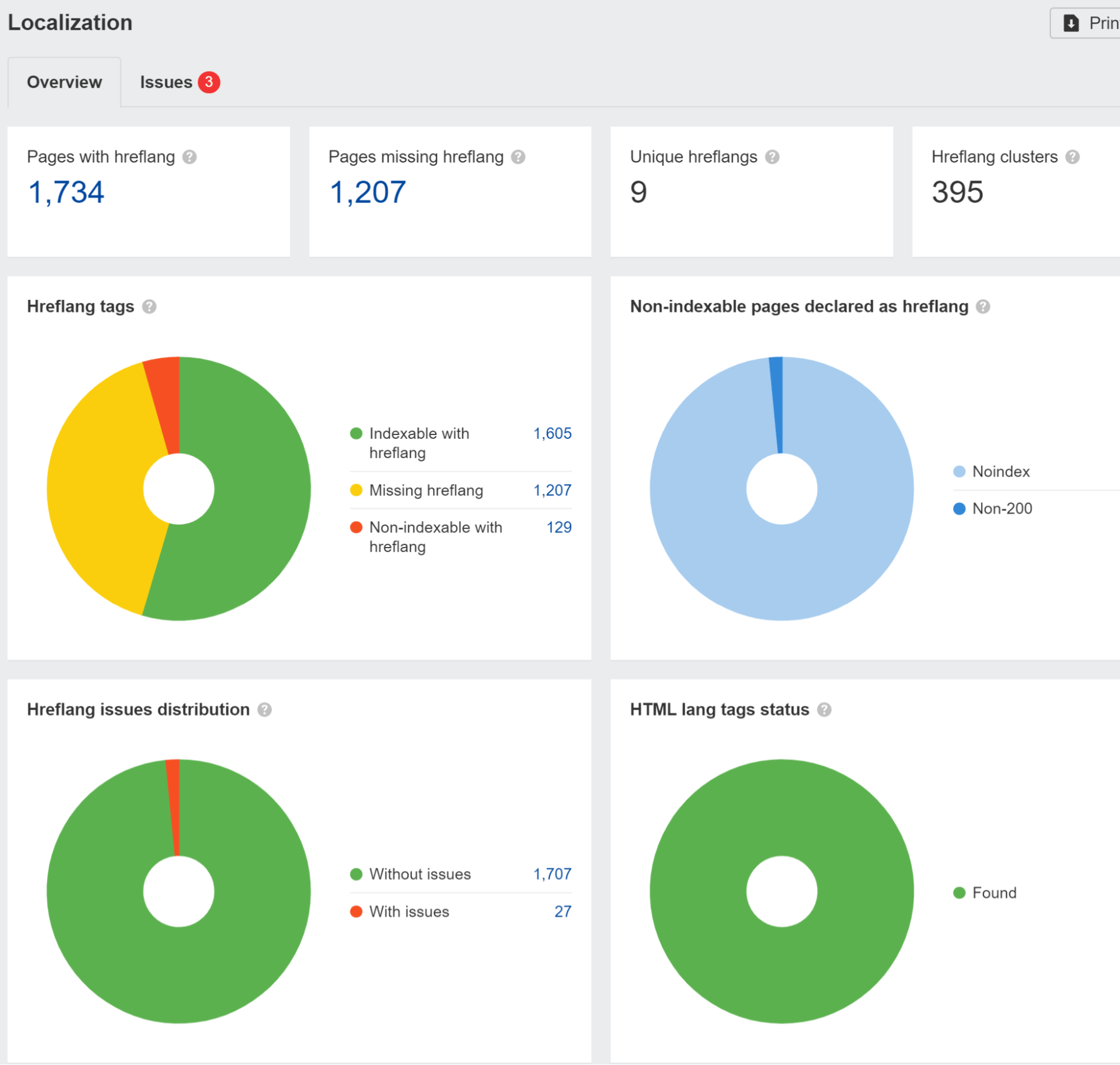

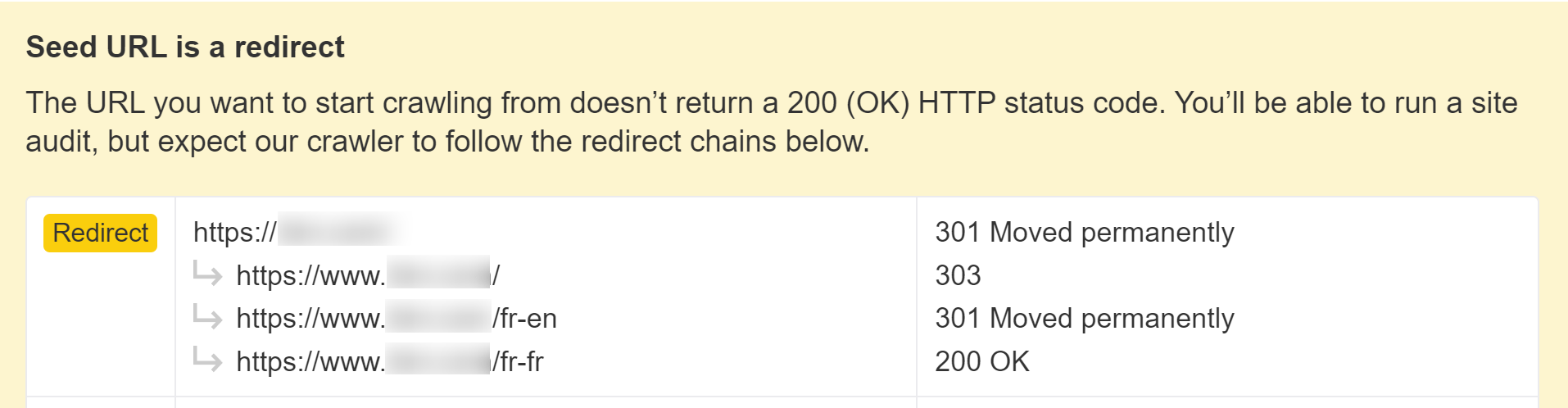

Internationalization issues

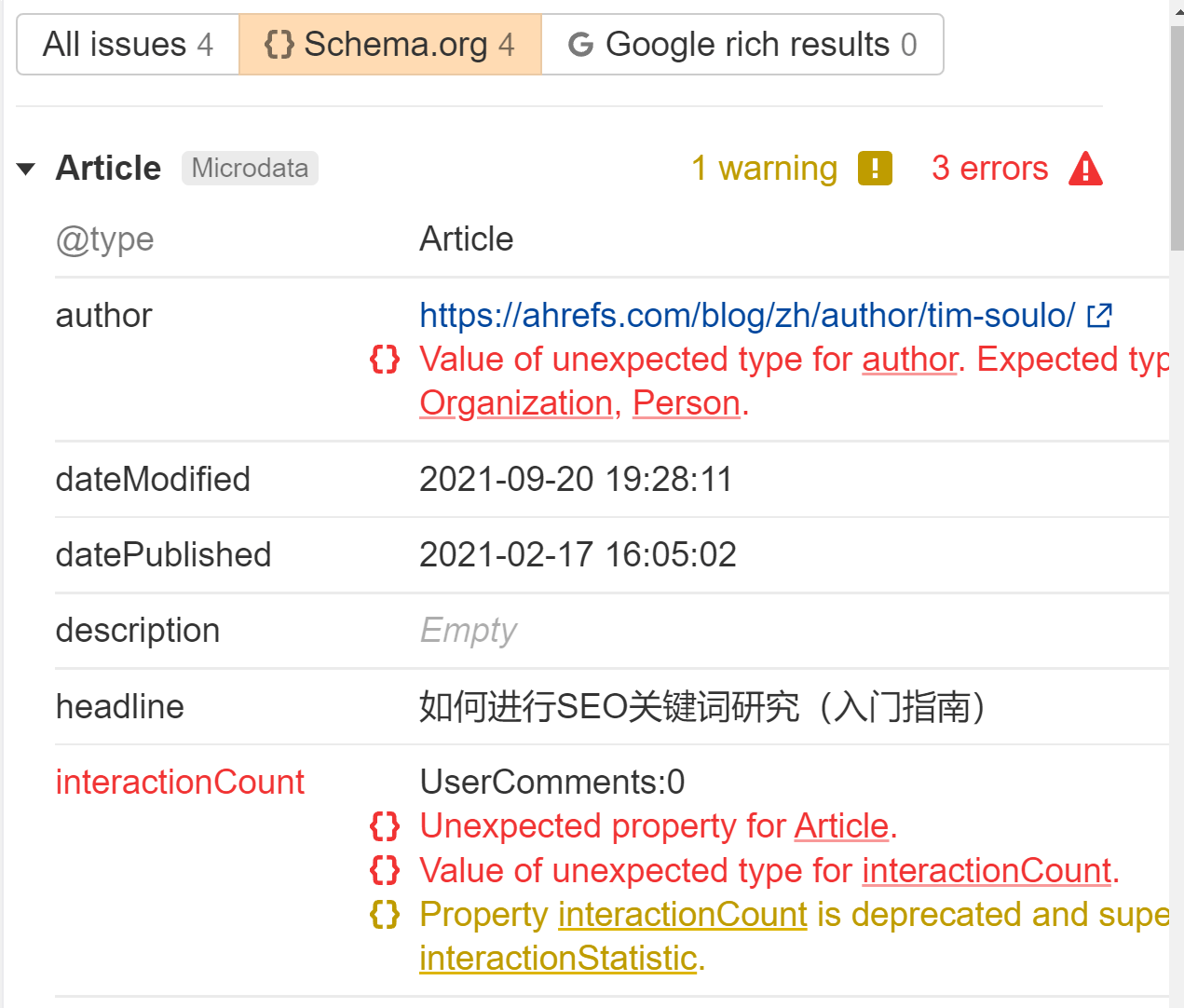

Use structured data

Use standard format links

Use file versioning to solve for impossible states being indexed

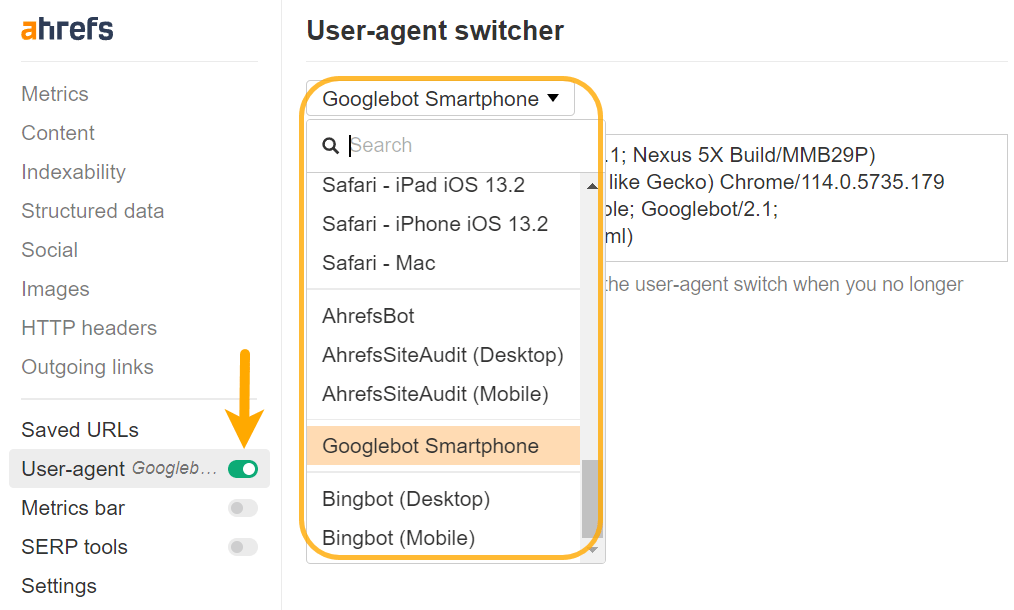

You may not see what is shown to Googlebot

Use polyfills for unsupported features

Use lazy loading

Infinite scroll issues

Performance issues

JavaScript sites use more crawl budget

Workers aren’t supported, or are they?

Use HTTP connections

Google testing tools

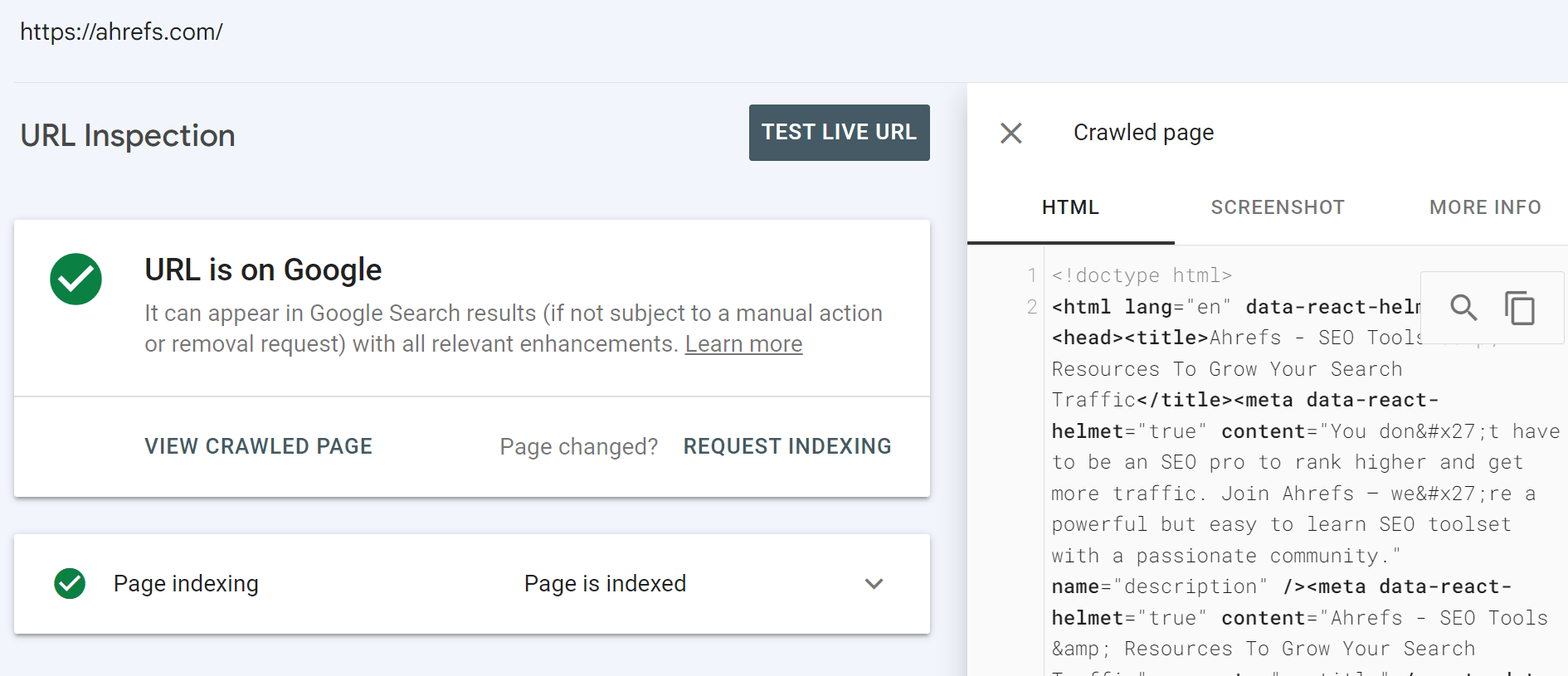

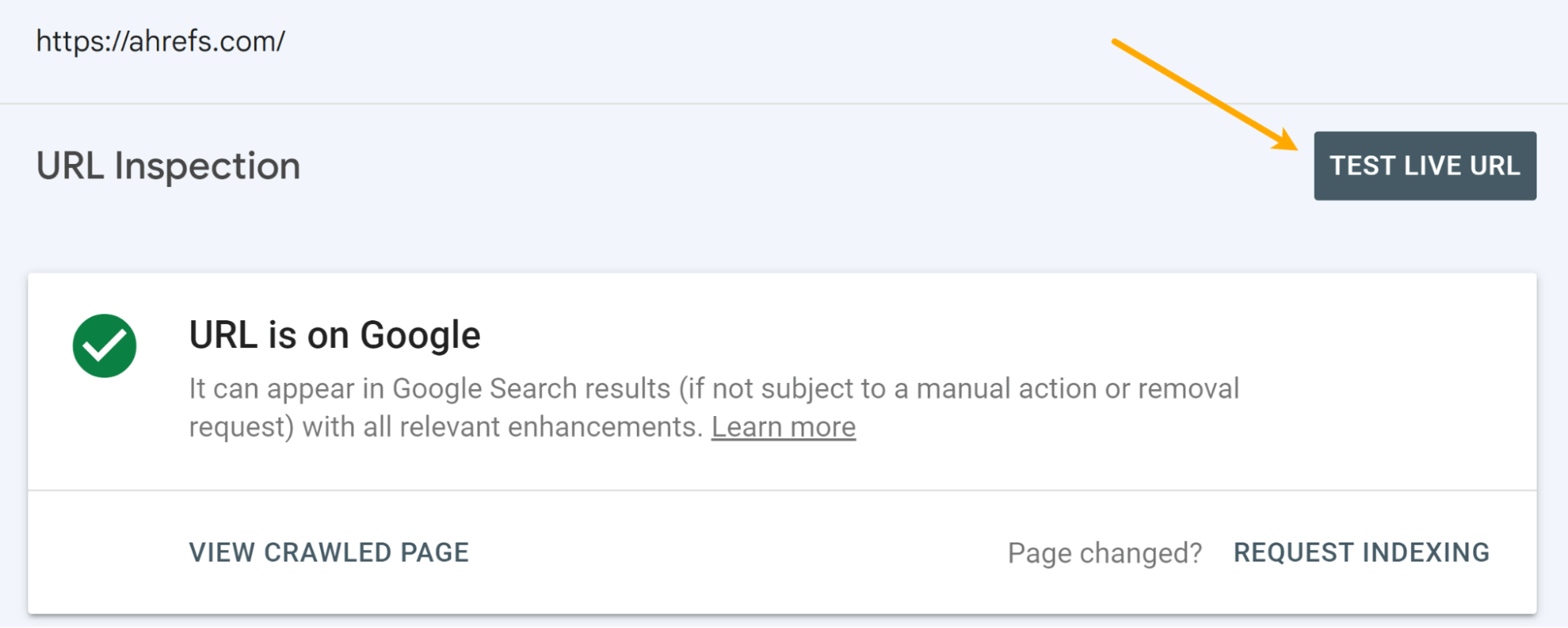

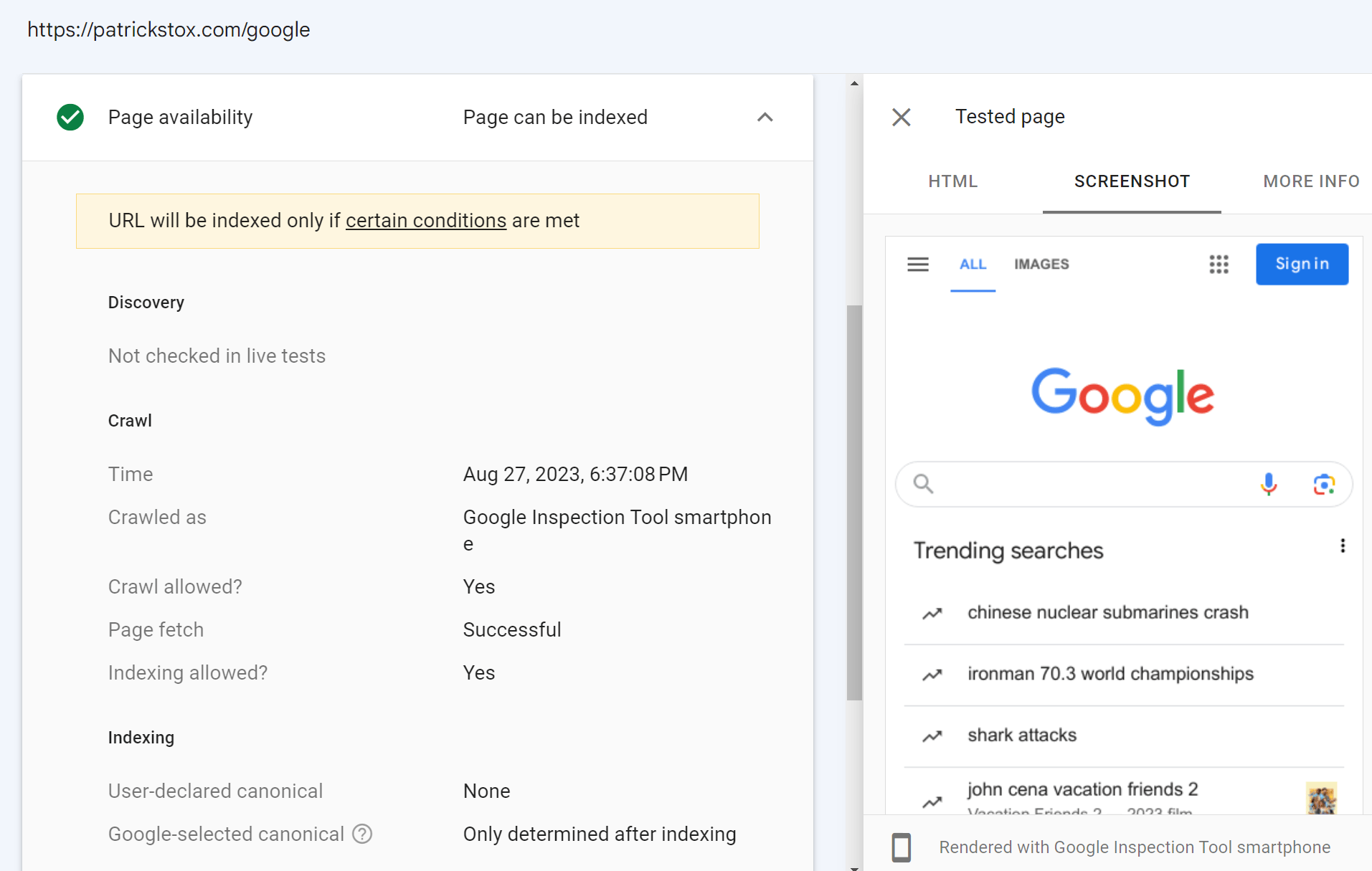

URL Inspection tool in Google Search Console

Rich Results Test tool

Mobile-Friendly Test tool

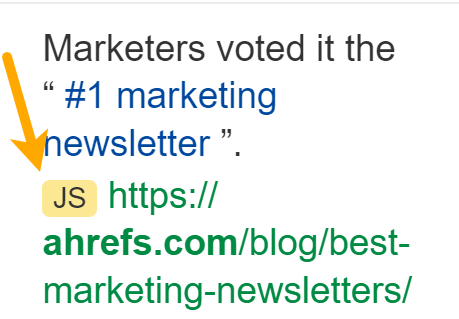

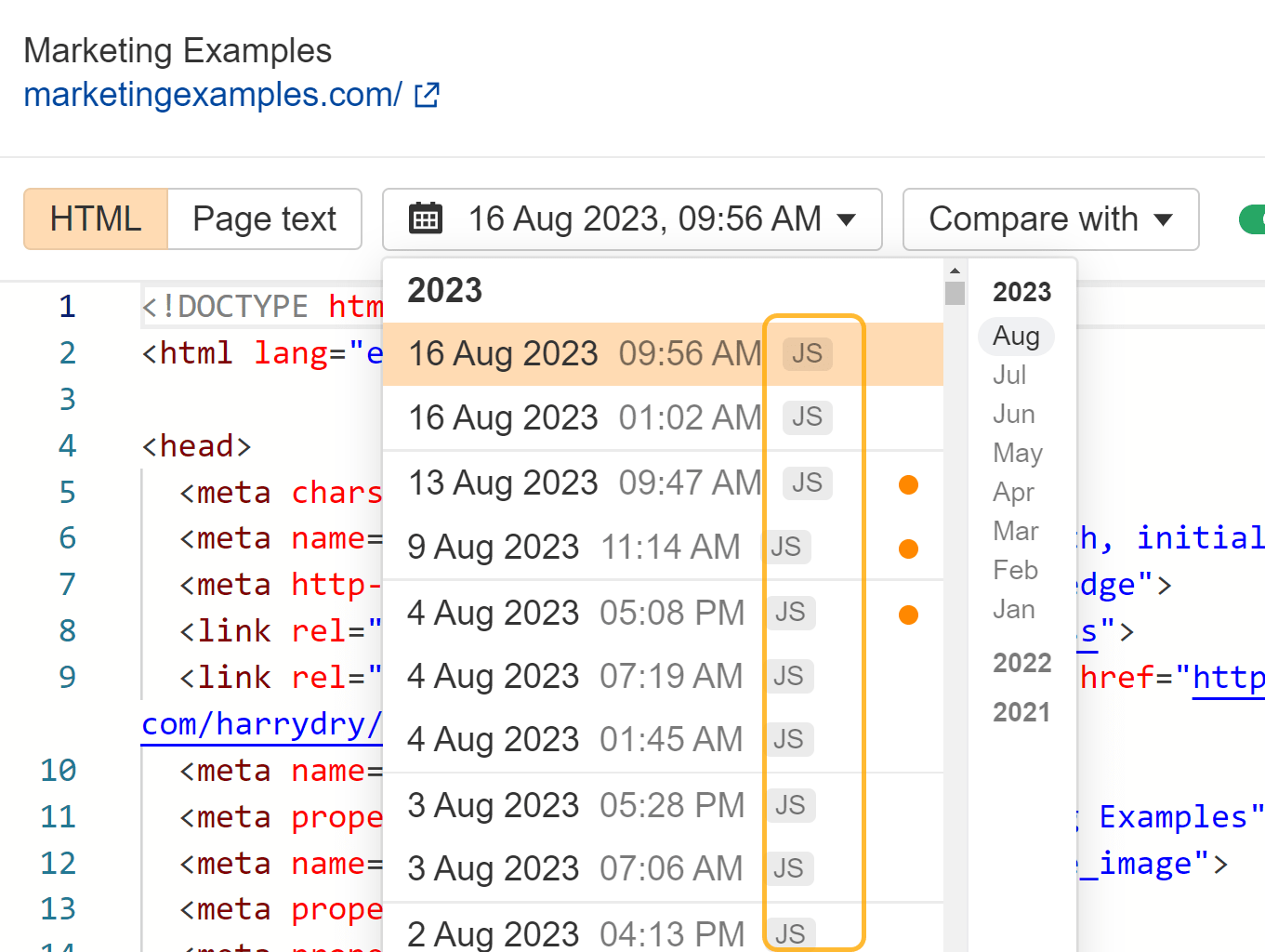

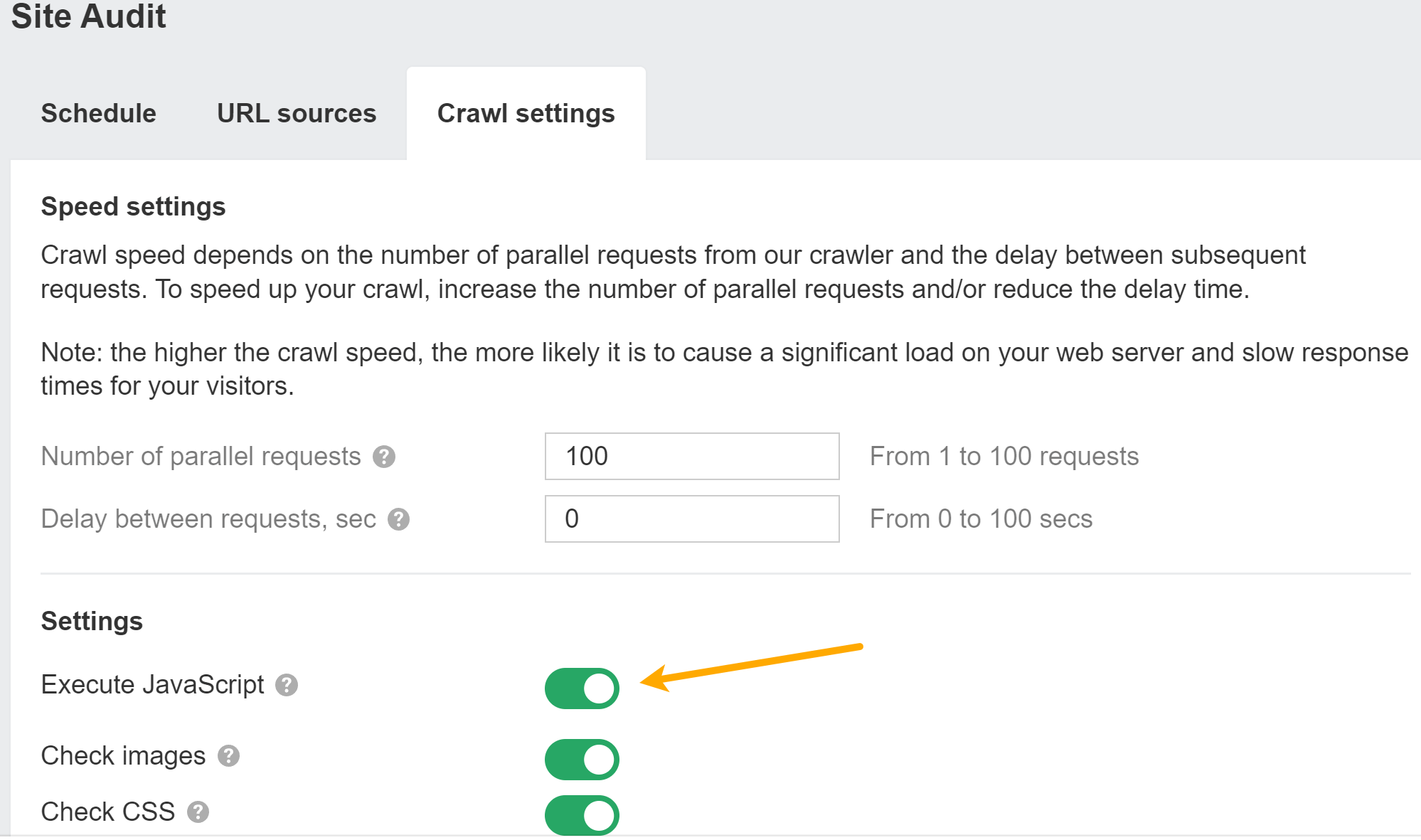

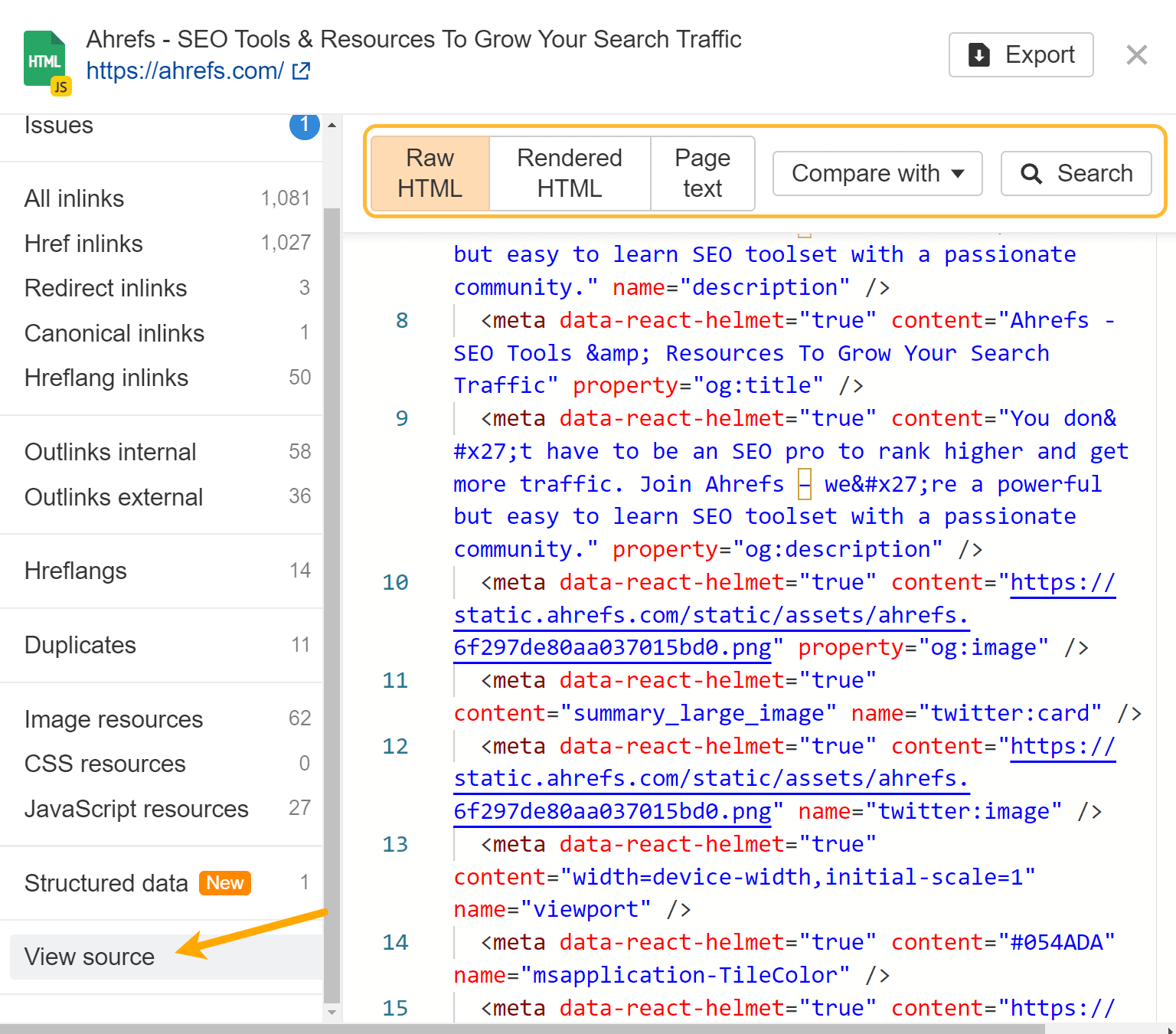

Ahrefs

View source vs. inspect

Sometimes you need to check view source

Don’t browse with JavaScript turned off

Don’t use Google Cache

Source: Google.

Source: Google.

1. Crawler

2. Processing

Resources and links

Caching

Duplicate elimination

Most restrictive directives

3. Render queue

4. Renderer

Cached resources

There’s no five-second timeout

What Googlebot sees

Blue = HTML

Yellow = JavaScript

Purple = Layout

Green = Painting

5. Crawl queue

Source: web.dev.

Source: web.dev.

Final thoughts